Thanks to everyone for your timely submissions!

Here we go...

<<< Bret >>>

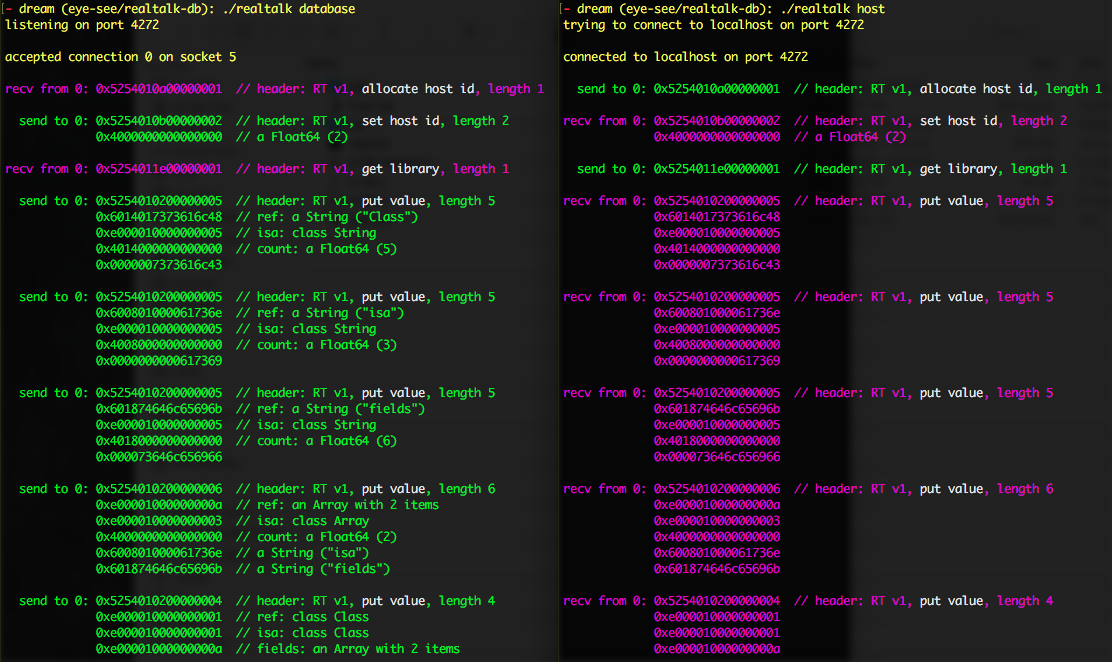

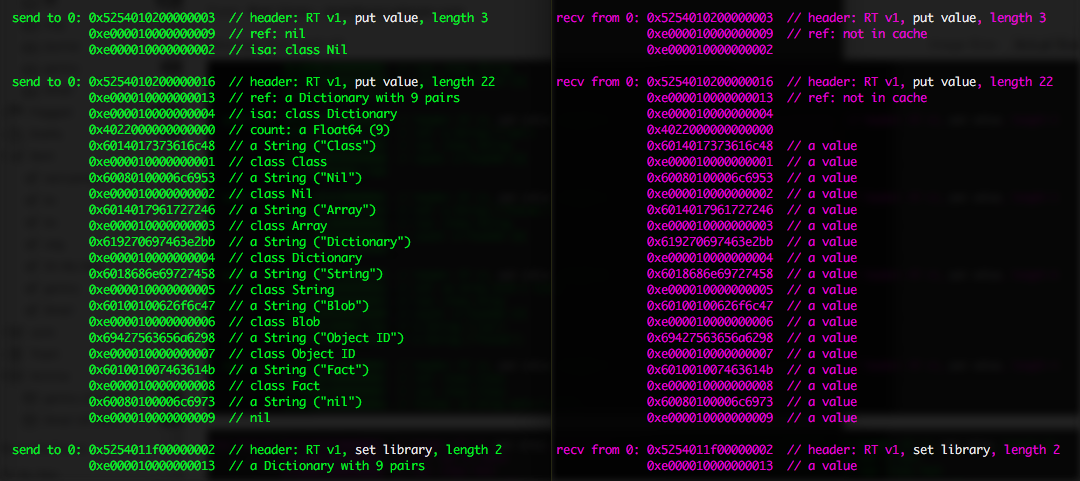

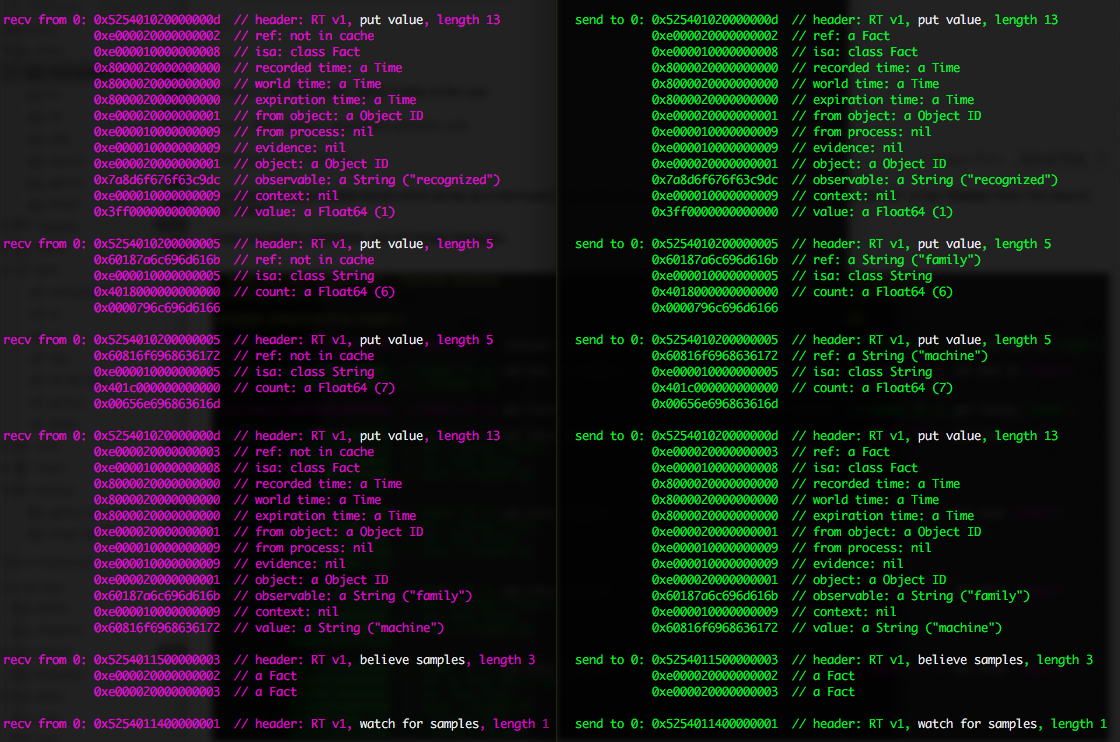

I showed a Realtalk kernel that serves as a distributed value store and process host. It implements much of the "left half" of the "Realtalk From The Ground Up" poster.

It's written in plain C, to be deliberate about memory layout. It communicates over plain TCP sockets, with no wrappers around wrappers. Its only dependency is the Lua library.

It can act as a "database" or "host", which are mostly identical. Hosts connect to a database and exchange messages, mostly putting values (including samples) into each other's value caches. One can tell the other to "believe" a set of samples. The database responds to "believing" by distributing the samples to anyone watching for them; hosts "believe" by (atomically) incorporating the set of samples into the view of the world that they present to their processes.
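Here is one way to get the atomic "believe" described above. This is a C sketch under assumptions, not the kernel's actual code. The host builds a fresh snapshot holding its current samples plus the incoming batch, then publishes it with a single atomic pointer store. A process reading the view therefore sees either all of the batch or none of it. The `Sample` and `View` types and the `believe`/`read_view` names are hypothetical. The sketch also assumes a single writer thread, and it leaks old snapshots instead of reclaiming them.

```c
#include <stdatomic.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical sample: a (key, value) pair of value refs. */
typedef struct { unsigned long long key, value; } Sample;

/* An immutable snapshot of every sample the host currently believes. */
typedef struct { size_t count; Sample items[]; } View;

/* Processes read the host's view of the world through this pointer. */
static _Atomic(View *) current_view = NULL;

/* Incorporates a batch: copy the old snapshot, append the batch, and
   publish the result with one atomic store. Assumes only one thread
   calls believe(); old snapshots are leaked, not reclaimed. */
int believe(const Sample *batch, size_t n) {
    View *old = atomic_load(&current_view);
    size_t old_n = old ? old->count : 0;
    View *v = malloc(sizeof(View) + (old_n + n) * sizeof(Sample));
    if (!v) return -1;
    v->count = old_n + n;
    if (old_n) memcpy(v->items, old->items, old_n * sizeof(Sample));
    if (n) memcpy(v->items + old_n, batch, n * sizeof(Sample));
    atomic_store(&current_view, v);  /* readers now see the whole batch */
    return 0;
}

/* Returns the most recently published snapshot (NULL before any belief). */
const View *read_view(void) { return atomic_load(&current_view); }
```

Copy-on-write keeps readers lock-free. The trade-off is copying the view on every belief, which a real store would likely avoid with a persistent data structure.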

Hosts start up with an empty value cache, not knowing how to talk about classes or arrays or even nil. In the first screenshot, the host connects to the database, requests a host id so it can generate value refs, and then requests "the library", a dictionary of basics for initial bootstrapping. We see the database put values for defining class Class:

(snip) and eventually puts the library dictionary, and says "set library". The host now knows how to talk about classes, arrays, strings, etc.

(snip) The host then records two samples in which it recognizes and names itself, and then asks to be notified of any other host's samples.
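The update doesn't say how a host turns its host id into value refs. One common scheme is assumed here purely for illustration. The database-assigned host id goes in the high bits of a 64-bit ref and a per-host counter goes in the low bits. After the one-time host id request, each host can then mint globally unique refs without coordinating. `RefGenerator`, `make_ref`, and the 32/32 bit split are all hypothetical.

```c
#include <stdint.h>

/* Hypothetical ref layout: high 32 bits = host id, low 32 bits = counter. */
typedef uint64_t ValueRef;

typedef struct {
    uint32_t host_id;     /* assigned by the database on connect */
    uint32_t next_local;  /* next unused local number on this host */
} RefGenerator;

/* Mints a new ref; unique across hosts because host ids differ.
   (Counter overflow after 2^32 refs is not handled in this sketch.) */
ValueRef make_ref(RefGenerator *g) {
    return ((ValueRef)g->host_id << 32) | g->next_local++;
}

/* Recovers which host minted a ref, and its local number. */
uint32_t ref_host(ValueRef r) { return (uint32_t)(r >> 32); }
uint32_t ref_local(ValueRef r) { return (uint32_t)r; }
```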

The next step to implement is the "right half" of the "Realtalk From The Ground Up" poster -- running processes and letting them notice and respond to the world.

<<< Chaim >>>

Last week I wrote my talk for ASU, which was then cancelled as I traveled instead for a funeral.

<<< Josh >>>

Over the past week or two, I've been helping Paula walk through the work I did on a Lua-based Realtalk server and web-based inspector. Hooray for learning! In parallel, I've been debugging some latency issues that are causing problems in the "token-tidier" test app.

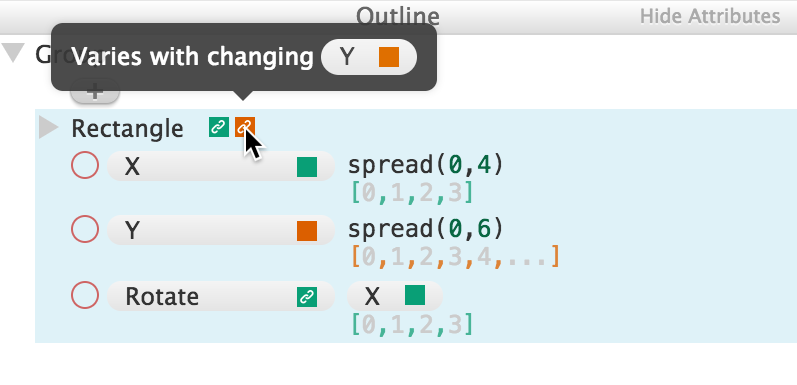

In Apparatus news, I prototyped an interface that colors spreads according to their "spread origins". Hopefully it will keep you from being surprised when origin-aligned spreads zip together and origin-unaligned spreads make a 2D table.
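This minimal sketch shows the behavior the swatches are meant to make visible. It assumes, for illustration only, that a spread is a list of numbers tagged with an origin id. Adding two spreads with the same origin zips them element by element. Adding spreads with different origins produces every pair, which is a 2D table. The `Spread` type and `spread_add` are hypothetical, not Apparatus code.

```c
#include <stddef.h>

/* Hypothetical spread: a run of values plus an id naming its origin. */
typedef struct { int origin; size_t len; const double *vals; } Spread;

/* Adds two spreads into out (caller sizes it) and returns the count.
   Same origin: zip element-wise (min length results).
   Different origins: all pairs, row-major, a.len * b.len results. */
size_t spread_add(Spread a, Spread b, double *out) {
    if (a.origin == b.origin) {
        size_t n = a.len < b.len ? a.len : b.len;
        for (size_t i = 0; i < n; i++) out[i] = a.vals[i] + b.vals[i];
        return n;
    }
    for (size_t i = 0; i < a.len; i++)
        for (size_t j = 0; j < b.len; j++)
            out[i * b.len + j] = a.vals[i] + b.vals[j];
    return a.len * b.len;
}
```

Coloring by origin makes the branch taken above visible before the result appears. Same color means zip, and different colors mean table.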

Bret thought it would be nice to use these "swatches" as an access point for scrubbing through spreads and the like. I think that's a great idea. I also remain curious whether this interface actually helps new Apparatus users. When I've batched up a few more new Apparatus features, I should throw another jam or workshop or something.

<<< Luke >>>

Demonstrated:

New meta-parameter implementation for changing global properties like which scale the song uses, what the root note of each sequence is (transpositions), overall tempo, etc. Currently implemented as “instant sliders” built from a thin strip of paper and a Go-stone.

New RFID “game cartridge” pucks for switching between pong, music, bubbles, etc.

Discussed an in-development, re-mappable token interface intended to allow more free-form interaction with the table, in a “doing with images makes symbols” style.

Shaders! These allow customizing the rendering of different synthesizers. Currently used in the additive/scanning synth to drape shifting rainbows over the objects being scanned.
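An instant slider comes down to mapping a stone's position along the paper strip to a parameter value. This sketch assumes the inputs are the strip's two endpoints and the stone's center, already in table coordinates. It projects the stone onto the strip to get a fraction from 0 to 1, then quantizes that to a whole-semitone transposition. `slider_position`, `transposition`, and the symmetric ±range mapping are illustrative choices, not the table's implementation.

```c
typedef struct { double x, y; } Point;

/* Projects the stone onto the strip (segment a->b) and returns the
   clamped fraction t: 0 at endpoint a, 1 at endpoint b. */
double slider_position(Point a, Point b, Point stone) {
    double dx = b.x - a.x, dy = b.y - a.y;
    double len2 = dx * dx + dy * dy;
    if (len2 == 0.0) return 0.0;  /* degenerate strip */
    double t = ((stone.x - a.x) * dx + (stone.y - a.y) * dy) / len2;
    return t < 0.0 ? 0.0 : (t > 1.0 ? 1.0 : t);
}

/* Maps t in [0, 1] to a whole number of semitones in [-range, +range],
   rounding half away from zero (center of the strip = no transposition). */
int transposition(double t, int range) {
    double v = (2.0 * t - 1.0) * range;
    return (int)(v < 0.0 ? v - 0.5 : v + 0.5);
}
```

Projecting onto the segment means a stone placed slightly off the strip still reads sensibly. Quantizing keeps small tracking jitter from detuning the sequence.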

Work in progress, not demoed:

Barrel distortion correction, which we’re hoping will bring a big boost to recognition accuracy and stability. It should enable keeping libraries of scores that can be mixed and matched on the table, as well as more intentional compositions.
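Here is some context on what barrel distortion correction usually involves. The update doesn't say which model is planned, so this sketch assumes a common one-coefficient radial model. Coordinates are normalized and centered on the optical center. A true point p_u then appears at p_d = p_u * (1 + k1 * |p_u|^2), with k1 < 0 for barrel distortion. Undoing this has no closed form, so the sketch inverts it by fixed-point iteration. `Vec2`, `distort`, `undistort`, and the iteration count are hypothetical.

```c
typedef struct { double x, y; } Vec2;

/* Forward model (assumed): scale each point by 1 + k1 * r^2.
   With k1 < 0, points far from the center are pulled inward (barrel). */
Vec2 distort(Vec2 u, double k1) {
    double s = 1.0 + k1 * (u.x * u.x + u.y * u.y);
    Vec2 d = { u.x * s, u.y * s };
    return d;
}

/* Inverse by fixed-point iteration: u = d / (1 + k1 * |u|^2),
   starting from the distorted point itself. */
Vec2 undistort(Vec2 d, double k1, int iters) {
    Vec2 u = d;
    for (int i = 0; i < iters; i++) {
        double s = 1.0 + k1 * (u.x * u.x + u.y * u.y);
        u.x = d.x / s;
        u.y = d.y / s;
    }
    return u;
}
```

In practice k1 comes from calibrating the camera once. Correcting only the detected token positions, rather than every pixel, may be enough for recognition.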

<<< Paula >>>

Last week I:

Pair-programmed with Josh to make the Realtalk token table inspector work in real time. Made UI updates to the inspector, such as making sure the scrollbar stays to the left when new data comes in. Learned React.

<<< Toby >>>

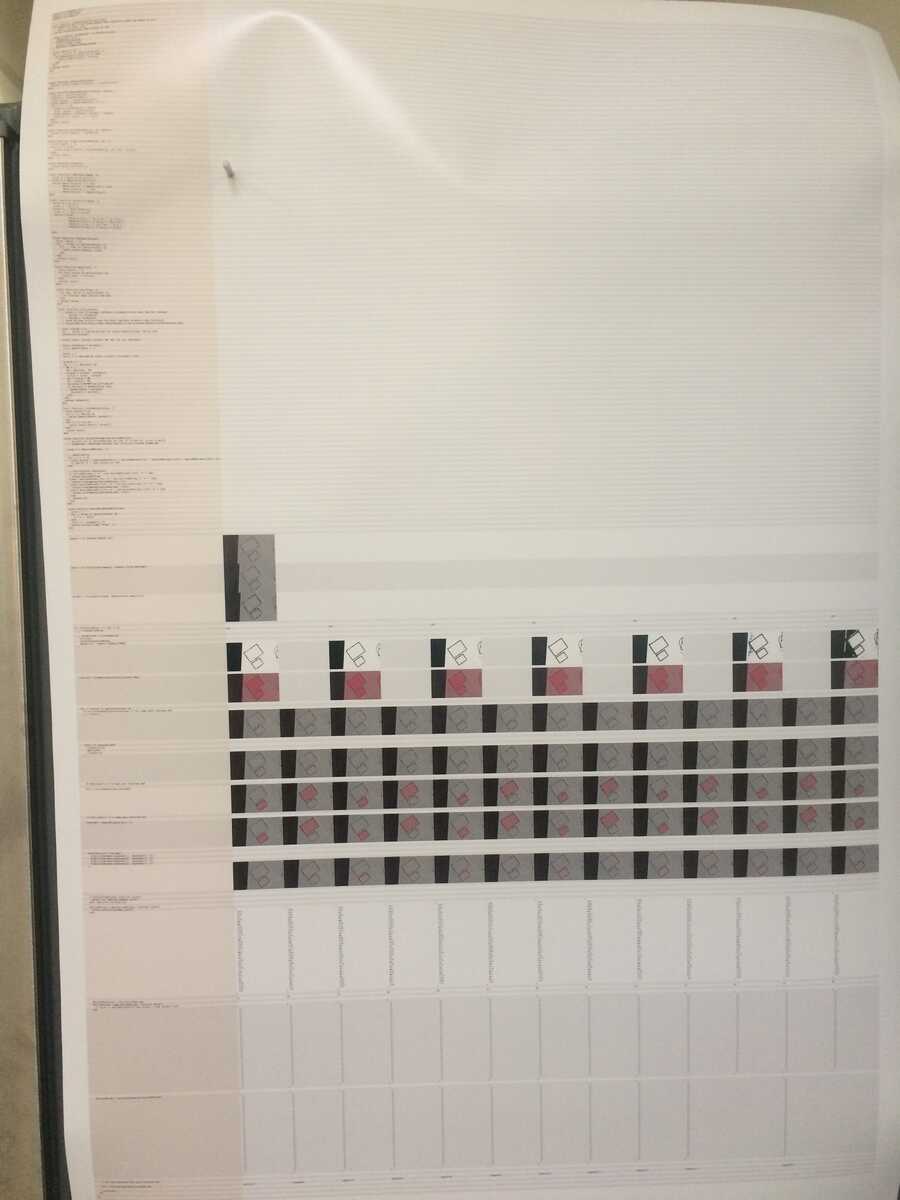

I finished writing the Lua code for recognizing "framecodes".

The format I ended up with is a 32-bit code, with 8 bits on each edge. You read it clockwise starting from the top-left, with black as 1 and gray as 0 (so the code above would read 11101101 11110000 ...).

The 1st and 9th bits must be 1 and the 17th and 25th must be 0. This is used to determine orientation.
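The reader doesn't know in advance which corner of the frame is the top-left. One way to use the orientation bits is to try all four edge rotations and keep the one where bits 1 and 9 are 1 and bits 17 and 25 are 0. This is sketched in C rather than Toby's Lua, and it assumes the 32 bits are packed most-significant-first in reading order. The first bits of the four edges form a cyclic shift of 1,1,0,0, and only one of the four shifts matches, so the orientation is unambiguous. `find_orientation` and `rotate_edges` are hypothetical names.

```c
#include <stdint.h>

/* Bit i of the code, 1-indexed, where bit 1 is the first bit read (MSB). */
static int bit(uint32_t code, int i) { return (code >> (32 - i)) & 1u; }

/* Starts reading k edges (8 bits each) later, i.e. rotates left by 8k. */
static uint32_t rotate_edges(uint32_t code, int k) {
    int s = 8 * (k & 3);
    return s ? (code << s) | (code >> (32 - s)) : code;
}

/* Returns k in 0..3 such that rotate_edges(raw, k) has bits 1 and 9 set
   and bits 17 and 25 clear, or -1 if no rotation satisfies the rule. */
int find_orientation(uint32_t raw) {
    for (int k = 0; k < 4; k++) {
        uint32_t c = rotate_edges(raw, k);
        if (bit(c, 1) && bit(c, 9) && !bit(c, 17) && !bit(c, 25)) return k;
    }
    return -1;
}
```

For example, if the camera happened to start reading one edge late, `find_orientation` returns 3, and `rotate_edges(raw, 3)` recovers the code in its canonical reading order.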

In the remaining 28 bits, 7 of them are checksum bits. The code must satisfy parity in this way:

bit 2 + bit 10 + bit 18 + bit 26 must be even

bit 3 + bit 11 + bit 19 + bit 27 must be even

...

This parity check lets the recognizer algorithm know right away whether it correctly recognized a framecode (with a 1/128 probability of a false positive).
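The parity rule can be checked in a few lines. This sketch assumes the elided lines continue the pattern through bit 8 + bit 16 + bit 24 + bit 32, which gives the seven checksum groups. It uses the same hypothetical most-significant-first packing into a `uint32_t`. Each group lines up the same position in all four 8-bit edges, so the whole check is equivalent to XOR-ing the four edge bytes and requiring the low 7 bits to be zero. A random misread makes each group even with probability 1/2, which is where (1/2)^7 = 1/128 comes from.

```c
#include <stdint.h>

/* Bit i of the code, 1-indexed, where bit 1 is the first bit read (MSB). */
static int bit(uint32_t code, int i) { return (code >> (32 - i)) & 1u; }

/* Direct form: for k = 2..8, bit k + bit k+8 + bit k+16 + bit k+24
   must be even (assumed continuation of the pattern in the text). */
int parity_ok(uint32_t code) {
    for (int k = 2; k <= 8; k++) {
        int sum = bit(code, k) + bit(code, k + 8)
                + bit(code, k + 16) + bit(code, k + 24);
        if (sum % 2 != 0) return 0;
    }
    return 1;
}

/* Equivalent form: XOR the four edge bytes; the low 7 bits of each byte
   are bits 2..8 of that edge, so they must all cancel out. */
int parity_ok_xor(uint32_t code) {
    uint32_t x = (code >> 24) ^ (code >> 16) ^ (code >> 8) ^ code;
    return (x & 0x7Fu) == 0;
}
```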

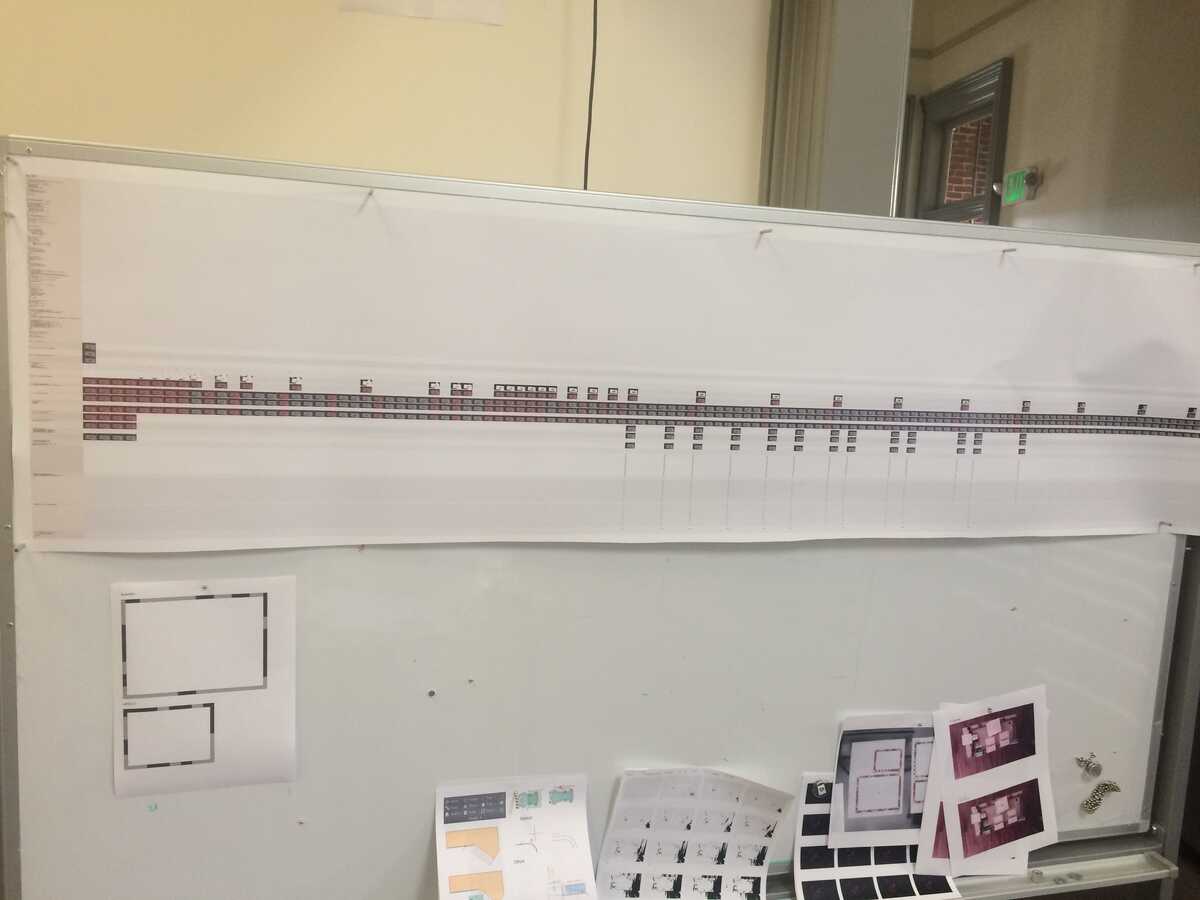

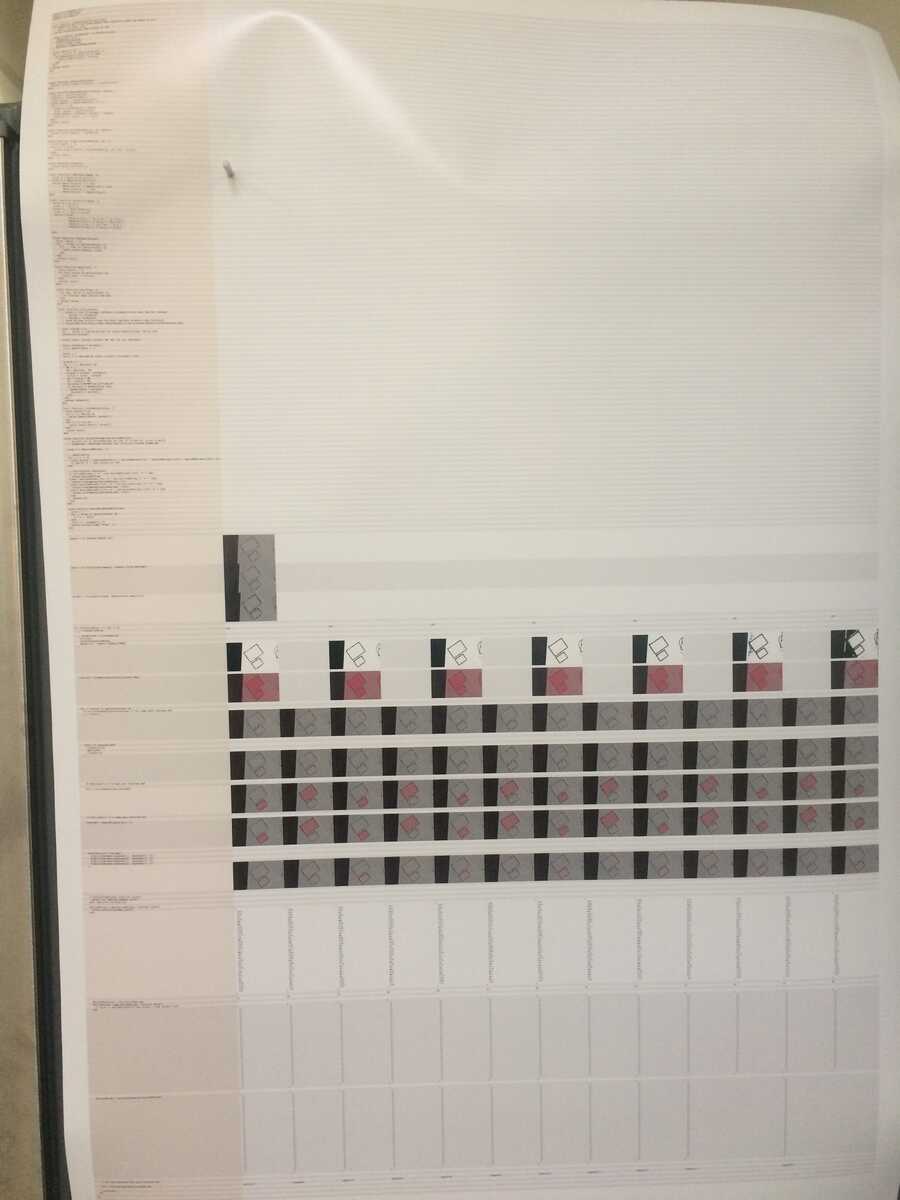

I printed out the run-time analysis of the recognizer algorithm using my in-progress visualizer.

This one shows the big picture of an entire run through the algorithm. The horizontal dimension shows all of the runs through the for-loops. The couple of spindly "legs" are runs where it made it to the end (and successfully found a framecode).

This one is a zoom-in in two ways. First, I've zoomed in on the camera image (my screen-based viewer lets you zoom in on any part of an image). Second, I've "zoomed in" on successful runs of the algorithm, showing only runs where it got to a given line. (This is the "checkbox" from my previous demo.)
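The "checkbox" filter amounts to a predicate over recorded runs. This sketch assumes, hypothetically, that the visualizer records each pass through the loops with a bitmask of the source lines it reached. Filtering for a given line then keeps only the runs whose bit for that line is set. A 64-bit mask caps this at 64 lines, which is a simplification. `Run`, `mark_line`, and `runs_reaching` are illustrative names.

```c
#include <stddef.h>
#include <stdint.h>

/* One pass through the recognizer's loops, with the lines it reached
   (bit n set = line n executed; lines limited to 0..63 here). */
typedef struct { int run_id; uint64_t visited; } Run;

/* Called by instrumentation each time a run executes a line. */
static void mark_line(Run *r, int line) { r->visited |= (uint64_t)1 << line; }

/* Copies into out the runs that reached `line`; returns how many. */
size_t runs_reaching(const Run *runs, size_t n, int line, Run *out) {
    size_t k = 0;
    for (size_t i = 0; i < n; i++)
        if (runs[i].visited & ((uint64_t)1 << line)) out[k++] = runs[i];
    return k;
}
```

Choosing a later line keeps fewer runs, which is how the zoomed view narrows down to the spindly "legs" that reached the end.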

I am generally happy with how this visualizer is going. It is already useful for explaining an already implemented algorithm, and it's on its way to being useful during the programming/exploration process.

The printouts were an experiment to try to get a sense for what this tool would be like at scales larger than a screen. I think it was nice during the show and tell that everyone could see it, and look at different parts of it simultaneously. Being able to point at something and ask "what's going on here?" is very useful.

But for these large, static explainer visualizations, I (the implementor) had already curated the views. Even the zoomed-out view is not the totally zoomed-out view: I culled any contours smaller than a certain area (including them would have made the poster much longer). It's not clear to me how the visualizer will play out in the world during the programming/exploration process, since there you want the quick filtering and pivoting that screen-based exploration gives you.
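Culling by area needs an area for each contour. This sketch assumes contours arrive as closed polygons of points. It computes the enclosed area with the shoelace formula and keeps only contours at or above a threshold. The types, the names, and the choice of shoelace are assumptions, since the update doesn't say how the area was computed.

```c
#include <stddef.h>

typedef struct { double x, y; } Pt;
typedef struct { const Pt *pts; size_t n; } Contour;

/* Shoelace formula: area enclosed by a simple closed polygon,
   independent of whether its points run clockwise or counterclockwise. */
double contour_area(const Pt *p, size_t n) {
    double twice = 0.0;
    for (size_t i = 0; i < n; i++) {
        const Pt *a = &p[i], *b = &p[(i + 1) % n];
        twice += a->x * b->y - b->x * a->y;
    }
    return (twice < 0 ? -twice : twice) / 2.0;
}

/* Copies contours with area >= min_area into out; returns how many. */
size_t cull_small(const Contour *in, size_t count, double min_area,
                  Contour *out) {
    size_t k = 0;
    for (size_t i = 0; i < count; i++)
        if (contour_area(in[i].pts, in[i].n) >= min_area) out[k++] = in[i];
    return k;
}
```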

I wanted to use the framecodes to build a video "photo album" of all the little clips that Chaim is collecting of the music toy, Paula of the token table, etc. You'd flip through and see the videos on the pages, and you could write notes, clip out videos, collage, etc.

So I started getting video display working on Lumiere and discovered that the Raspberry Pi (rpi) is too slow to make video work with LOVE. Luke and I will look into building a compositor that makes lower-level OpenGL calls as a successor/complement to Lumiere. (We should also consider rpi alternatives with beefier display capabilities.)