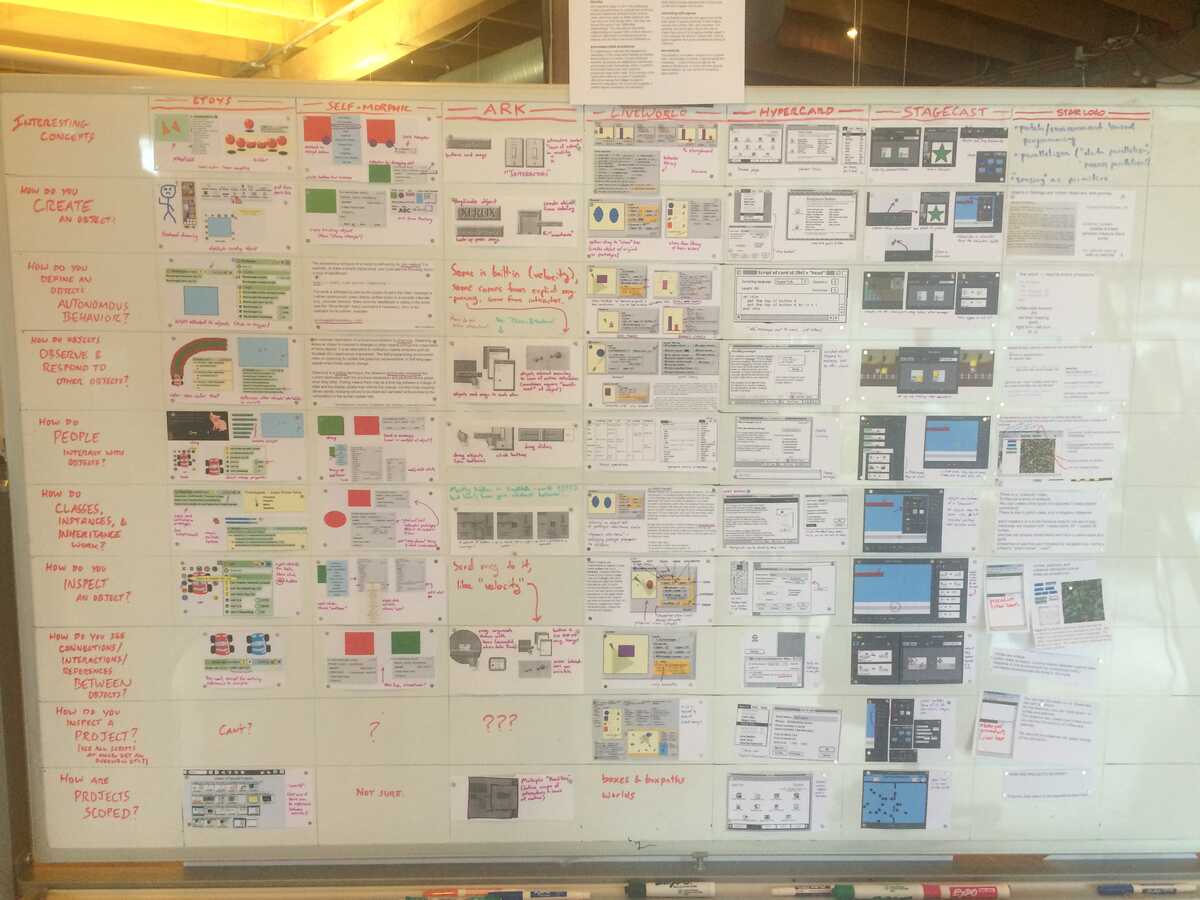

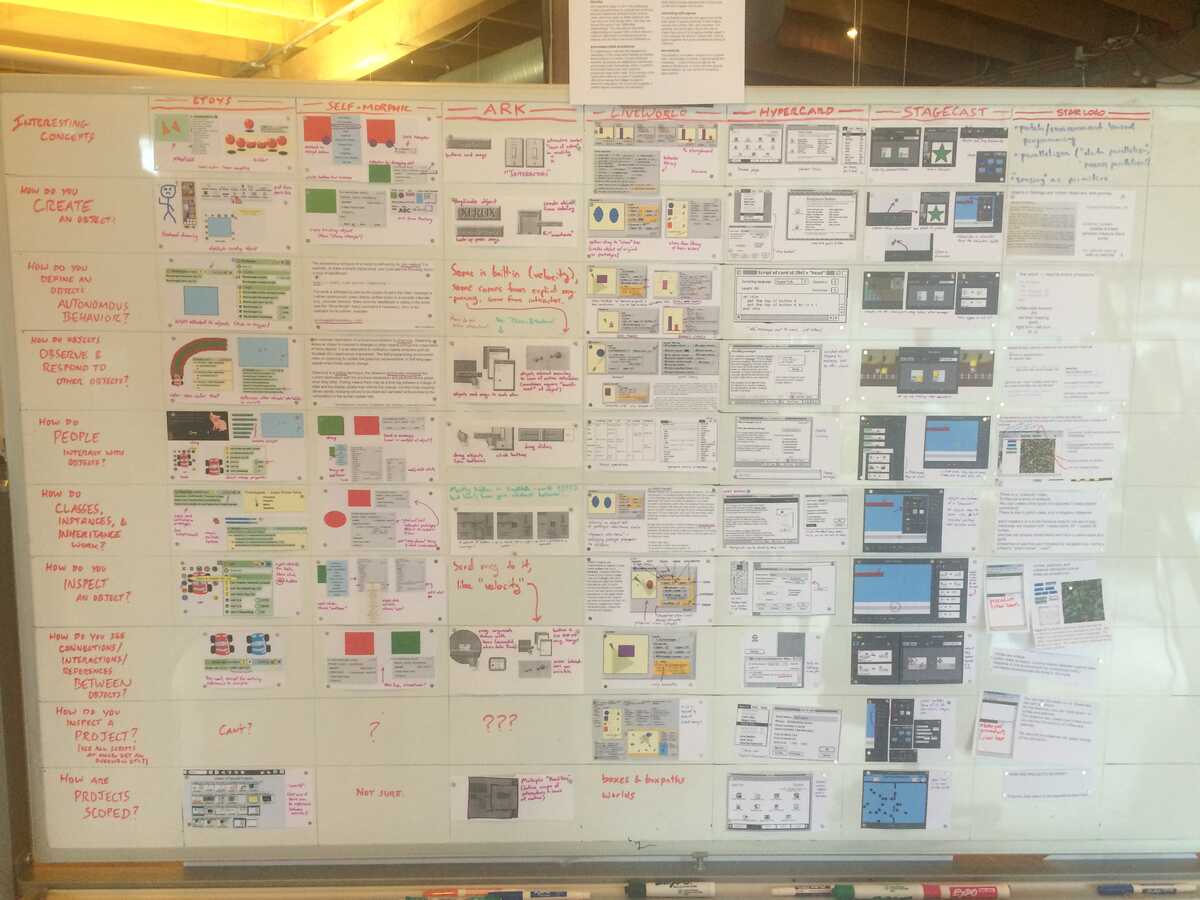

On Friday a couple of us were playing with the new high-res camera and discussing opportunities around whiteboard archiving.

(pic taken with my iphone, not high-res)

I mentioned the idea of "lossless" archiving for whiteboards that are primarily magneted image collages like Bret's summary of media environments, Paula/Nagle's ometropolis summary, my mirror hacking workshop summary, etc. The idea is that the printer would remember every image it ever prints. Then, when you take a photo of a whiteboard, the system would try to register (this is the CV word) recently printed images to regions on the whiteboard. Then, the archived photo could have the actual lossless image superimposed in the right place, or the whiteboard photo could link with an "image map" (anyone remember this old HTML technology?) to the original printed image for better legibility.

But this idea of linking printed images to digital artifacts goes way beyond increasing legibility of whiteboard photographs.

The system could parse whiteboards live and this could aid in presentation. For example, Paula mentioned on Thursday how she wished she had a "zoom in" command to discuss pictures she had printed for StarLogo. Instead, Bret had to google image search to bring these images up on the big screen. If instead the system knew where all the images on the whiteboard came from, then you could just laser the image in question and the system could make it show up on the big screen.

This is not limited to static images. Bret suggested that whenever he "screen captured" an image from eToys, the system could save a snapshot of the dynamic eToys state, print out a screen capture, and link this screen capture image to the state. So then in his presentation, he could laser an image and then start playing with the live eToys example. This is related to the "physical object linking back to the source" idea we discussed for Apparatus laser cuts.

This would also work for videos. I am frustrated that I can't put videos or animations on my whiteboards. But with this system, I could print a representative frame from the video and link it back to the video (a video coaster!). Then you could pull up the video by e.g. holding an iPad next to it. Or I could draw a rectangle on the whiteboard and place the "video coaster" next to it and the system would start projecting the video on loop into the rectangle.

Implementation

I've been looking into how this automatic image identification and registration could be implemented. I'm still in the beginning stages of reading papers and doing experiments.

Probably the pro way to do this would be to find SIFT features (breezy overview, original paper) on the whiteboard and correlate those to features in the images from the printer "database". A feature is a distinctive region of an image, usually a highly textured area centered around a distinctive point (like a corner or a dot). The algorithm gives each feature a descriptor which describes the feature in a scale and rotation invariant way. You can match images together by finding features on each, then comparing descriptors to find likely feature matches.

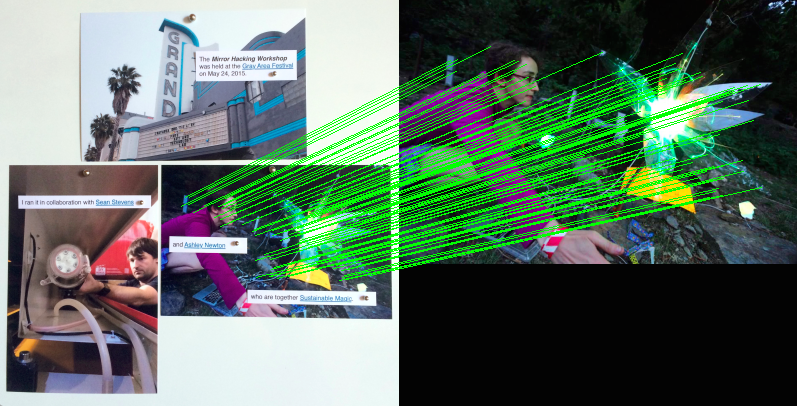

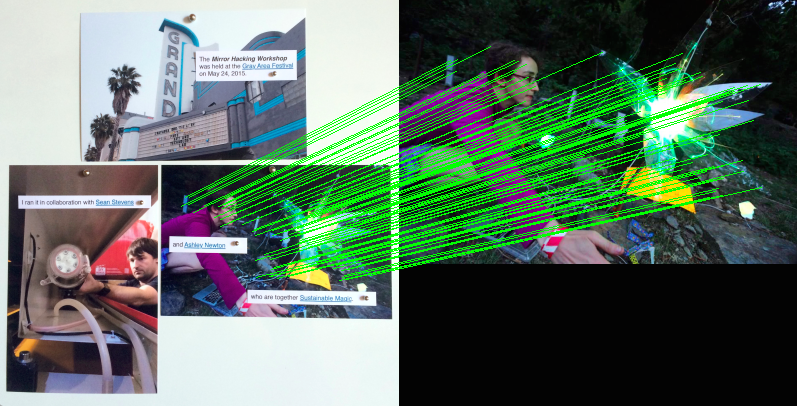

I have had mixed luck with this approach, as I believe Rob has as well. But today I got some potentially promising results following this tutorial on panorama image stitching with SIFT features in Python.

(the green lines show matched features from the whiteboard image on the left to the original jpeg on the right)

The advantage of the feature matching approach is that it would work for cropped/obscured/modified images, as long as there were sufficient features to find a homography (geometric transformation mapping one image to the other).

There are people who have used SIFT matching at very large scales for near duplicate image detection (e.g. Google's query by image, Google Goggles, TinEye) so our scale should be tractable.

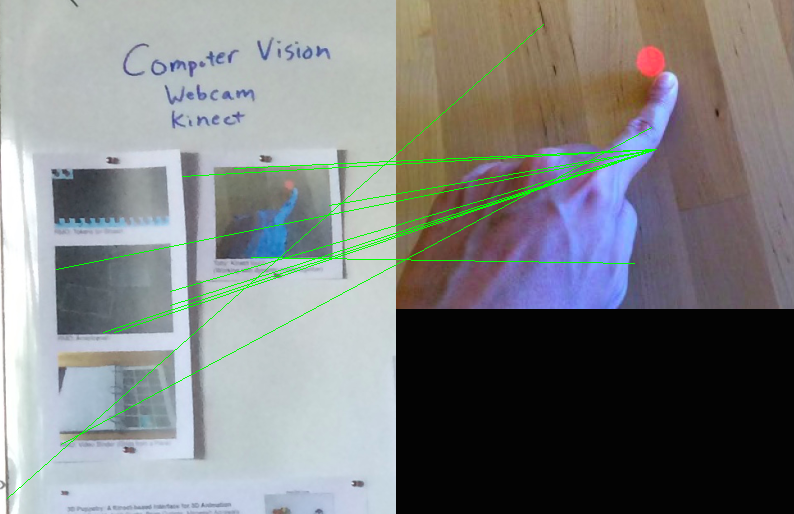

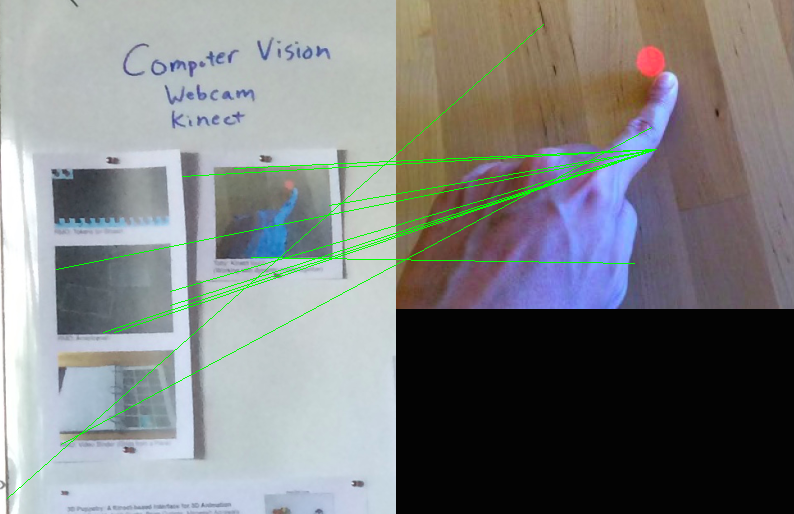

I also got less good results:

I imagine the lighting, print quality, and capture quality are implicated. But this requires more investigation.

SIFT is meant to be scale-invariant, meaning it should detect the same features on an image if you were to scale the image, and the features should have the same descriptor. But with calibrated cameras and surfaces, or with depth cameras, we could eliminate the scaling variable. This could potentially make our problem a lot simpler, but more research is needed.

Also, as I write this I'm realizing a CV glossary would be useful. I'll try to start putting this together.