Last week I built prototypes around my process of researching and presenting on computer sensing.

I divided the workflow into three stages: collecting, organizing, and presenting.

As we'll see, though, these stages blend into each other and form a cycle.

Collecting

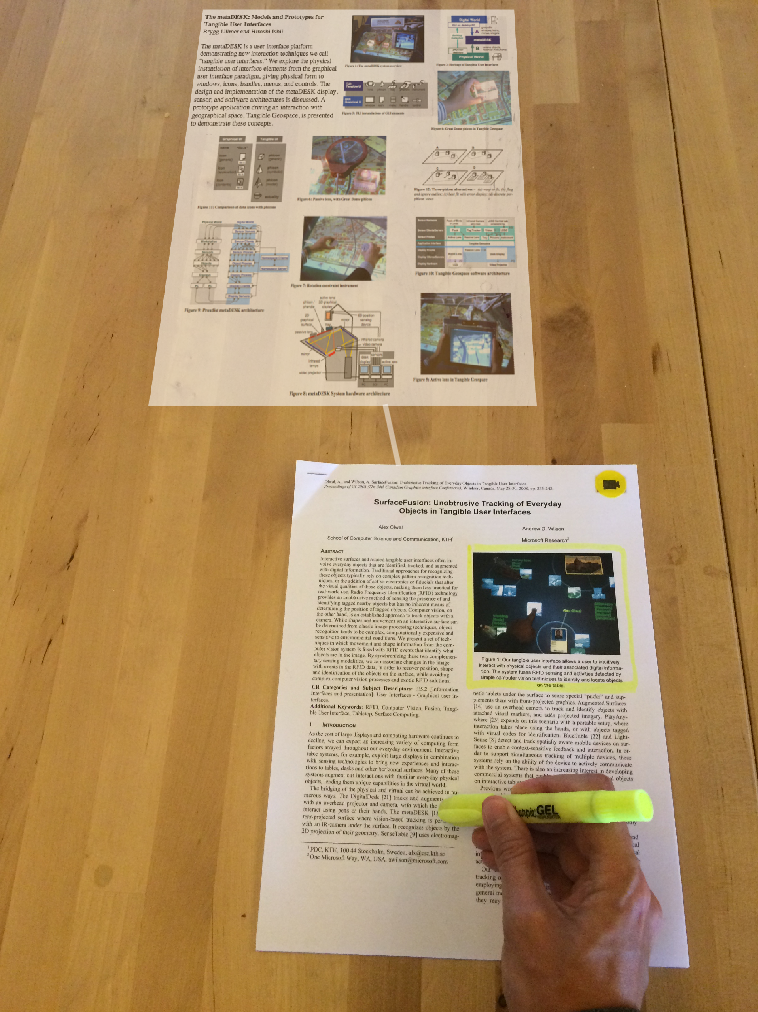

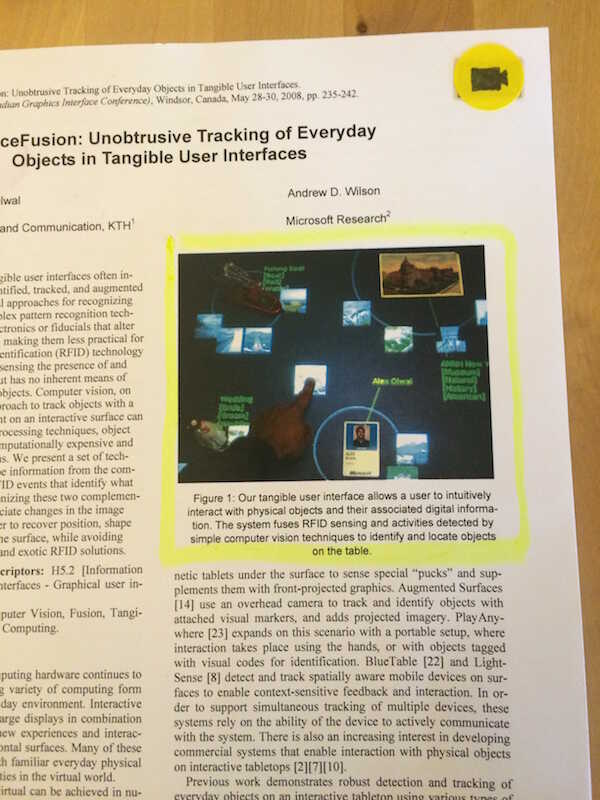

When collecting, I want to quickly follow the references I find in papers. Here is an augmented reading desk that lets you follow these links while reading a paper (on paper). Pointing at a reference projects a preview of the linked-to paper.

If the paper looks interesting, I can say "print" to get a hard copy of the whole thing.

I think this preview format works well for academic papers: it shows the title, authors, abstract, and all the figures.

Glen suggested lowering the activation energy for following references even further. When a paper is on the table, previews of everything it references could be shown surrounding it, or perhaps just the references from the page you're on. The idea is to make references easier to follow (right now it is hard!) and to promote serendipitous discoveries.
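Here is a minimal sketch of that surrounding layout, assuming the system already knows which references are cited on each page. The `citations_by_page` mapping, the paper IDs, and the ring arrangement are hypothetical choices for illustration, not part of the prototype:

```python
from __future__ import annotations

import math


def ring_layout(cx: float, cy: float, radius: float, n: int) -> list[tuple[float, float]]:
    """Evenly space n points on a circle around (cx, cy).

    Starts at 12 o'clock and goes clockwise, assuming table coordinates where
    y grows downward (as in camera and projector images).
    """
    return [
        (cx + radius * math.cos(-math.pi / 2 + 2 * math.pi * i / n),
         cy + radius * math.sin(-math.pi / 2 + 2 * math.pi * i / n))
        for i in range(n)
    ]


def place_previews(
    citations_by_page: dict[int, list[str]],  # hypothetical: page -> cited paper IDs
    page: int,
    center: tuple[float, float],
    radius: float,
) -> dict[str, tuple[float, float]]:
    """Map each reference cited on `page` to a projected preview position."""
    cited = citations_by_page.get(page, [])
    return dict(zip(cited, ring_layout(center[0], center[1], radius, len(cited))))
```

Showing only the current page's references keeps the ring small; passing every page's citations instead would give the "all referenced previews" variant.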

Organizing

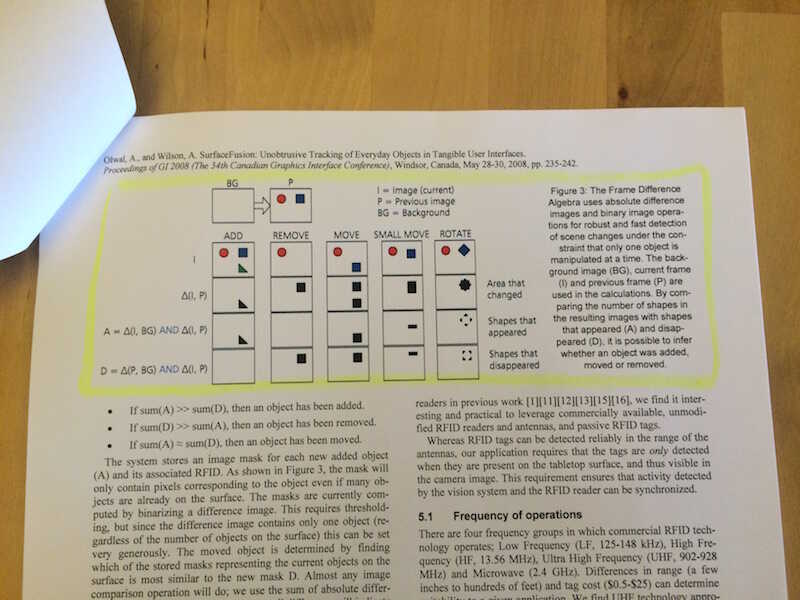

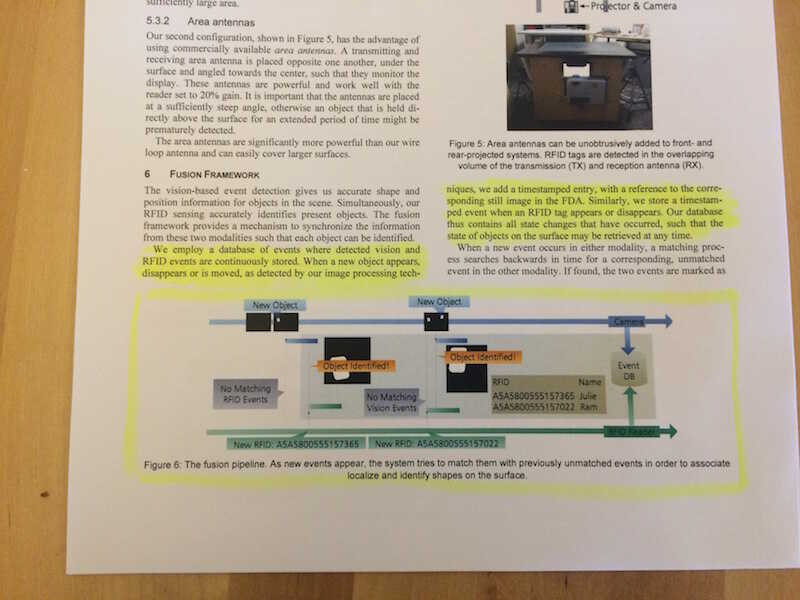

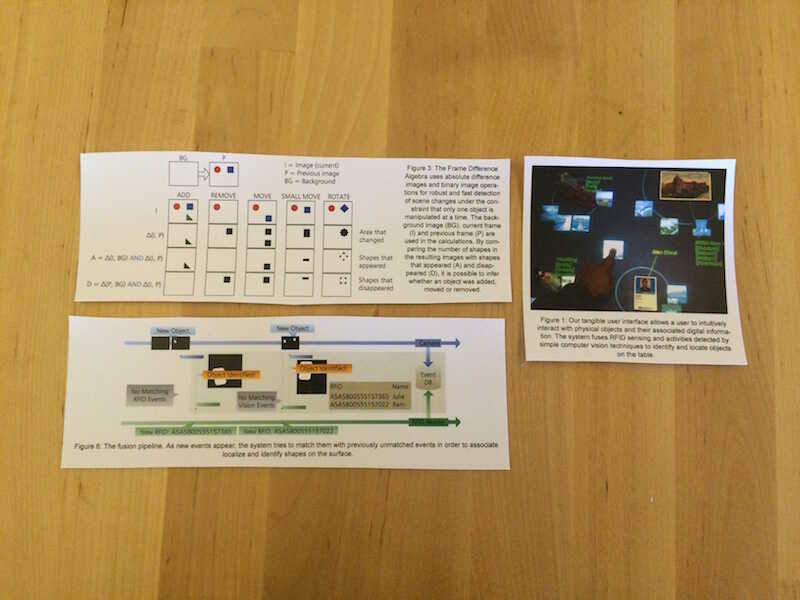

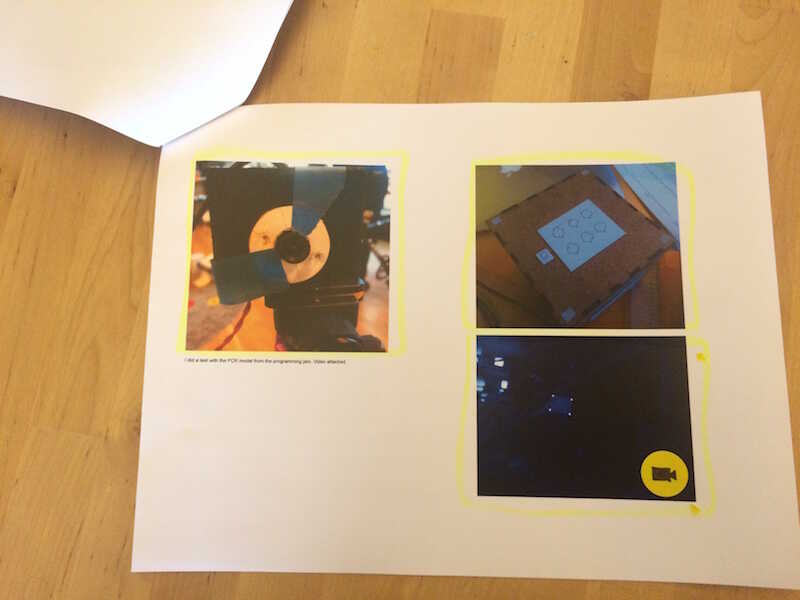

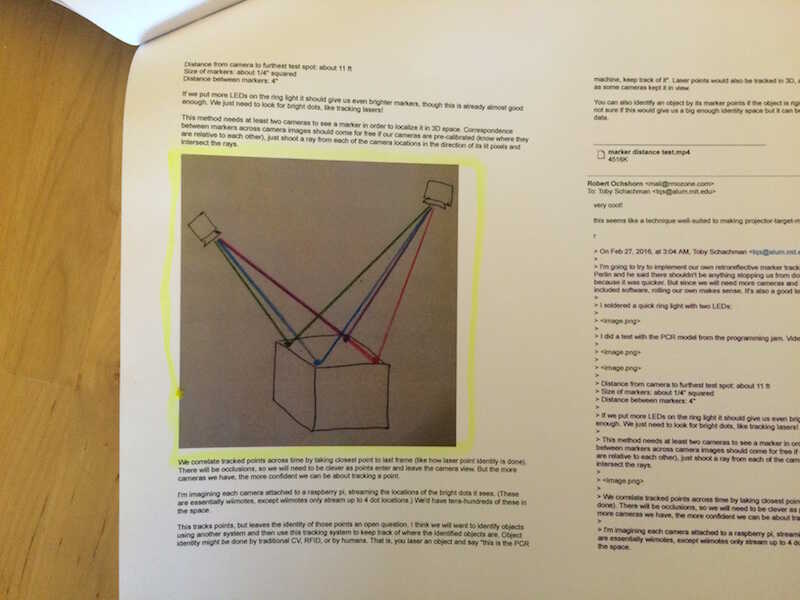

I want to be able to clip out pictures and passages of text and rearrange (collage) them. To do this, I highlight regions of the papers and say "print".

The clippings are printed out, and I arrange them on the table along with some of my own notes:

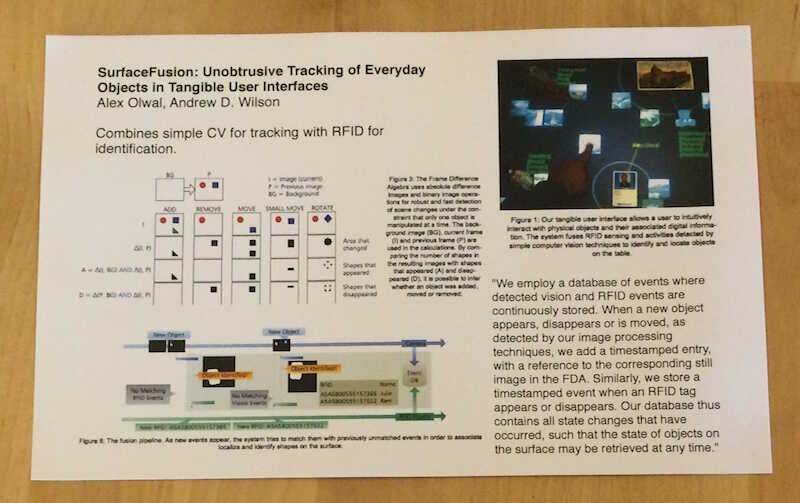

When I'm satisfied, I have the system print a copy of my arrangement:

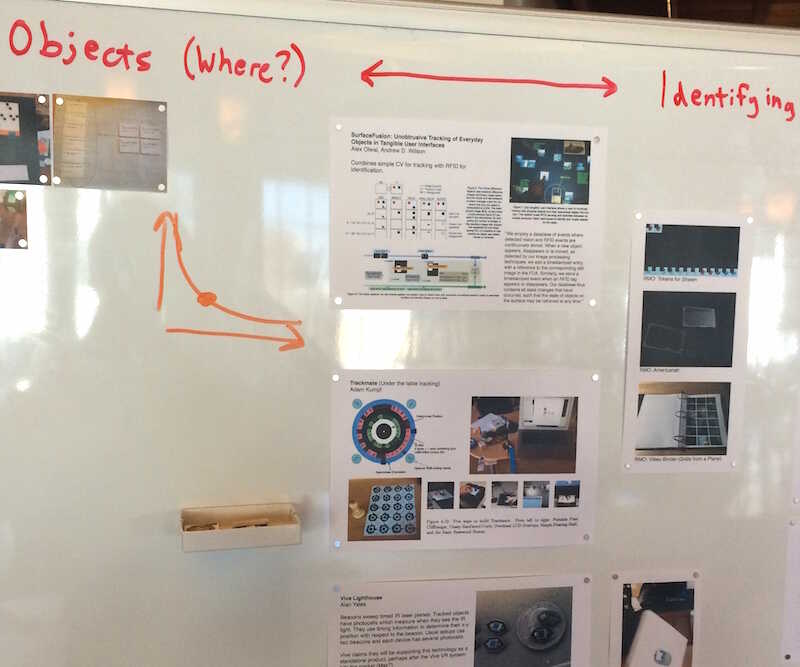

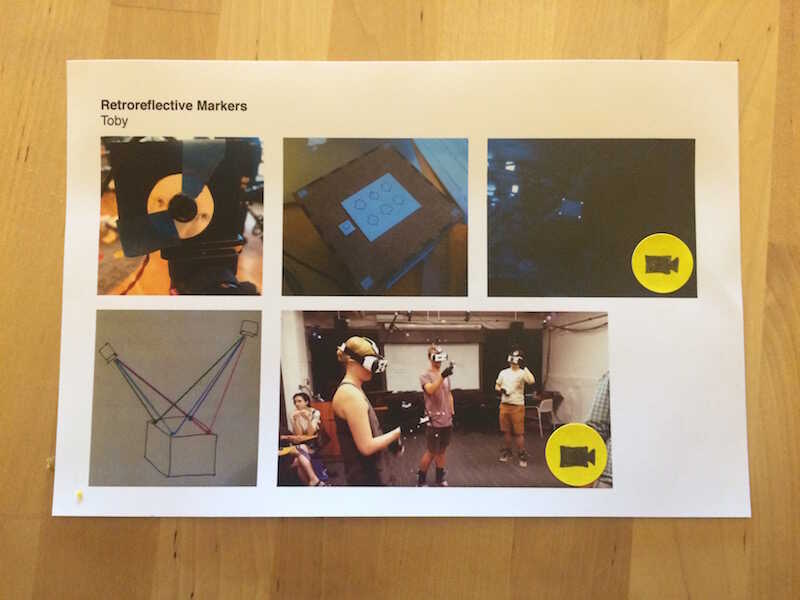

I then collage this collage onto a bigger collage on my whiteboard.

More Collecting

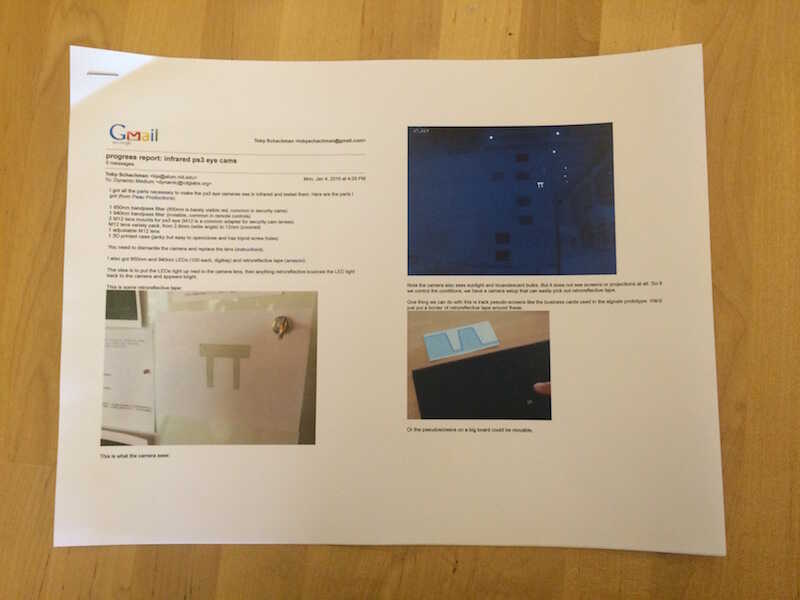

I also collect material from around the lab. I point at a label on the research gallery and tell the system to print the email thread.

I "clip out" sections of this email with my highlighter and arrange them into a collage for my whiteboard.

Presenting

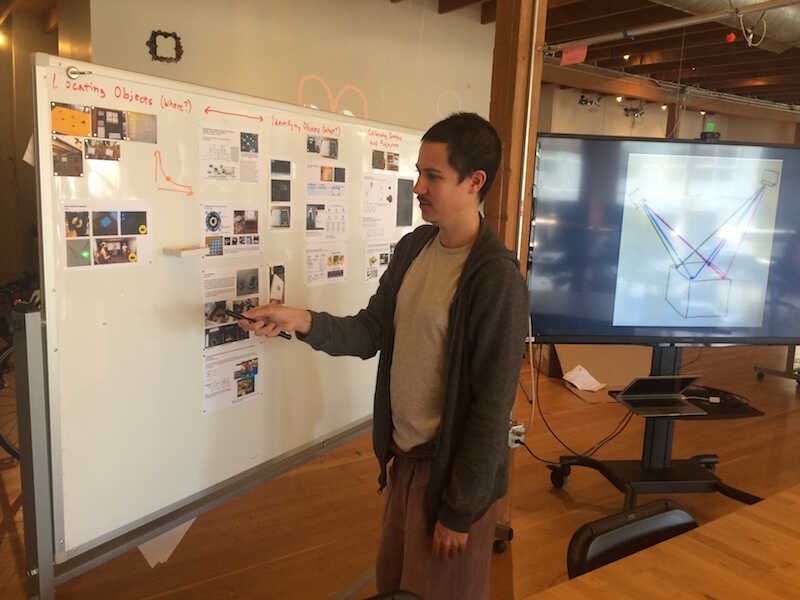

Finally, since every piece of my media points back to its original source, I can point at something on my whiteboard and enlarge it on the big screen to show the group.

This works for still images, videos (as in the video RFID demo), and even "snapshots" of live systems. For example, Bret could point at his "dynamic screenshots" from eToys, HyperCard, etc. and load those interactive examples up on the big screen for group discussion.
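One way to make "point at the whiteboard, get the original" work through a collage of collages is to record where each item was placed and descend through the nesting. This is a minimal sketch, assuming placements are axis-aligned rectangles at natural size (no scaling or rotation); `Clipping`, `Collage`, `Placement`, `resolve`, and the source IDs are all hypothetical names:

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional, Union


@dataclass
class Clipping:
    """A printed cut-out; `source` is a hypothetical ID of the original document."""
    label: str
    source: str


@dataclass
class Placement:
    """An item placed at rectangle (x, y, w, h) inside a collage."""
    item: Union[Clipping, "Collage"]
    x: float
    y: float
    w: float
    h: float

    def contains(self, px: float, py: float) -> bool:
        return self.x <= px < self.x + self.w and self.y <= py < self.y + self.h


@dataclass
class Collage:
    label: str
    placements: list[Placement] = field(default_factory=list)


def resolve(collage: Collage, px: float, py: float) -> Optional[Clipping]:
    """Descend through nested collages to the clipping under point (px, py).

    Later placements are treated as lying on top, so they are checked first.
    Returns None if the point falls on empty space.
    """
    for p in reversed(collage.placements):
        if p.contains(px, py):
            # Convert into the child's local frame (assumes no scaling).
            lx, ly = px - p.x, py - p.y
            if isinstance(p.item, Collage):
                return resolve(p.item, lx, ly)
            return p.item
    return None
```

For example, a clipping placed in a desk arrangement, which is itself pinned to the whiteboard, still resolves to its paper:

```python
fig = Clipping("Figure 3", source="paper:sensing-survey")
desk = Collage("desk arrangement", [Placement(fig, 0, 0, 100, 80)])
wall = Collage("whiteboard", [Placement(desk, 200, 50, 300, 200)])
hit = resolve(wall, 250, 90)
print(hit.source if hit else None)  # paper:sensing-survey
```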

This is also where the stages mix: while presenting, the group might want to follow a picture back to the original paper, then follow links within that paper, and so on. Presenting becomes collecting.

The presentation itself could be recorded and linearized into a document (like this email), with spoken transcript interspersed with the media that was called up. Presenting becomes organizing.
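A rough sketch of that linearization, assuming the recording yields timestamped transcript segments and a log of when each piece of media was displayed (both data shapes are hypothetical). It merges the two streams by time and emits media in the same markdown image syntax this email uses:

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Union


@dataclass
class Utterance:
    t: float   # seconds from the start of the recording
    text: str


@dataclass
class Shown:
    t: float
    media: str  # hypothetical media identifier, e.g. an image filename


def linearize(utterances: list[Utterance], shown: list[Shown]) -> str:
    """Interleave spoken transcript and displayed media into one document.

    sorted() is stable, so at equal timestamps speech comes before media
    (utterances are listed first).
    """
    events: list[Union[Utterance, Shown]] = sorted(
        [*utterances, *shown], key=lambda e: e.t
    )
    blocks = []
    for e in events:
        if isinstance(e, Utterance):
            blocks.append(e.text)
        else:
            blocks.append(f"![{e.media}]({e.media})")
    return "\n\n".join(blocks)
```

A real transcript would likely need smarter grouping (e.g. merging consecutive utterances into paragraphs), but simple time ordering already gives a readable draft.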

Thoughts

I made a list of "actions" that I think you'd need to be able to perform on any given "reference" in the system:

- Print

- Find source (of a clipping)

- Follow links

- Display (on surface X)

- Annotate

- Make link

- Highlight (distinguish a region/clipping)
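In the prototype several of these actions are triggered by voice (saying "print"). A minimal sketch of mapping a transcribed phrase to one of the actions above, assuming a speech recognizer has already produced plain text; the `Action` enum and `parse_command` helper are hypothetical:

```python
from __future__ import annotations

from enum import Enum
from typing import Optional


class Action(Enum):
    PRINT = "print"
    FIND_SOURCE = "find source"
    FOLLOW_LINKS = "follow links"
    DISPLAY = "display"
    ANNOTATE = "annotate"
    MAKE_LINK = "make link"
    HIGHLIGHT = "highlight"


def parse_command(utterance: str) -> Optional[tuple[Action, str]]:
    """Return (action, argument) for a spoken phrase, or None if unrecognized.

    The argument is whatever follows the action phrase, e.g. the target
    surface in "display on big screen". A leading "on " is dropped.
    """
    words = utterance.lower().strip()
    for action in Action:
        if words == action.value or words.startswith(action.value + " "):
            rest = words[len(action.value):].strip()
            if rest.startswith("on "):
                rest = rest[3:]
            return action, rest
    return None
```

The action is then applied to whatever reference the user is currently pointing at, which keeps the vocabulary small and uniform across papers, clippings, and collages.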

Other riffs from the group:

- The highlighter colors could function as a form of tagging, as people already tend to use them. "Show me all highlights done with this pen."

- When you follow a clipping back to the source, the projection mapping could show the rest of the paper "around" the clipping, putting the clipping back in its original context.
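The color-as-tag idea amounts to an index from pen color to highlights. A minimal sketch, assuming the camera can report each highlight's color along with its source and page; `Highlight`, `group_by_color`, and the example IDs are hypothetical:

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Highlight:
    source: str    # hypothetical ID of the highlighted document
    page: int
    color: str     # pen color, as reported by an assumed camera tracker
    text: str


def group_by_color(highlights: list[Highlight]) -> dict[str, list[Highlight]]:
    """Treat each pen color as a tag: color -> highlights made with that pen.

    Highlights keep their original order within each color.
    """
    tags: dict[str, list[Highlight]] = defaultdict(list)
    for h in highlights:
        tags[h.color].append(h)
    return dict(tags)
```

"Show me all highlights done with this pen" is then a lookup such as `group_by_color(all_highlights)["yellow"]`, and because each highlight keeps its `source` and `page`, the results can still be followed back to their papers.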