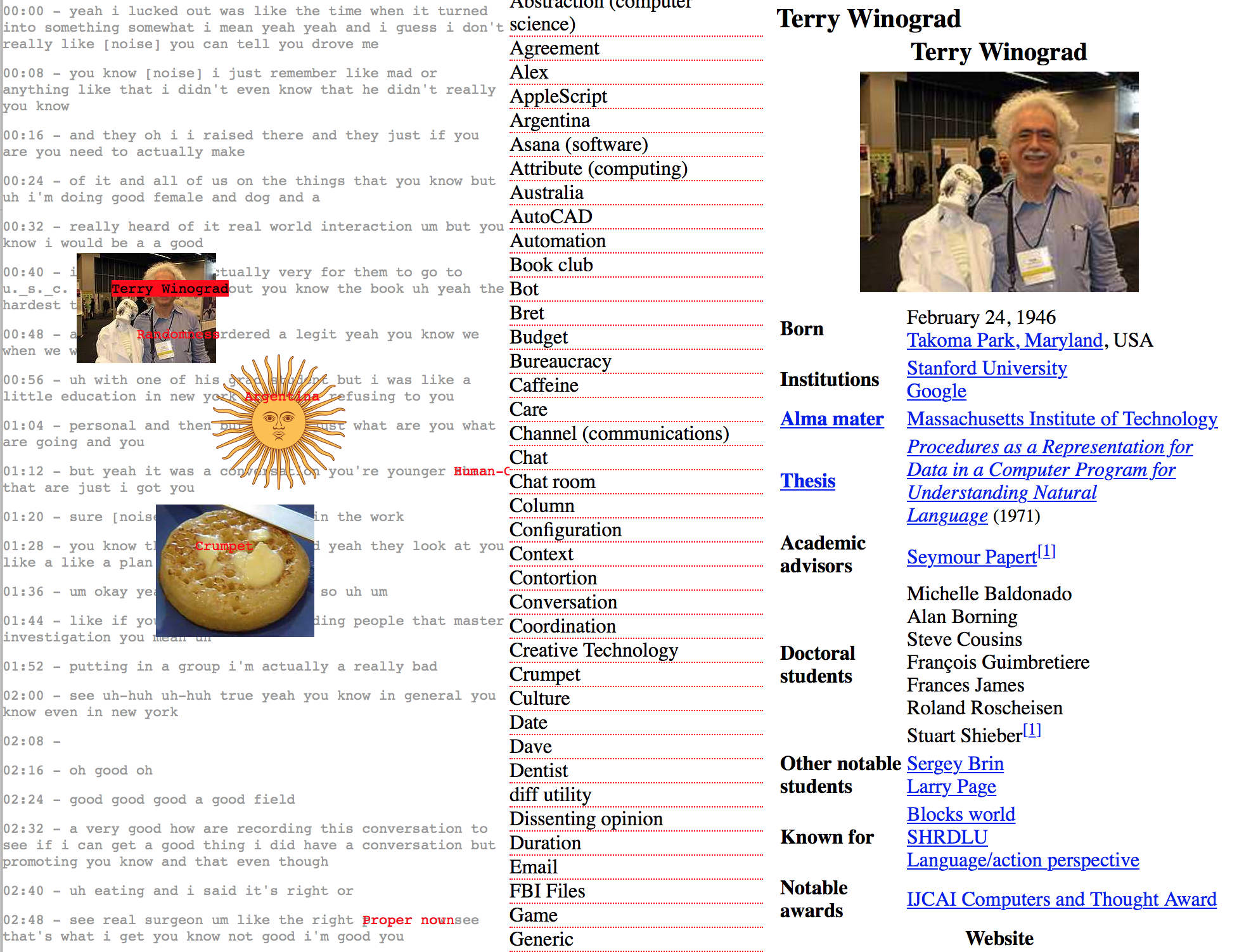

Slowly, audio transcription is becoming a bigger part of my daily life. I’ve been, with increasing regularity, recording conversations and meetings. Missing my Hyperopia Thing (I haven’t set up any thermal printers here, yet), I wrote an interface that allows for cross-referencing nouns and concepts from conversations with Wikipedia pages and external links. It’s another pass at the “correction” thread of working with imperfect transcriptions, and a first stab at “what to do” with a recording after the fact. There’s a lot that’s “wrong” with the current sketch, but here’s what I like about it:

a - this is an invisible change, but I’ve implemented multi-threaded transcription. On my MBP that makes it 8x faster, which is a huge improvement, and moves the process from ~0.8x speed (relative to original duration) to 6x (10min/hr). With my current approach, I suspect that “in the cloud” I’d be able to make a multi-computer implementation and transcribe a three-hour conversation in under a minute! The ability to use “bursts” of very large computation may become more essential when I start prototyping adaptive language modeling. For example, consider making a Wikipedia link to “Alan Kay,” the system could then download Alan’s Wikipedia page, all of the wikilinks, Alan’s books, etc, and incorporate them with added weight into the language model before immediately attempting a re-transcription.

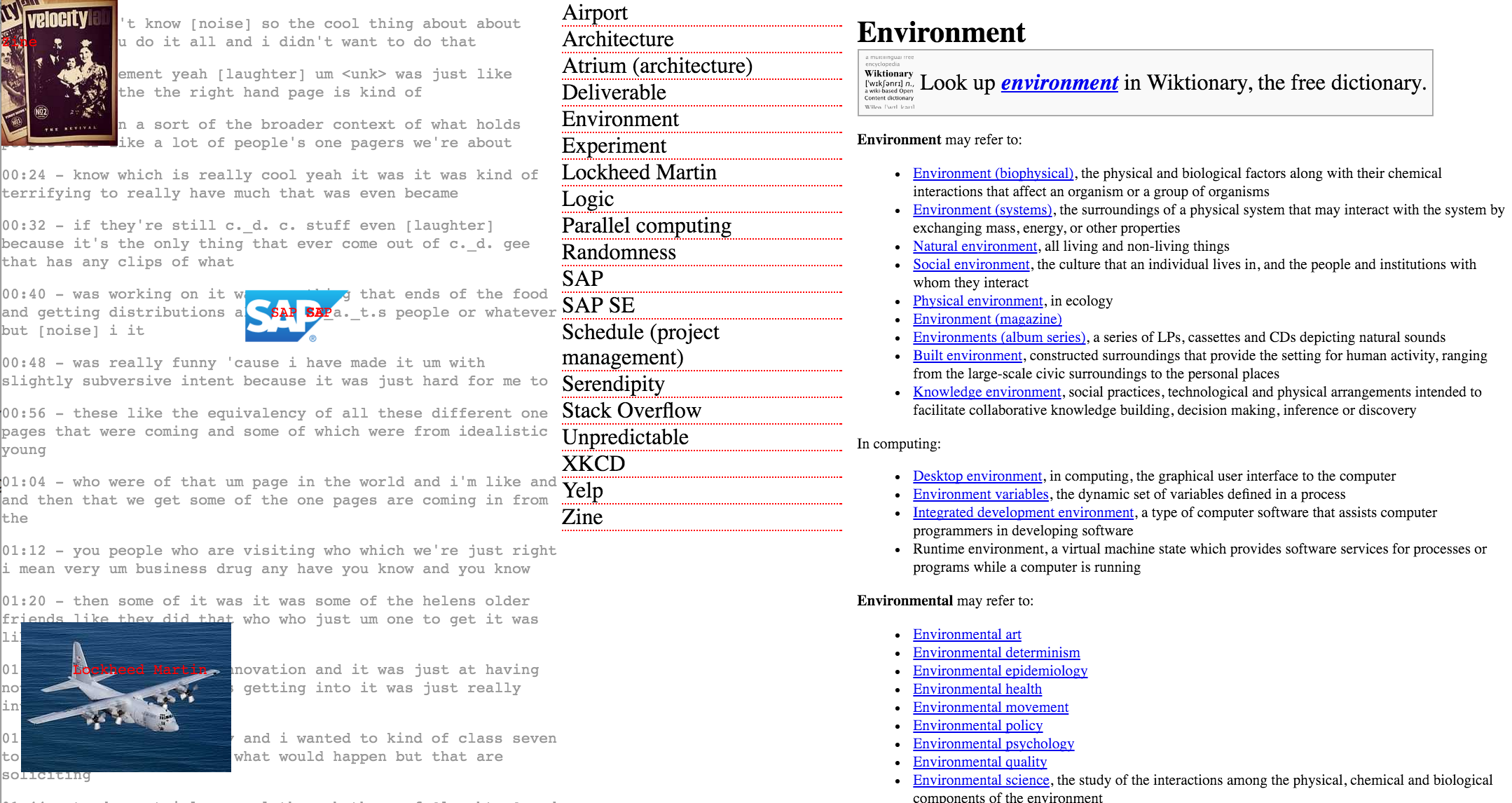

b - it’s fun to read wikipedia pages while listening to the conversation in the background. It feels “respectful” to the user’s time—conversations are slow and tedious and re-living them with full attention is painful. I would like to see “the past” become a starting point that becomes unrecognizable, “subconscious.”

c - adding background images to a transcript really add a lot. At the moment, it’s a fully manual process of clicking on a wikipedia image to “stick” it to the transcript, but I could imagine more automated approaches, and also integrations with Noun Project. In any case, it creates very distinctive “terrains” of conversation that can lead to reliable and robust recall.

d - I’ve been talking with Joe about what to do with “mentions”— you know, real life mentions. We have no idea, yet, but have started outlining an ontology of mentions. Don’t be surprised if some of you start receiving notification of “mentions” from in-person conversations.

Of all the things that I dislike about the sketch, what stands out is a pressing urgency to create a representation of audio that unlike spectograms, waveforms, pitch traces, or any other that I’ve seen, can compactly (inline with text) show speaker/accent/emphasis changes. The wall of text feels straight-up oppressive for multi-speaker recordings. Certainly I could address this with multiple microphones and channels, but I have a strong hunch that a representation that satisfied the above criteria would be deeply worthwhile.

Fig 1. “Terrain” of a conversation with Joe Edelman, Patrick Dubroy, and Jamie Brandon in Berlin. Demo: shift-click to add a wikipedia reference; click on any wp-image to “stick” it to the transcript.

Fig 2. Another conversation, with Patrick Dubroy and also Evelyn Eastmond, in Munich. (Starts off about this very interface and the relationship to the CDG All Hands Zine.)

Your correspondent,

R.M.O.