Thanks for sending this. I will get in touch with Maneesh at some point to ask his advice on this.

I think you are spot on, that all these matching algorithms come with assumptions about the images they are fed, and the assumption of a world made up of lots of small images everywhere (e.g. what our lab looks like) is not one taken by most of the research out there.

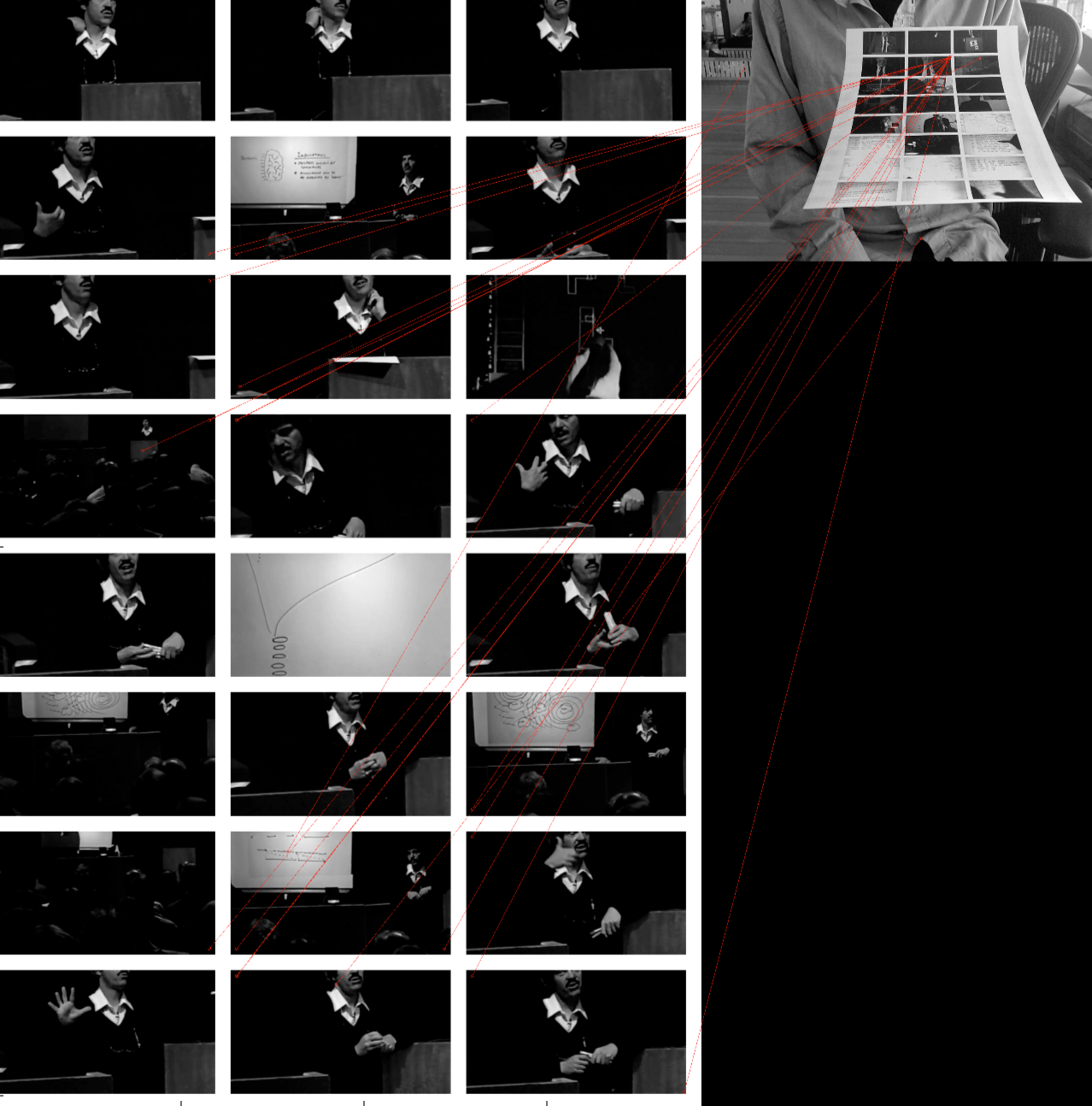

One thing I noticed just now, looking at both of our false positives, is that there seem to be a bunch of matches radiating out from a single point. If these are all coming from a single feature, we should be able to filter this out at matching time (I thought this was what SIFT's "ratio test" to determine matches better than the second-best match was doing...). Or if they are coming from multiple features that all happen to be clustered around the same point, perhaps we can penalize these pathological homographies in the RANSAC objective function.

On Wed, Mar 16, 2016 at 3:47 AM, Robert Ochshorn wrote:

Hi!On Mar 14, 2016, at 12:39 AM, Toby Schachman wrote:I have had mixed luck with this approach, as I believe Rob has as well. But today I got some potentially promising results following this tutorial on panorama image stitching with SIFT features in Python.Maybe this’ll come up at the Memex Jam (!), but I BCC’ed the research gallery on correspondence with Maneesh on this point last July:Dear Maneesh,On Jul 2, 2015, at 12:24 AM, Maneesh Agrawala wrote:That would be great! I looked through the 3d puppet code/approach and it seems like it will be a useful reference. The project is so beautiful! I see what you mean about blurring physical/virtual boundaries, and how to create open-ended mental models. Technically speaking, I had tried to do something similar when I wanted to make the book-page turning installation, but (as you would have probably anticipated before writing an implementation) SIFT homographies are completely useless on typographic elements. (Turns out the features/corners of digital typography are rather uniform—ha!)I like using SIFT for feature detection, but it's parameter settings can be a little finicky. Still I think it should work well on text elements unless there are resolution issues.I'd be interested in hearing more about what you tried with SIFT. SIFT can be a little slow, so for tracking you may need an optical flow-based technique like KLT.I dug up some of the screens from when I had tried SIFT for identification—it was actually for my “video binder” experiment, not for typography as I had mistakenly recalled. Here you can see the working homography:It seemed to work on the first try, but once I had different printouts (in the same format), it wasn’t helpful for the n-way classification because the SIFT keypoint detector was picking up so many image corners that it could always find a correspondence. Here’s it failing (with a false positive), warping all manner of identical corners to the same dark corner of the sheet I’m holding:Anyway, in this particular case, using the thumbnail grid as a fiducial and matching within each thumbnail works very well—once you can isolate the individual images it becomes trivial to classify the page—but I show this just to demonstrate that the implicit assumptions of some computer vision techniques that make perfect sense in the natural world (e.g. that corners are unique and reproducible discriminants) may not apply in a designed environment (e.g. where the harshest corners may be the least meaningful discriminants).I’m trying to figure out a generic approach to classification/tracking for very different types of objects (3-D, natural, designed, templated, non-opaque, &c) but suspect I will simply need to take a hybrid approach.Maneesh wrote back:Thanks for sending the examples. My sense is that the difference in orientations and resolutions between the images istoo large for SIFT. While SIFT is invariant to a range of transformations, that range is a bit limited -- especially when theresolution is relatively low in one of the images (i.e. the image containing the printout). In this case going with the gridstructure as you did will be more robust for sure.… anyway, my conclusion is that SIFT matching is optimized for individual images, not for designed objects, especially when high-level features (in this case the very fact of the thumbnail grid) are the same for every instance of the design.RMO