Hi,

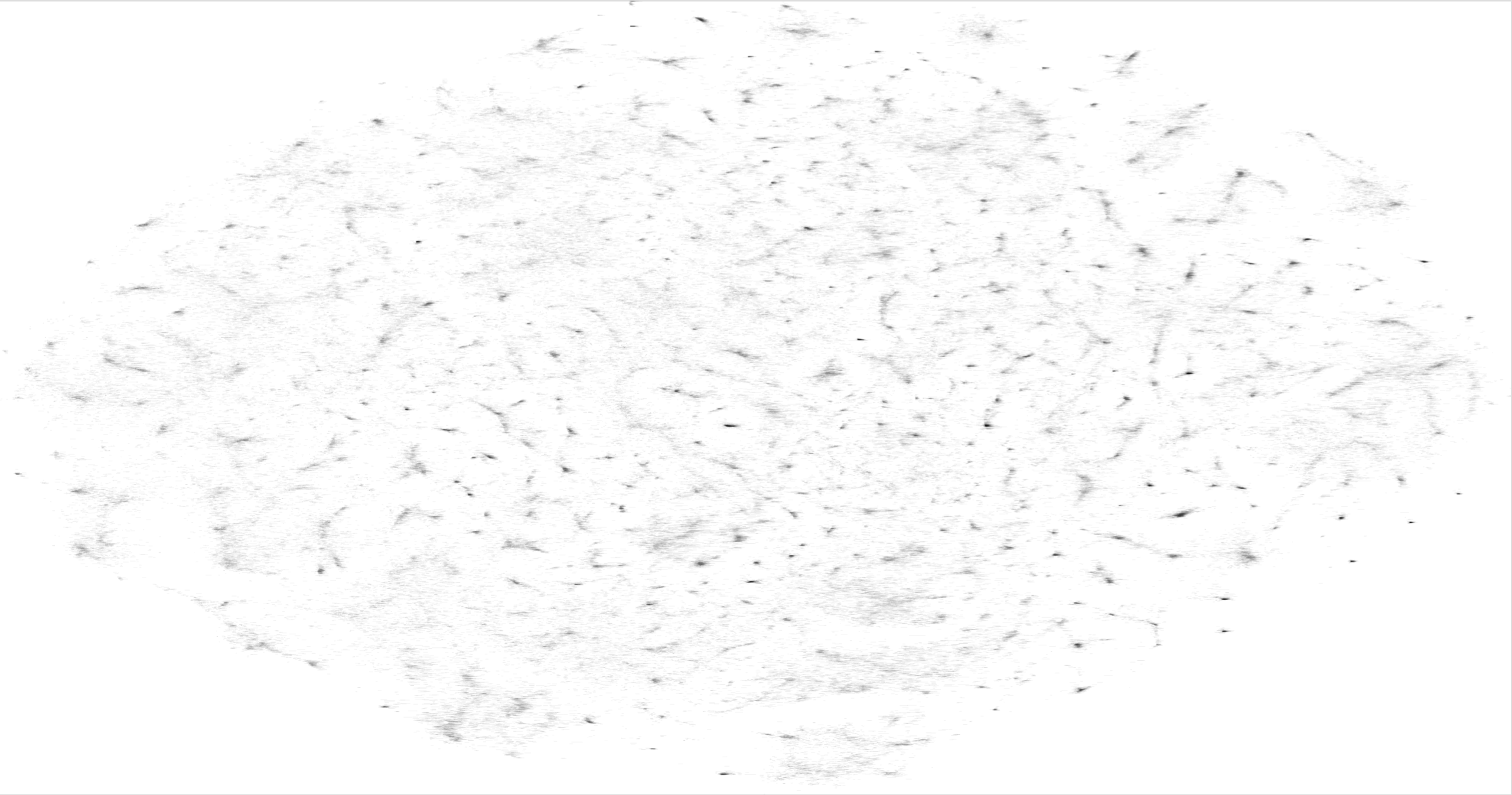

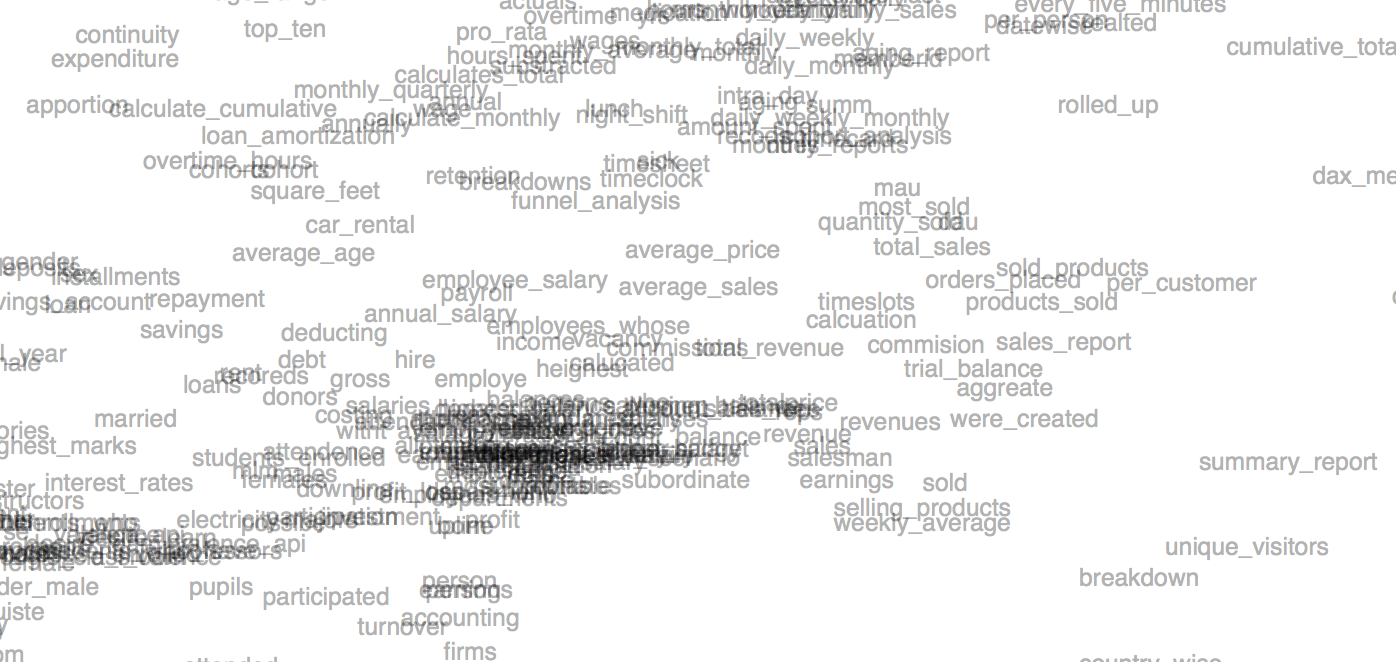

I made an enormous map of all of the things people try to do when they’re programming:

I rendered it as a PDF, thinking it might be a good thing to print out for CDG, but I had to keep making it bigger for it to be at all legible, and by now, at 240×126 inches, it’s a little ridiculous to actually imagine it in the space.

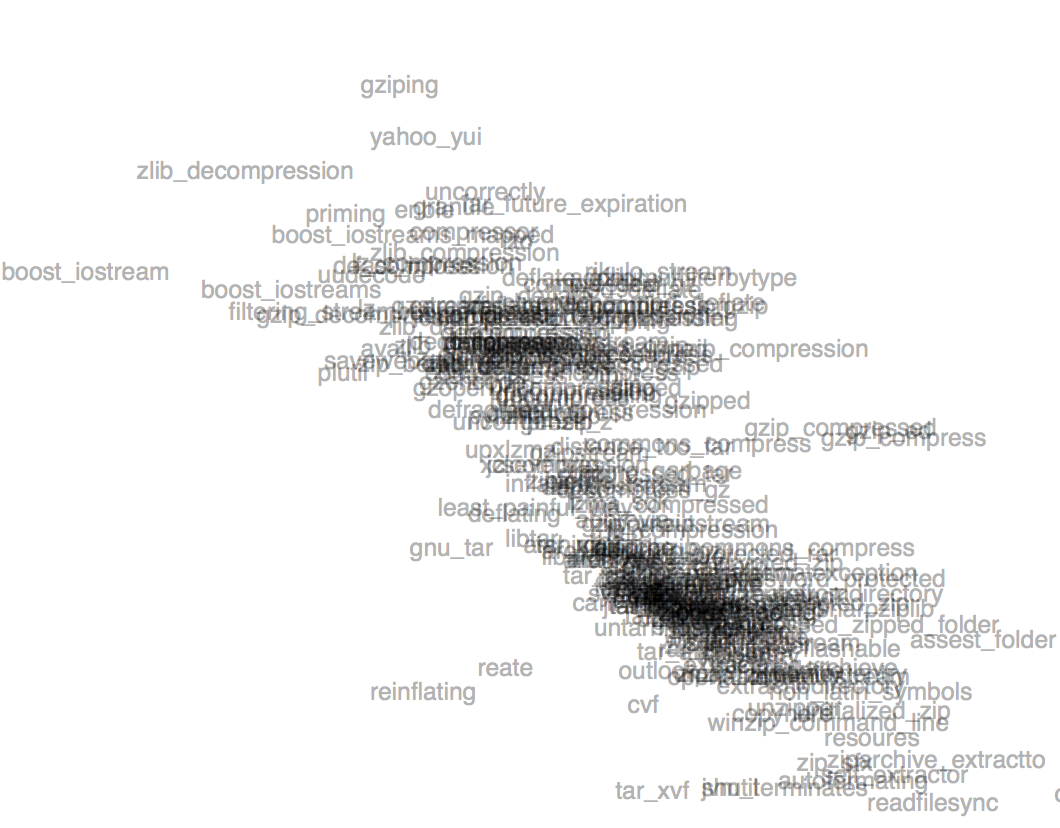

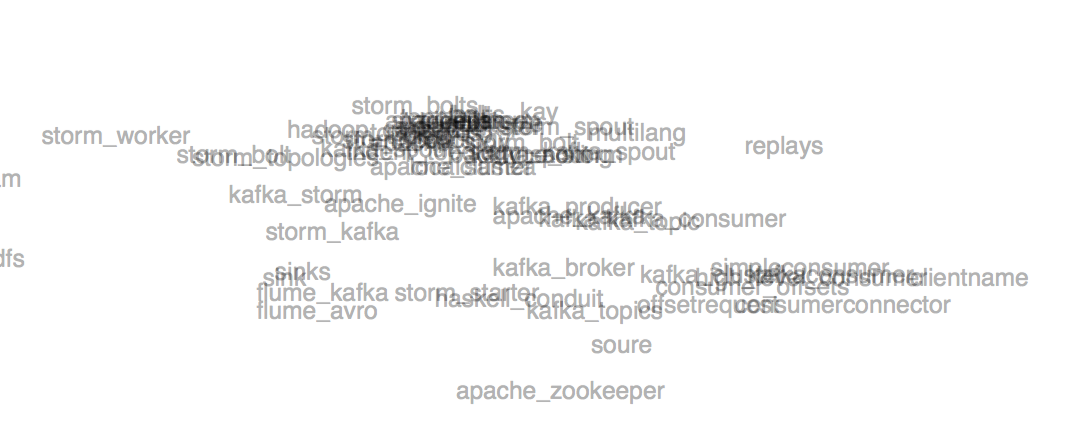

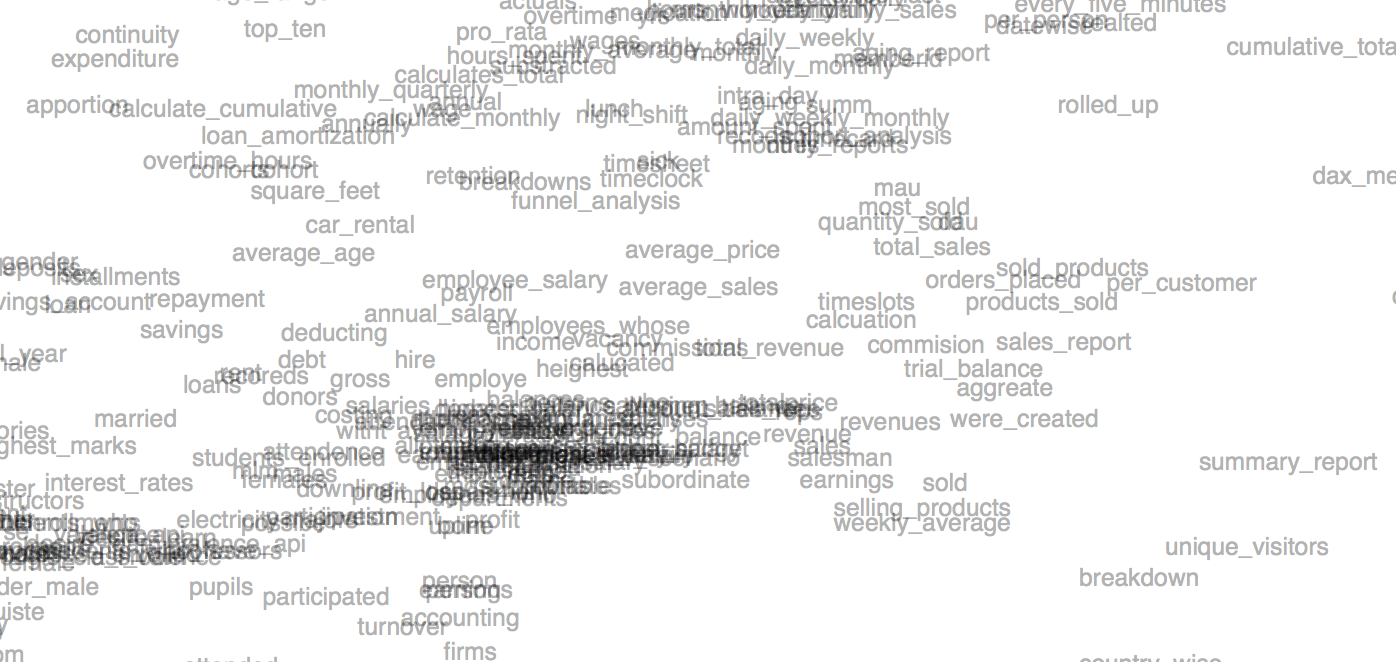

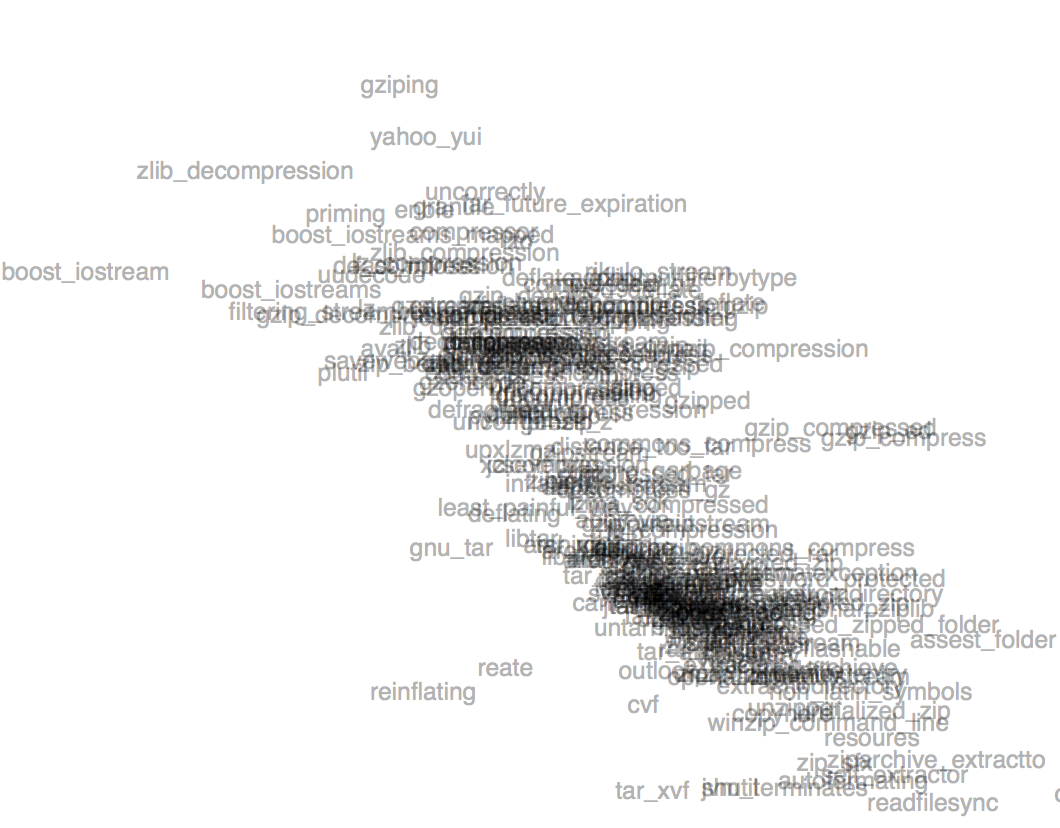

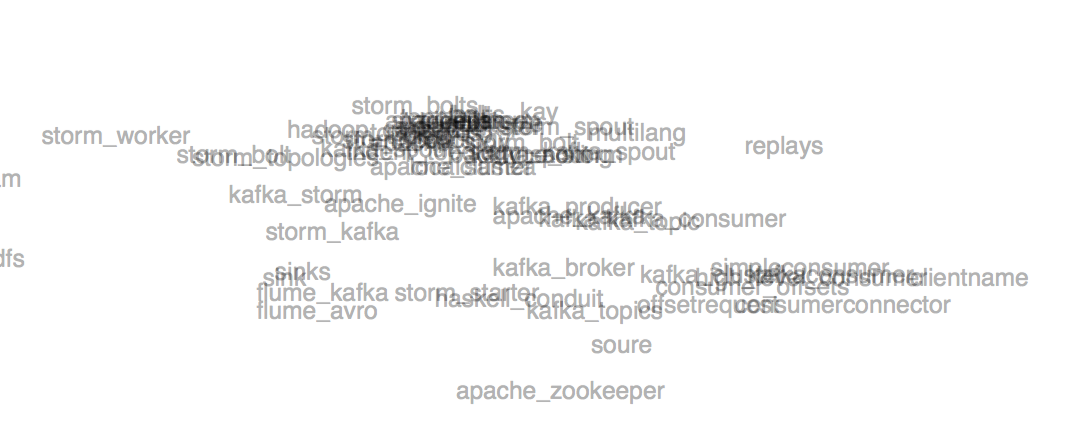

Up close, there are many clusters of familiar, as well as unfamiliar, topics:

Here’s a cluster about compressing things.

Someone must have made a piece of software called “Kafka.”

And there’s a whole region of people who seem interested in business, sales, employees, and all that.

…oh, but now Preview quit.

Anyway, it’s fun to poke around through this, but it might make your computer fan turn on.

These are all of the words from all of the titles of questions on Stack Overflow. I downloaded it and am hoping to explore some ways of searching through that site. It might have implications for programming IDEs (imagine typing a comment and automatically finding a block of code from Stack Overflow that does the thing you just described). I’m wondering if it’s possible to do search at reasonable scale (all of the posts in Stack Overflow total 39G of raw text) with an “explorable,” rather than “query/response” feel.

Technically, this is much the same as the Augmented Augmented Human Intellect prototype, below, except:

• I’m training it entirely through itself (no external data source); and

• multi-word phrases (like “sales_report”) are automatically conjoined.

Your correspondent,

R.M.O.

On Feb 8, 2016, at 9:55 PM, Robert Ochshorn wrote:On Jan 27, 2016, at 11:27 PM, Chaim Gingold wrote:Not off the top of my head from the text itself, and I don’t have time right now to dig through Augmenting Human Intellect, but I’m almost certain it’s in there.Hmmm…. not enough time to dig through Augmenting Human Intellect—since we’re talking about search, perhaps it would help if you had a search interface to AHI? (video)<Screen Shot 2016-02-08 at 9.15.25 PM.png>Obviously, text search is commonplace by now. What this does is combines the in-browser text search on Engelbart’s AHI with a bidirectional link to a semantically-spatialized mapping of all of the words in the piece.Talking with his daughter brings this point home: she points out that Doug’s tools offered more powerful ways of working, but people had to work hard to learn how. The chording keyboard is a great example. She and her siblings were drilled by their dad on the chording keyboard. And in AHI he talks about about the tools as well as craft knowledge (but I don’t remember the term he uses).Training is an essential part of Engelbart’s vision; it’s part of the H-LAM/T acronym:<Screen Shot 2016-02-08 at 9.17.51 PM.png>I think he’s right that there are some things that take a good deal of time to learn but that pay off. I’m happy, for instance, that I can type very quickly on a QWERTY keyboard. And it’s a valid point of argument that I might have been better off if I had learned a chorded keyboard. I can see benefits, as well as drawbacks.But there’s a big difference between training to learn a system, and training to understand each individual document in the system. I was referring to Engelbart’s fixation on acronyms (he calls them “abbreviations”):<Screen Shot 2016-02-08 at 9.26.00 PM.png>This is coming from a reading of Whorf which I don’t believe has held up into the 21st century:<Screen Shot 2016-02-08 at 9.33.12 PM.png>This very interface captures my suspicion of extending-language-and-thereby-extending-thought-through-abbreviation (ELATETTA): while nearly all of the words that Engelbart uses have a rich set of connotations that are captured by the scatterplot—even many of the proper nouns like Korzybski and Whorf—H/LAM-T is a string of characters without any semantic connotation. Something might, eventually, be gained by adding “H/LAM-T” into common usage, but my claim is that there is a severe cognitive cost associated with the use of characters outside of known semantic understanding. Engelbart thinks that “quick look-up” will overcome the strangeness of these characters. I disagree.<Screen Shot 2016-02-08 at 9.42.01 PM.png>I’ll include a little more about this technique, below. I haven’t really gotten it to a satisfactory state yet, but it’s starting to point some directions forward.More broadly, I’m interested in “seeing inside” of search. I’m blown away by the amount of complexity that Google is able to hide with their ten blue results. It’s an incredible achievement, but perhaps we’d expand from our own “personalized” perspective if we were able to better understand the “space” in which we are looking.Your correspondent,R.M.O.• • •I’ve been transfixed lately by the open source Google-initiated word2vec library. I trained it on the first billion characters of Wikipedia (of course), and it has “learned” to assign a 200-dimensional vector to each word contained therein. The simplest implication of this is its function as a misbehaving thesaurus: you give it a word, and it will output the “closest” other words. Some examples will help.In NYC I gave a talk at a center formerly known as Hacker School (now Recurse Center), and I started with the computational connotations (hereafter “CC”) of “hacker”:Enter word or sentence (EXIT to break): hackerWord: hacker Position in vocabulary: 6799Word Cosine distance------------------------------------------------------------------------hackers 0.641573hacking 0.634869hack 0.519038jargon 0.496075geek 0.480616japh 0.473775hacks 0.472019cyber 0.461035newbie 0.434005leetspeak 0.426675ursine 0.424415cyberpunk 0.419892securityfocus 0.419757subculture 0.419074leet 0.415718cracker 0.407582lamo 0.404582steele 0.402015developer 0.401020sussman 0.398030malicious 0.392435mitnick 0.391394newsgroup 0.391120hackerdom 0.387594intercal 0.386764grep 0.377167fannish 0.374869perl 0.373532tridgell 0.369218flamer 0.366661shrdlu 0.364778fic 0.363257demoscene 0.363033usenet 0.360683obfuscation 0.360228programmer 0.357891warez 0.357649webcomic 0.356827neuromancer 0.356489slang 0.354669Does this not explain why the organization might have decided to change their name? I’m very much familiar with the conscious attempts that humans take to shape our language. I know the dogma about how “hackers” are ethically motivated clever explorers of the digital unknown. But what does it mean to insist on a meaning that’s quantitatively different from the words it co-notes? Connotation, by the way, is a very good way to think about these results: the vectors are trained on pairs of words sharing a sentence.At a Tactical Media Files workshop that I participated in on Saturday, I attempted a similar trick, this time opening with “tactical”:Enter word or sentence (EXIT to break): tacticalWord: tactical Position in vocabulary: 6478Word Cosine distance------------------------------------------------------------------------combat 0.549381strategic 0.534384tactics 0.526885countermeasures 0.514575warheads 0.486942interceptors 0.485585strategy 0.475635reconnaissance 0.472701abm 0.467699deployed 0.467696targets 0.466220stealth 0.465901alq 0.465775firepower 0.463735blitzkrieg 0.460129amraam 0.459038infantry 0.457187logistics 0.451000cvbg 0.450855aiming 0.446344maneuver 0.445396manoeuvre 0.443323aggressor 0.441265defensive 0.440341operationally 0.438317airlifter 0.436964tactic 0.435498defense 0.433977offensives 0.433798sidewinder 0.428010fighters 0.427416icbm 0.425953deployable 0.425019weapon 0.423242fighter 0.422070grappling 0.421749icbms 0.418057interceptor 0.414731weaponry 0.412819agility 0.411716Look at how militant the vector of tactical co-notes! I turned these CC’s inward at my own work and values, and was tickled to see the very concept of “visibility” laced with the aerial perspective of modern warfare:Enter word or sentence (EXIT to break): visibilityWord: visibility Position in vocabulary: 12642Word Cosine distance------------------------------------------------------------------------altitudes 0.540396altitude 0.529357maneuverability 0.488706airspeed 0.488132survivability 0.467124speed 0.438493cockpit 0.436485sensitivity 0.428580glare 0.427989humidity 0.427278situational 0.415761fairing 0.412770subsonic 0.407297vfr 0.405353significantly 0.404337intakes 0.396664capability 0.395556manoeuvrability 0.395410leakage 0.391842level 0.390953airspeeds 0.389908acuity 0.389621transonic 0.387485intensity 0.387420quality 0.387222thermals 0.384842throughput 0.383414brightness 0.382153levels 0.381913dramatically 0.381580readability 0.378554congestion 0.378261ceilings 0.376643vigilance 0.374827performance 0.374289lethality 0.373615sunlight 0.373360greatly 0.373039agility 0.371887reliability 0.371846Note the contrast with our aural sense. To “listen” is to acknowledge the other, to learn, to ask:Enter word or sentence (EXIT to break): listenWord: listen Position in vocabulary: 7642Word Cosine distance------------------------------------------------------------------------hear 0.594319you 0.508852learn 0.507548listening 0.507300listened 0.498478realaudio 0.485728listens 0.484657sing 0.484529headphones 0.476096heard 0.472872podcast 0.466580want 0.460877your 0.460069instruct 0.459724talk 0.450591listener 0.449981tell 0.444445read 0.439060exclaiming 0.432227sampled 0.431869watching 0.431578conversation 0.428230playlist 0.421875listeners 0.419649loudly 0.417167forget 0.416092audible 0.414083remember 0.413376outtake 0.413345jingles 0.411440speak 0.409109gotta 0.405322me 0.403930say 0.399365whistles 0.398721laugh 0.397030ask 0.396598audio 0.396549pause 0.396406know 0.395876Obviously there are drawbacks, and I was much tickled to see “forgetting” so closely involved with the act of listening.The Minsky vectors were from this same technique. Minsky is dead, but “Minsky” still evokes a complex network of concepts and peers. What greater compliment for an intellectual than to enter into our language? But the query-response logic of traditional computer programs is not what I am after. For me, the point of a “symbiosis” between human and machine is help us find the questions to ask, not to presume to have an answer.