I spent some time fleshing out the “thermodynamic laboratory” concept which I introduced at the original Imagination Jam. The idea is to create a scientific workspace where you can build and investigate combinatorial systems which represent microscopic behavior.

In particular, we’re going to make a simulation of what goes on in blocks of metal, as little packets of energy bounce around from atom to atom. What happens when you put a hot piece of metal next to a cold one?

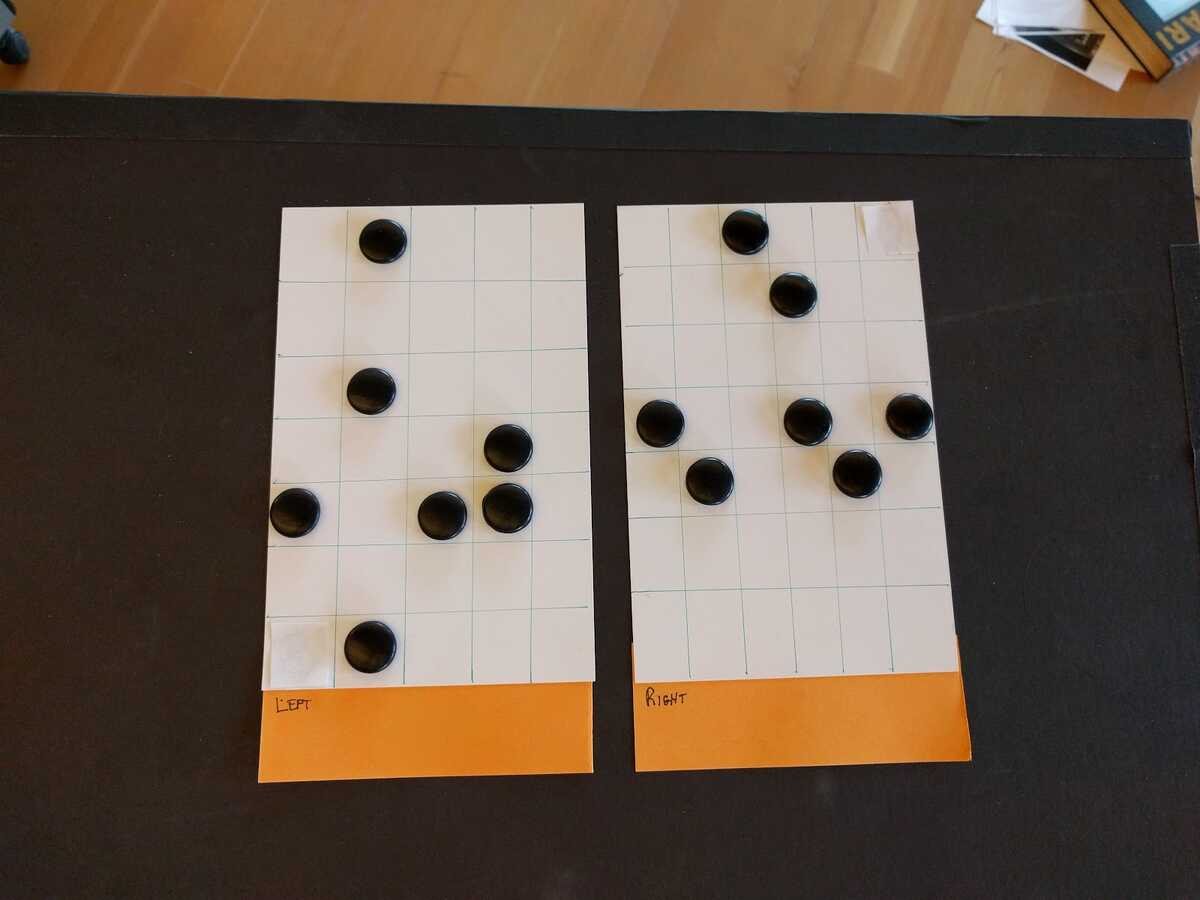

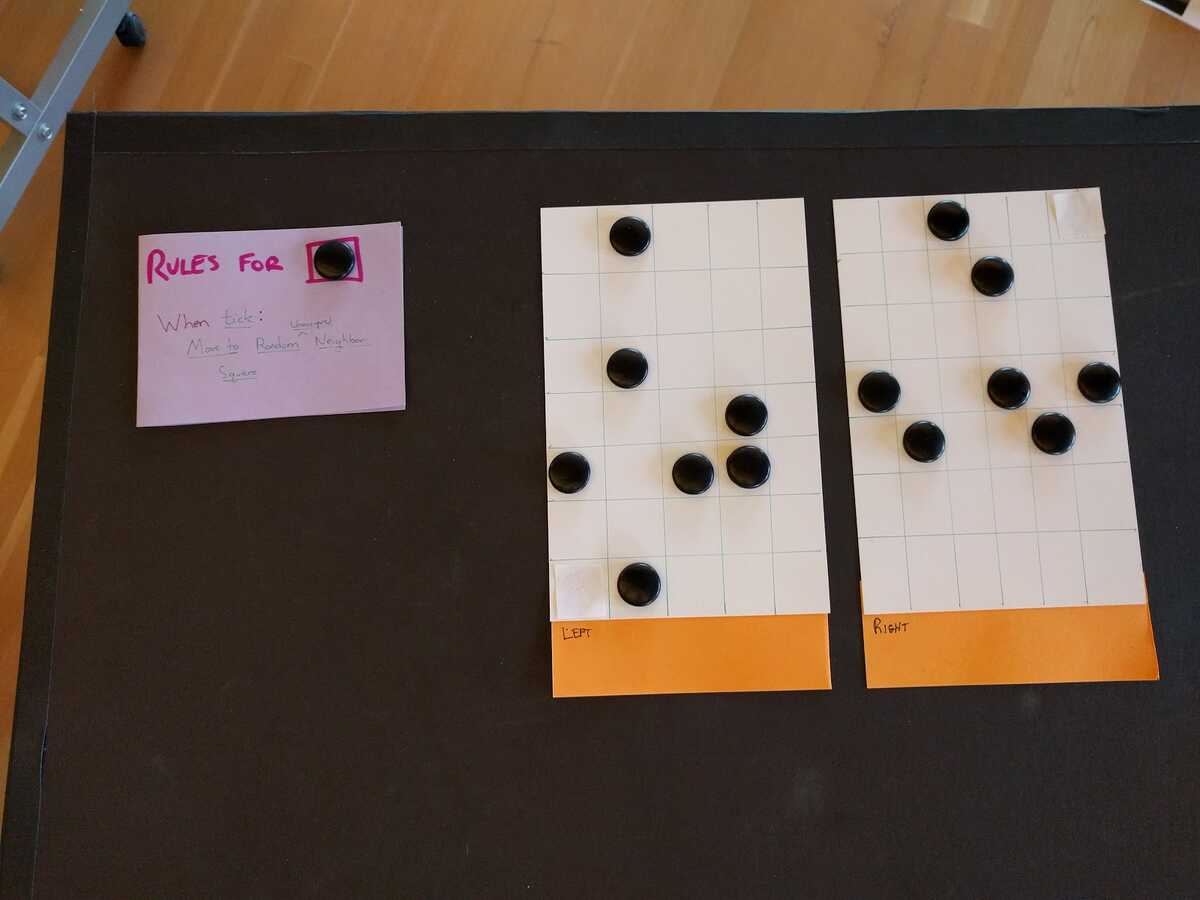

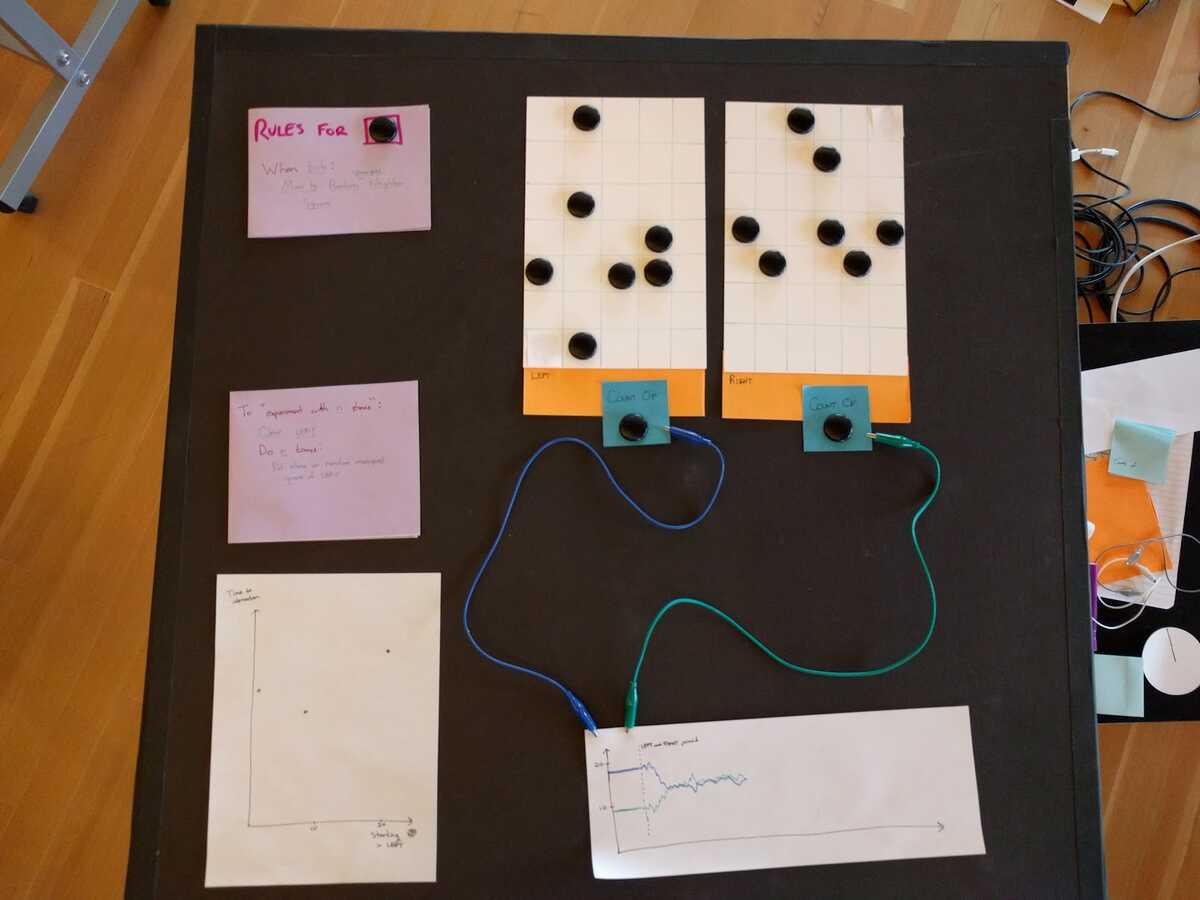

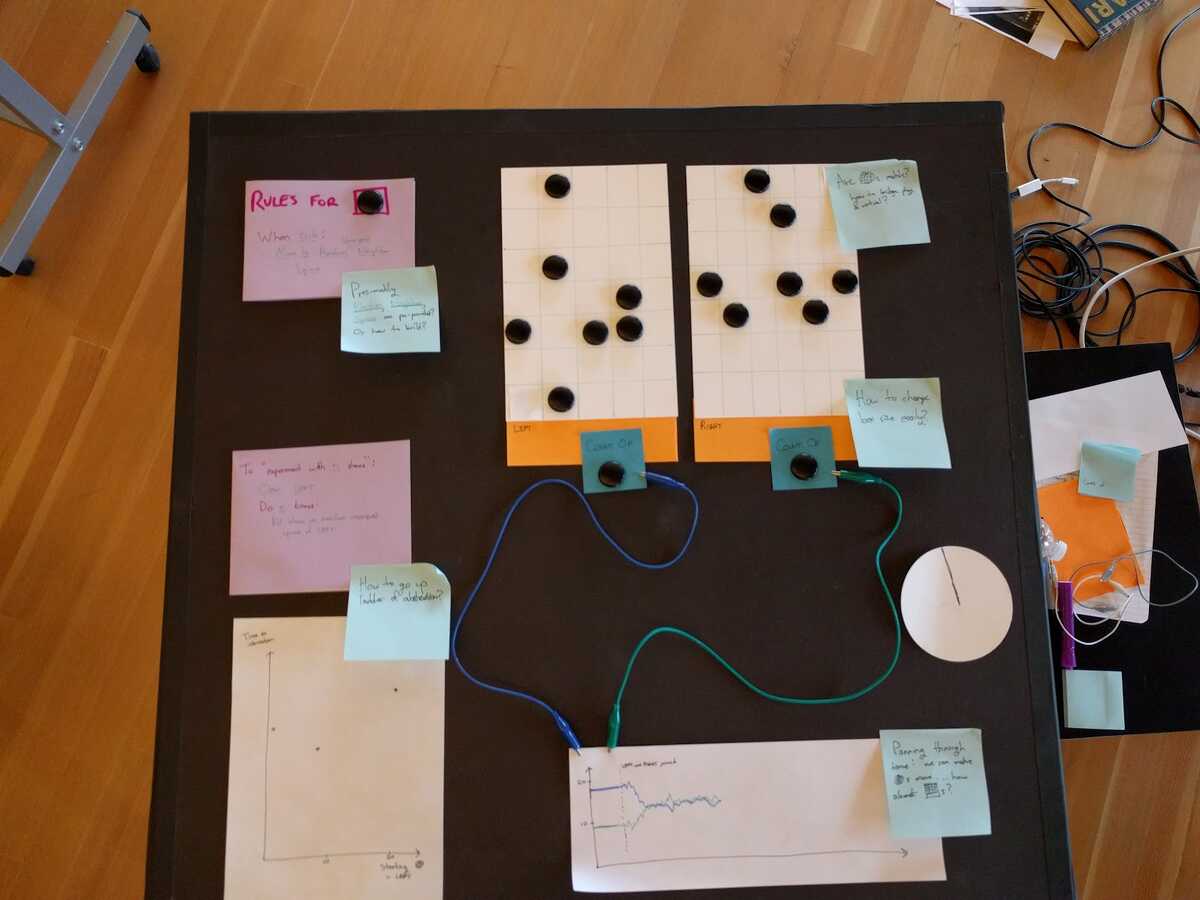

First, you bring out the “blocks of metal”, which are just sheets divided up into grids of squares. You place some stones onto the cells to represent cute little bundles of energy. The orange strips at the bottom of the blocks are “handles”, where we can give the blocks names and attach things to them without worrying about getting in the way of the stones.

Next, you add a “rules” card which describes the behaviors stones have. This card says that on each “tick”, each stone should move to a random unoccupied neighboring square. This makes sense to us as readers. For it to make sense to the computer, the system needs to know what “unoccupied”, “neighboring”, and “square” mean (etc.). I imagined that the user in our story was relying upon a pre-built toolkit which defined what it means to have a bunch of squares connected together with stones on them. (It’s interesting to consider what it would take to build this toolkit, and whether it can be made easy enough that the user in our story wouldn’t need to rely on a toolkit at all.)

For this jam, I took the liberty [Go joke] of imagining that the stones could be dynamically actuated, so the computer could move them around as it pleased. This might be unrealistic. In that case, there’s an interesting question about whether we can get the kinesthetic/intuitive benefit of setting the system up with stones, even though the platform might replace them with a projection for the simulation. I am really interested in this theme of moving back and forth between physical and virtual representations (which also arose in Paula’s presentation). FWIW, even if we had moving stones, we would have to revert to a virtual representation to scale the blocks up to 100x100, 1000x1000, etc. Perhaps we can still maintain some connection to the physical even then, e.g., by pouring sand instead of placing stones.

Another question (shared by other people’s projects) was how the “behavior card” could be written in the first place. There are lots of good directions to go with this, but one thought I liked was that you could “act out” the sequence of steps with physical tokens in order to write the script. I imagined this could involve the system soliciting disambiguations: you place a stone on the board, and the system asks, “Do you mean, place a stone at (3,2)? Or place a stone on a random square? Or…” Bret seemed to prefer a more deliberate process where you “roll dice” to generate random numbers and then map those to the stone’s intended position.

People also brought up questions about how time works in the simulation. I imagined the stones moving one at a time; others imagined them moving simultaneously. Toby proposed that the simulation could be made more explicit by calling out the two-step process of “pick a random stone, then move it randomly”. Etc.
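To make the rules card a bit more concrete, here is a minimal Python sketch of one way a toolkit might interpret it. It assumes “neighboring” means the four orthogonally adjacent squares and represents a block as a set of occupied `(x, y)` cells; the names `neighbors`, `move_randomly`, `tick`, and `step` are made up for illustration. `tick` is the one-at-a-time reading of the rule, and `step` is Toby’s two-step “pick a random stone, then move it randomly” version.

```python
import random

def neighbors(cell, width, height):
    """Orthogonally adjacent squares of `cell` that lie on the width x height grid."""
    x, y = cell
    candidates = [(x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)]
    return [(cx, cy) for cx, cy in candidates if 0 <= cx < width and 0 <= cy < height]

def move_randomly(stone, stones, width, height, rng):
    """Move one stone to a random unoccupied neighbor; it stays put if boxed in."""
    free = [n for n in neighbors(stone, width, height) if n not in stones]
    if free:
        stones.remove(stone)
        stones.add(rng.choice(free))

def tick(stones, width, height, rng=random):
    """Sequential reading: every stone moves once, one at a time, in random order.

    `stones` is a set of occupied cells and is updated in place.
    """
    order = list(stones)
    rng.shuffle(order)
    for stone in order:
        move_randomly(stone, stones, width, height, rng)
    return stones

def step(stones, width, height, rng=random):
    """Two-step reading: pick a random stone, then move it randomly (needs >= 1 stone)."""
    stone = rng.choice(sorted(stones))
    move_randomly(stone, stones, width, height, rng)
    return stones
```

One consequence of the sequential reading: within a single `tick`, a stone can slide into a square that another stone vacated moments earlier. A simultaneous reading would need some rule for settling two stones that want the same square, which is part of why the question of how time works matters.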

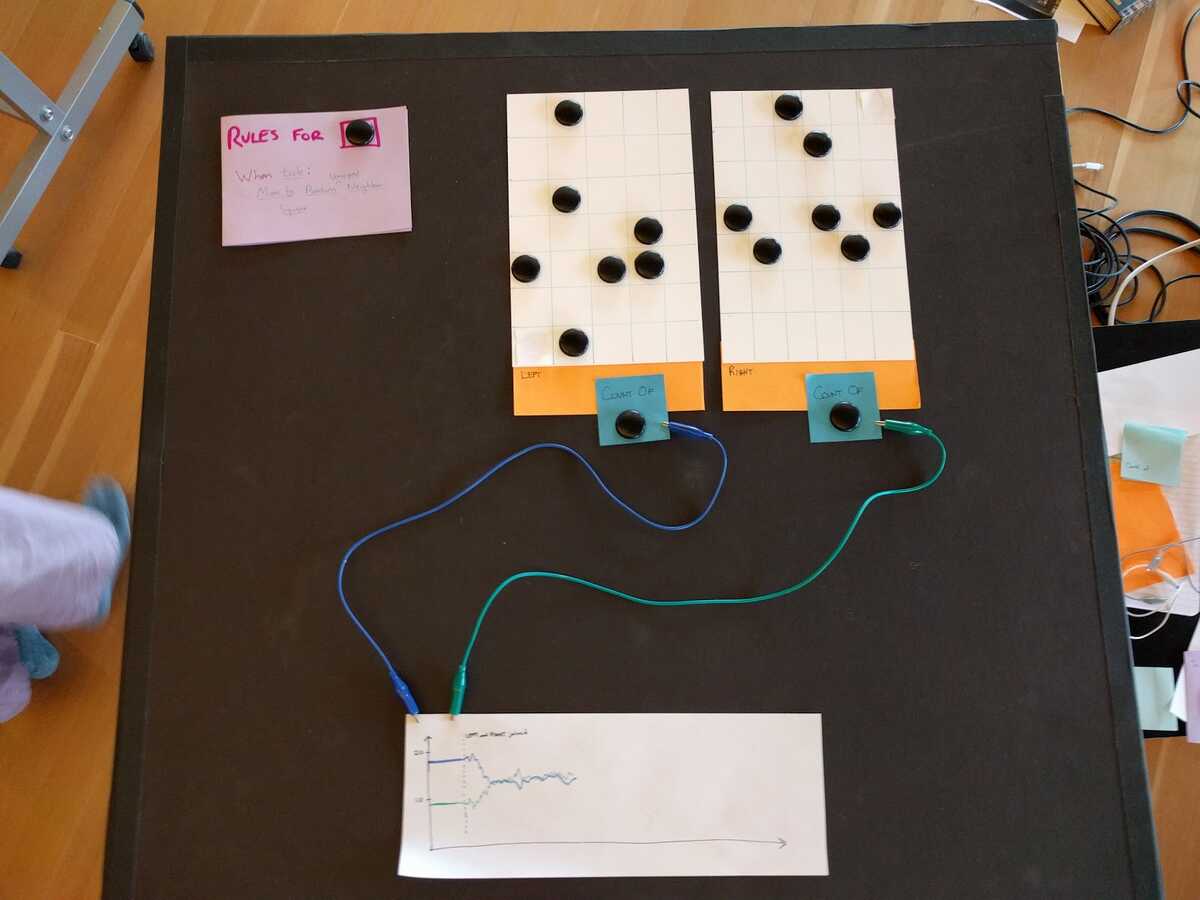

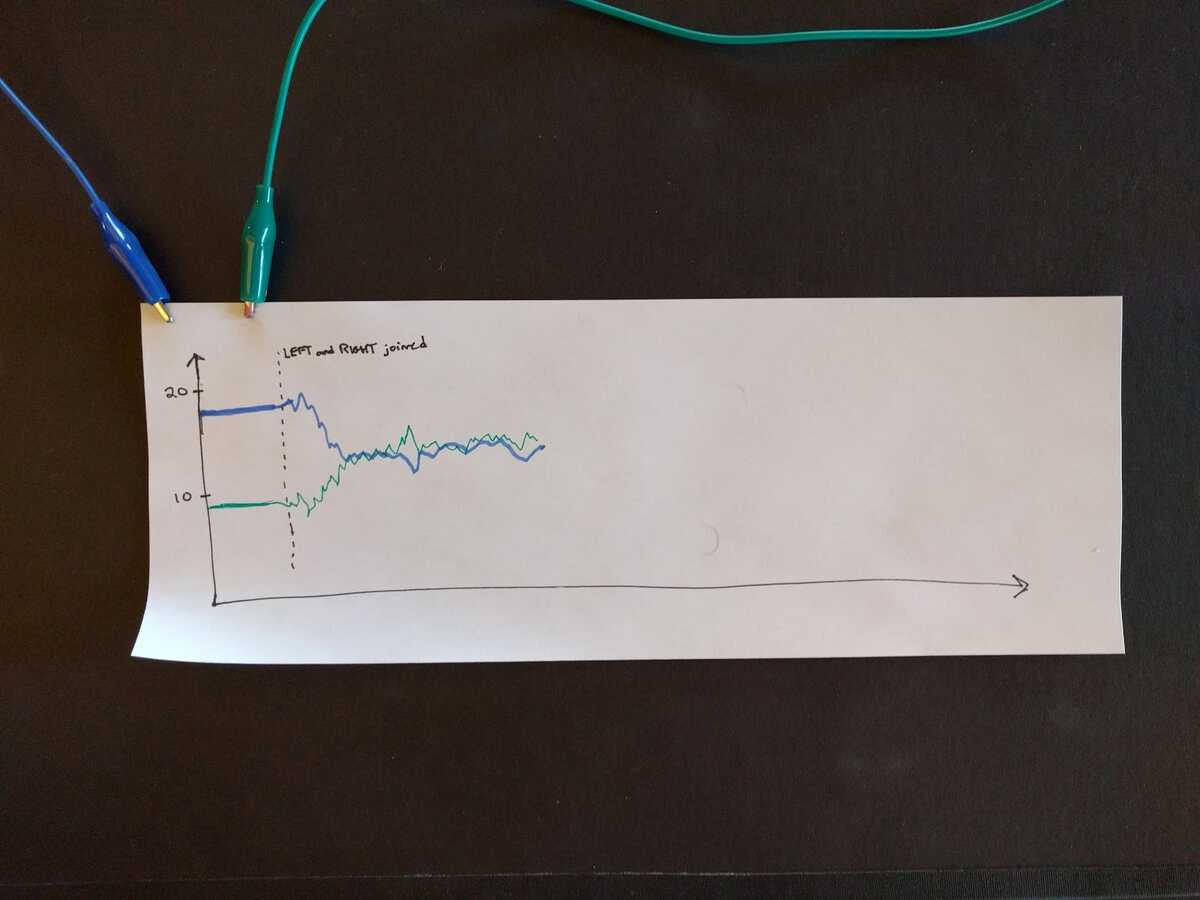

At this point, our user could run the simulation and watch the stones zoom about. But they probably want to see what happens over time. So they put “COUNT OF [STONE]” instruments on the two handles and then alligator-clip them to a charting card, which begins recording (color-coded) plots of the counts over time. (We can also tap / laser through time using this chart.) As long as the blocks are separated, the plot will show flat lines. But once the blocks are placed next to each other (not shown), stones can move across the boundary and the plot will show more interesting behavior. Although the counts move up and down unpredictably, they seem to drift towards one another until they are just buzzing around in the same spot. The two blocks are exchanging energy until their temperatures are equal! [Note: This is sort of BS. Imagine the two blocks were different sizes: the counts would settle at different levels, roughly in proportion to each block’s number of squares.]

We might want to know how long this process takes. A concrete version of this question is “How long does it take for the curves to intersect?” We might use an interaction like Demo 4 of *Media for Thinking the Unthinkable* to mark the intersection on this plot so we don’t need to feel it out by hand. Then we can start climbing the ladder of abstraction. For instance: how does changing the initial energy of the left block affect the time to equilibrium?

This is where things start getting sketchy. I express the concept of “changing the initial energy of the left block” as a parameterized procedure, added in the bottom-left of the table. This procedure is basically an experimental protocol: the sort a grad student would follow by hand if we were in a real laboratory. But we’re in a magic computer laboratory, so the computer can follow the procedure for us. Then we add a plot to store the results of a bunch of runs of this procedure. The interactions involved in this are yet to be determined. It feels like running the experiment over and over with different parameter values shouldn’t lengthen a single global timeline; instead, it should introduce a bunch of parallel timelines that can be flipped through and navigated at will. Bret suggested reifying this by making the time chart into a stack of time charts. This feels totally right to me, and it suggests that there is a lot of thought left to do around “scoping” time.
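As a minimal sketch of the protocol, assuming the same rules as before (four-neighbor moves, one stone at a time) and a right block that always starts empty, here is the experiment written as a function of the left block’s initial energy. Each run is kept as its own `Timeline` instead of being appended to one global history, which is one possible way to model Bret’s “stack of time charts”. `simulate`, `sweep`, `Timeline`, and `time_to_equilibrium` are illustrative names, and “equilibrium” here just means the first tick at which the left count is no longer above the right.

```python
import random
from dataclasses import dataclass

@dataclass
class Timeline:
    """One run of the protocol: its parameter, its seed, and (left, right) counts per tick."""
    left_energy: int
    seed: int
    counts: list

def counts_of(stones, block_width):
    """(left count, right count); columns < block_width belong to the left block."""
    left = sum(1 for x, _ in stones if x < block_width)
    return (left, len(stones) - left)

def simulate(left_energy, block_width, height, ticks, seed):
    """One run: `left_energy` stones scattered over the left block, right block empty."""
    rng = random.Random(seed)
    cells = [(x, y) for x in range(block_width) for y in range(height)]
    stones = set(rng.sample(cells, left_energy))  # requires left_energy <= block_width * height
    counts = [counts_of(stones, block_width)]
    for _ in range(ticks):
        order = list(stones)
        rng.shuffle(order)
        for x, y in order:
            free = [(nx, ny) for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1))
                    if 0 <= nx < 2 * block_width and 0 <= ny < height and (nx, ny) not in stones]
            if free:
                stones.remove((x, y))
                stones.add(rng.choice(free))
        counts.append(counts_of(stones, block_width))
    return counts

def time_to_equilibrium(counts):
    """First tick at which the left count is no longer above the right, else None."""
    for t, (left, right) in enumerate(counts):
        if left <= right:
            return t
    return None

def sweep(left_energies, trials, block_width=5, height=5, ticks=500):
    """The protocol: for each parameter value, run `trials` seeded runs.

    Every run becomes a separate Timeline in the returned stack.
    """
    stack = []
    for energy in left_energies:
        for seed in range(trials):
            stack.append(Timeline(energy, seed, simulate(energy, block_width, height, ticks, seed)))
    return stack
```

The results plot would then be something like `{e: [time_to_equilibrium(tl.counts) for tl in stack if tl.left_energy == e] for e in energies}`, while each `Timeline` stays available to flip through on its own.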

For posterity, here are the Post-its I left for myself while working on the demo. I also included the “clock” which I added early on, thinking to myself, “Man, it’s gonna be really important to reify time one of these days,” and then subsequently ignored.

Thanks everyone; this was great!

For our writeup of the Programming Imagination Jam, we decided that we would each document our own project. Here's mine!

For the programming jam, I mocked up a video editor using the video coasters I had previously made.

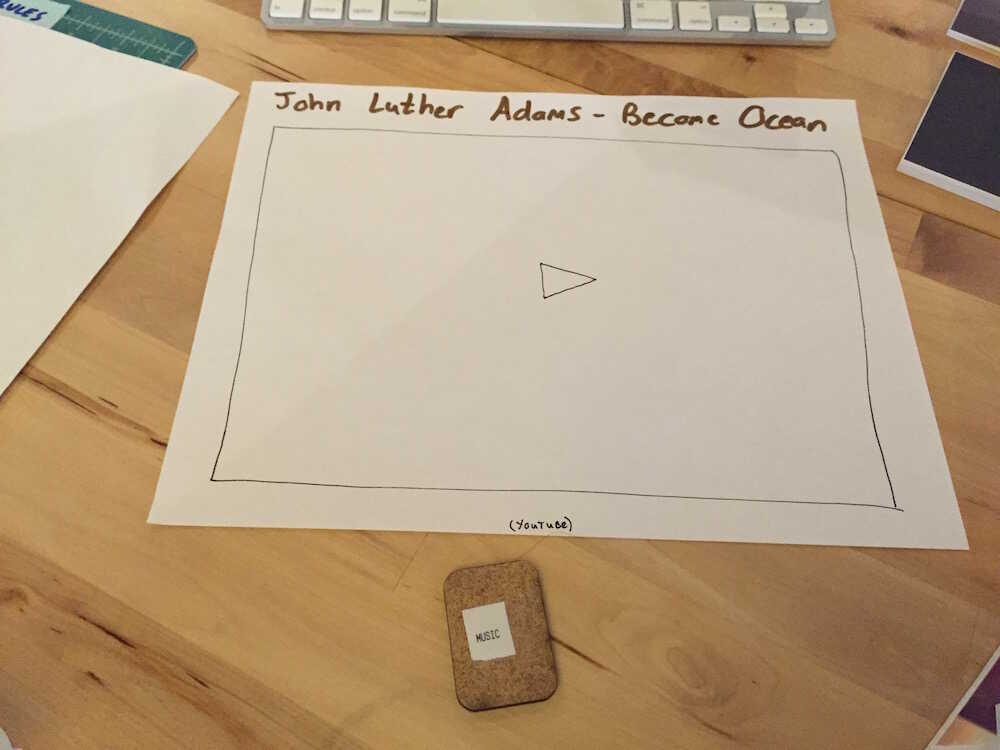

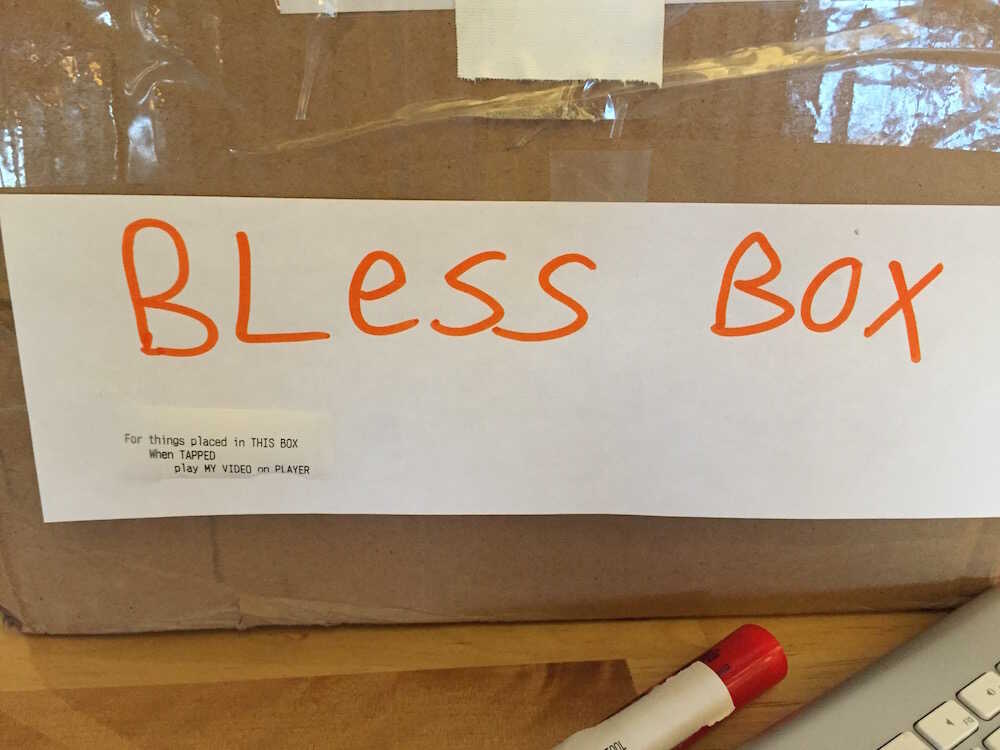

As before, you tap a coaster to play its video on the large piece of posterboard. The music is reified as a "browser tab" that plays a YouTube video. The "music" token is a scrubber: moving it along an axis changes the playback position of the audio. I imagine I would use this interface two-handed (tapping and scrubbing).

Responding to the question of how I would program all 110 coasters, I thought maybe the box I was using to store them could also "bless" each coaster with a behavior when it was taken out:
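One way to read the "blessing" box, sketched in Python with stand-in classes: the box holds a single behavior and attaches it to whichever coaster leaves the box, so none of the 110 coasters has to be programmed individually. `Player`, `Coaster`, and `BlessingBox` are all hypothetical, and a real setup would detect taps and removals physically rather than through method calls.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

@dataclass
class Player:
    """Stand-in for the large posterboard video player."""
    now_playing: Optional[str] = None

    def play(self, video: str) -> None:
        self.now_playing = video

@dataclass
class Coaster:
    video: str
    on_tap: Optional[Callable[["Coaster"], None]] = None  # unset until blessed

    def tap(self) -> None:
        if self.on_tap is not None:
            self.on_tap(self)

@dataclass
class BlessingBox:
    """A storage box that attaches its behavior to each coaster taken out of it."""
    behavior: Callable[[Coaster], None]
    contents: list = field(default_factory=list)

    def take_out(self, coaster: Coaster) -> Coaster:
        self.contents.remove(coaster)
        coaster.on_tap = self.behavior  # the "blessing"
        return coaster

player = Player()
box = BlessingBox(behavior=lambda c: player.play(c.video),
                  contents=[Coaster(f"clip-{i:03}.mp4") for i in range(110)])
coaster = box.take_out(box.contents[0])
coaster.tap()
print(player.now_playing)  # clip-000.mp4
```

A nice side effect of this design is that changing what every coaster does only means changing the box's one behavior; coasters still in the box do nothing when tapped.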

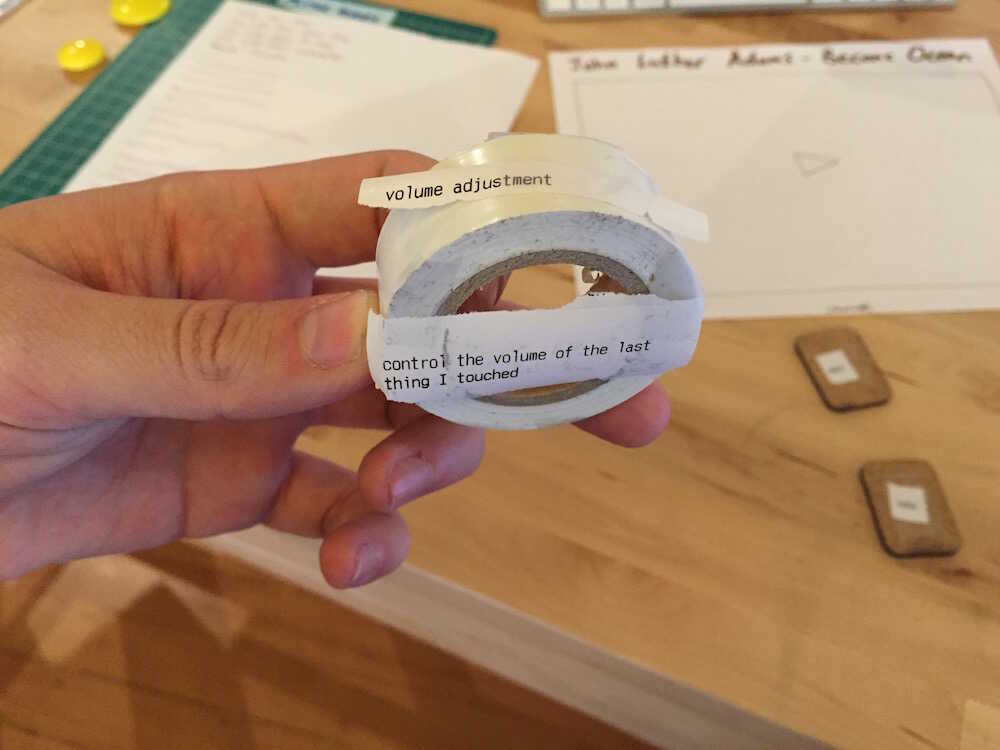

I also used a tape roll for volume adjustment, with the code on the bottom. When the roll is rotated, the volume of the main video player changes. I don't know whether "last thing I touched" is an interesting primitive or not.
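The music scrubber and the tape-roll volume control are both mappings from a physical quantity to a playback parameter. Here is a rough sketch, assuming the scrubber maps linearly onto the track, one full turn of the tape roll spans the whole volume range, and the roll adjusts whatever player was touched most recently (one possible use of a "last thing I touched" primitive). All names and constants here are made up for illustration.

```python
import math

def clamp(value, low, high):
    return max(low, min(high, value))

def scrub_position(token_x, axis_start, axis_end, duration_s):
    """Map the music token's position along its axis linearly onto the track (seconds)."""
    fraction = (token_x - axis_start) / (axis_end - axis_start)
    return clamp(fraction, 0.0, 1.0) * duration_s

class VolumeKnob:
    """A tape roll used as a relative volume knob for the last-touched player.

    Assumption: one full turn (2*pi radians) covers the whole 0..1 volume range.
    Players are plain dicts with a "volume" key.
    """

    def __init__(self):
        self.last_touched = None
        self.last_angle = None

    def touch(self, player):
        self.last_touched = player

    def rotate_to(self, angle):
        if self.last_angle is not None and self.last_touched is not None:
            delta = angle - self.last_angle
            # Wrap into [-pi, pi) so passing through 0 / 2*pi does not jump the volume.
            delta = (delta + math.pi) % (2 * math.pi) - math.pi
            player = self.last_touched
            player["volume"] = clamp(player["volume"] + delta / (2 * math.pi), 0.0, 1.0)
        self.last_angle = angle
```

Using relative rotation (rather than absolute angle) means the roll can be picked up in any orientation and still nudge the volume smoothly from wherever it currently is.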

It seemed important that this stuff was made out of random objects I had lying around.

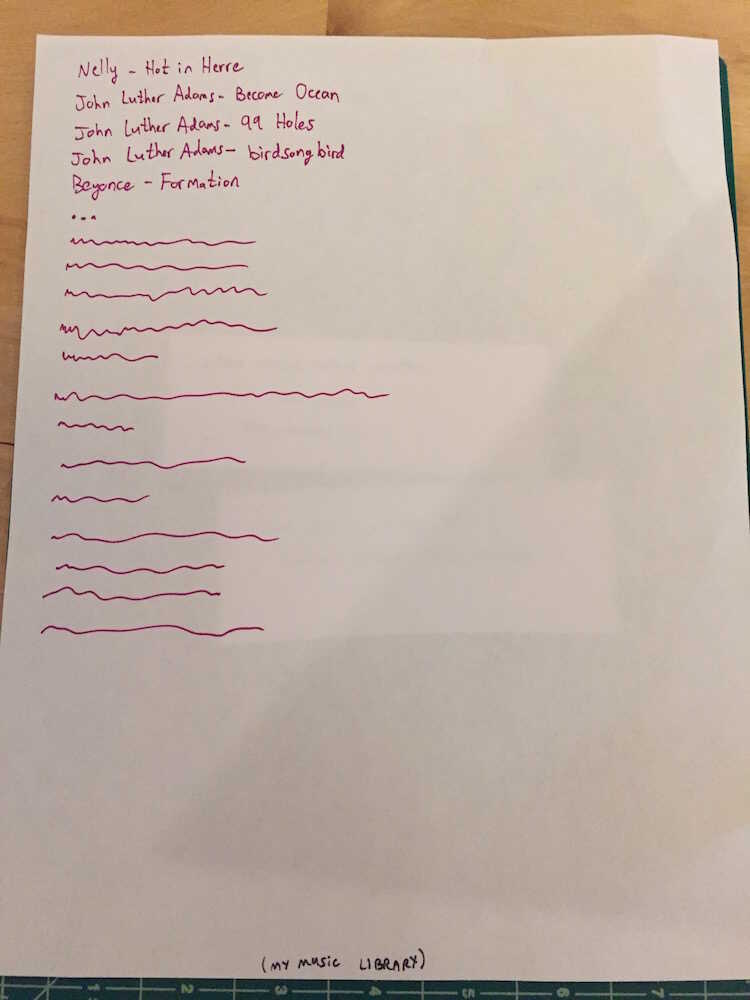

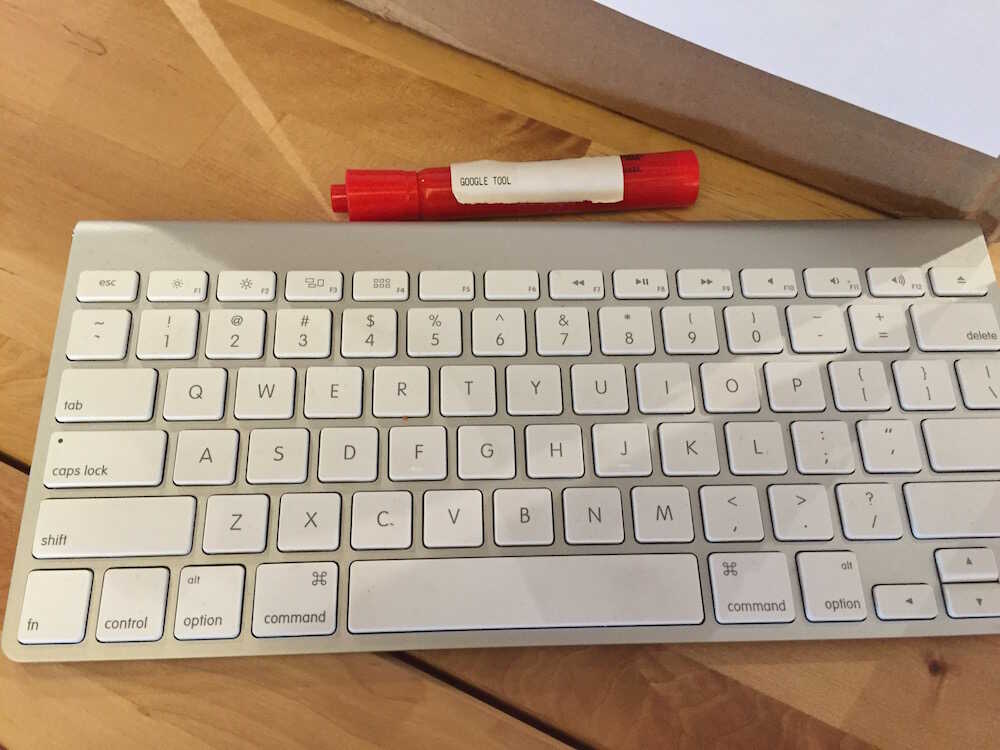

It also seemed important that I be able to find and test out different music. This component could be an iPad-like interface to my personal music collection, where I could choose songs and try them out. Or I could turn a blank piece of paper into a browser tab with the GOOGLE TOOL and a keyboard for searching for and finding YouTube videos of music.