On Jan 27, 2016, at 11:27 PM, Chaim Gingold wrote:Not off the top of my head from the text itself, and I don’t have time right now to dig through Augmenting Human Intellect, but I’m almost certain it’s in there.

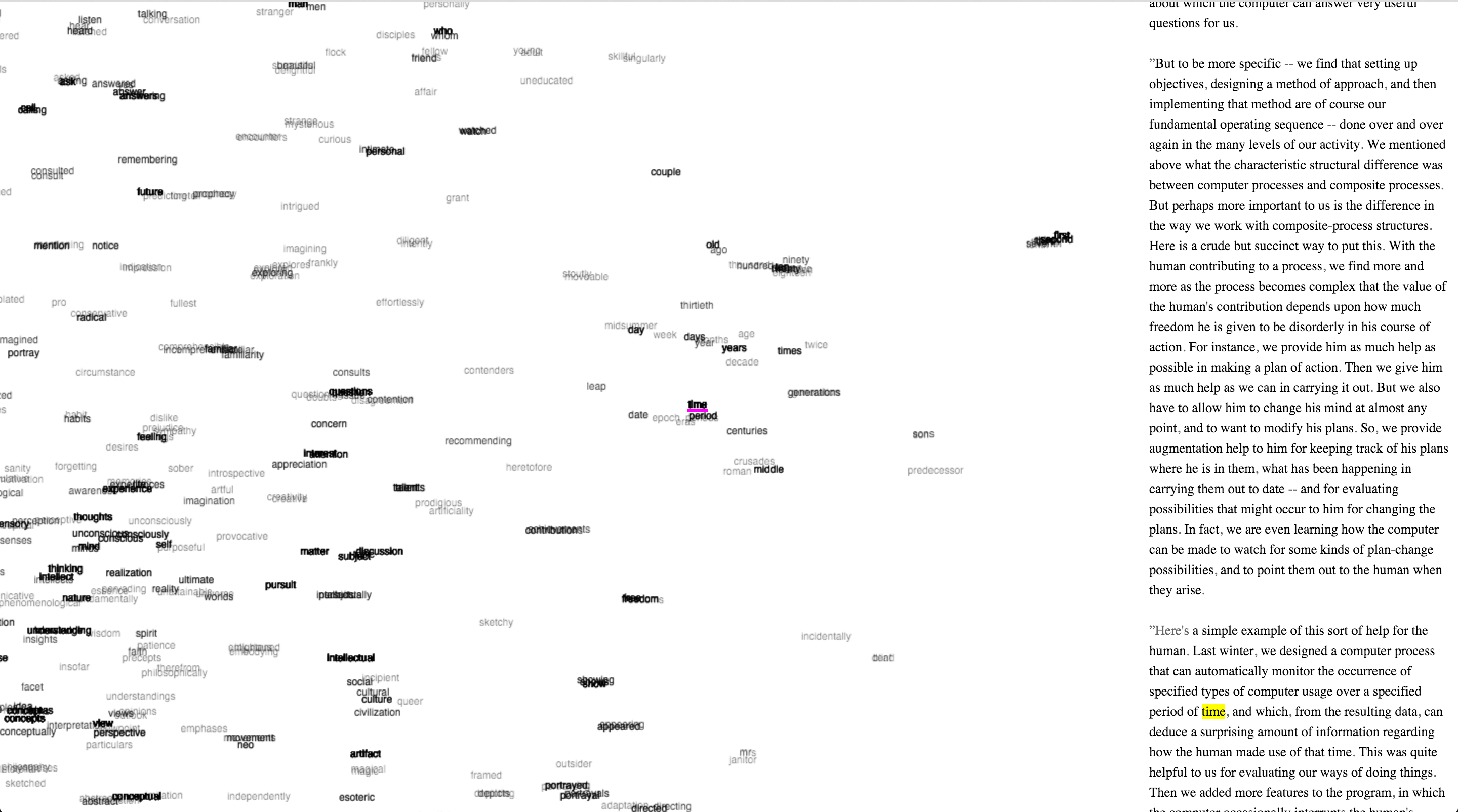

Hmmm…. not enough time to dig through Augmenting Human Intellect—since we’re talking about search, perhaps it would help if you had a search interface to AHI? (video)

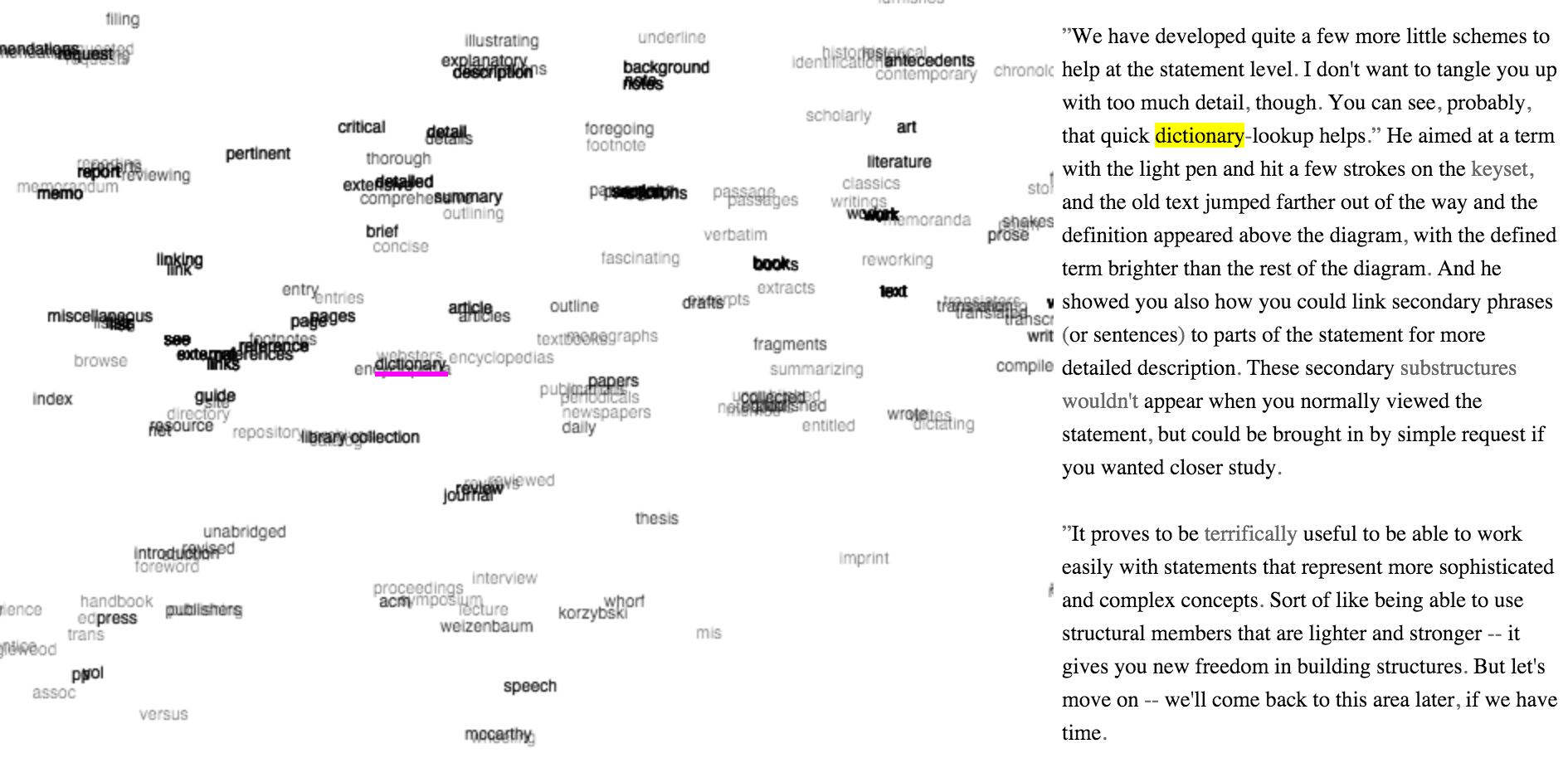

Obviously, text search is commonplace by now. What this does is combines the in-browser text search on Engelbart’s AHI with a bidirectional link to a semantically-spatialized mapping of all of the words in the piece.

Talking with his daughter brings this point home: she points out that Doug’s tools offered more powerful ways of working, but people had to work hard to learn how. The chording keyboard is a great example. She and her siblings were drilled by their dad on the chording keyboard. And in AHI he talks about about the tools as well as craft knowledge (but I don’t remember the term he uses).

Training is an essential part of Engelbart’s vision; it’s part of the H-LAM/T acronym:

I think he’s right that there are some things that take a good deal of time to learn but that pay off. I’m happy, for instance, that I can type very quickly on a QWERTY keyboard. And it’s a valid point of argument that I might have been better off if I had learned a chorded keyboard. I can see benefits, as well as drawbacks.

But there’s a big difference between training to learn a system, and training to understand each individual document in the system. I was referring to Engelbart’s fixation on acronyms (he calls them “abbreviations”):

This is coming from a reading of Whorf which I don’t believe has held up into the 21st century:

This very interface captures my suspicion of extending-language-and-thereby-extending-thought-through-abbreviation (ELATETTA): while nearly all of the words that Engelbart uses have a rich set of connotations that are captured by the scatterplot—even many of the proper nouns like Korzybski and Whorf—H/LAM-T is a string of characters without any semantic connotation. Something might, eventually, be gained by adding “H/LAM-T” into common usage, but my claim is that there is a severe cognitive cost associated with the use of characters outside of known semantic understanding. Engelbart thinks that “quick look-up” will overcome the strangeness of these characters. I disagree.

I’ll include a little more about this technique, below. I haven’t really gotten it to a satisfactory state yet, but it’s starting to point some directions forward.

More broadly, I’m interested in “seeing inside” of search. I’m blown away by the amount of complexity that Google is able to hide with their ten blue results. It’s an incredible achievement, but perhaps we’d expand from our own “personalized” perspective if we were able to better understand the “space” in which we are looking.

Your correspondent,

R.M.O.

• • •

I’ve been transfixed lately by the open source Google-initiated word2vec library. I trained it on the first billion characters of Wikipedia (of course), and it has “learned” to assign a 200-dimensional vector to each word contained therein. The simplest implication of this is its function as a misbehaving thesaurus: you give it a word, and it will output the “closest” other words. Some examples will help.

In NYC I gave a talk at a center formerly known as Hacker School (now Recurse Center), and I started with the computational connotations (hereafter “CC”) of “hacker”:

Enter word or sentence (EXIT to break): hacker

Word: hacker Position in vocabulary: 6799

Word Cosine distance

------------------------------------------------------------------------

hackers 0.641573

hacking 0.634869

hack 0.519038

jargon 0.496075

geek 0.480616

japh 0.473775

hacks 0.472019

cyber 0.461035

newbie 0.434005

leetspeak 0.426675

ursine 0.424415

cyberpunk 0.419892

securityfocus 0.419757

subculture 0.419074

leet 0.415718

cracker 0.407582

lamo 0.404582

steele 0.402015

developer 0.401020

sussman 0.398030

malicious 0.392435

mitnick 0.391394

newsgroup 0.391120

hackerdom 0.387594

intercal 0.386764

grep 0.377167

fannish 0.374869

perl 0.373532

tridgell 0.369218

flamer 0.366661

shrdlu 0.364778

fic 0.363257

demoscene 0.363033

usenet 0.360683

obfuscation 0.360228

programmer 0.357891

warez 0.357649

webcomic 0.356827

neuromancer 0.356489

slang 0.354669

Does this not explain why the organization might have decided to change their name? I’m very much familiar with the conscious attempts that humans take to shape our language. I know the dogma about how “hackers” are ethically motivated clever explorers of the digital unknown. But what does it mean to insist on a meaning that’s quantitatively different from the words it co-notes? Connotation, by the way, is a very good way to think about these results: the vectors are trained on pairs of words sharing a sentence.

At a Tactical Media Files workshop that I participated in on Saturday, I attempted a similar trick, this time opening with “tactical”:

Enter word or sentence (EXIT to break): tactical

Word: tactical Position in vocabulary: 6478

Word Cosine distance

------------------------------------------------------------------------

combat 0.549381

strategic 0.534384

tactics 0.526885

countermeasures 0.514575

warheads 0.486942

interceptors 0.485585

strategy 0.475635

reconnaissance 0.472701

abm 0.467699

deployed 0.467696

targets 0.466220

stealth 0.465901

alq 0.465775

firepower 0.463735

blitzkrieg 0.460129

amraam 0.459038

infantry 0.457187

logistics 0.451000

cvbg 0.450855

aiming 0.446344

maneuver 0.445396

manoeuvre 0.443323

aggressor 0.441265

defensive 0.440341

operationally 0.438317

airlifter 0.436964

tactic 0.435498

defense 0.433977

offensives 0.433798

sidewinder 0.428010

fighters 0.427416

icbm 0.425953

deployable 0.425019

weapon 0.423242

fighter 0.422070

grappling 0.421749

icbms 0.418057

interceptor 0.414731

weaponry 0.412819

agility 0.411716

Look at how militant the vector of tactical co-notes! I turned these CC’s inward at my own work and values, and was tickled to see the very concept of “visibility” laced with the aerial perspective of modern warfare:

Enter word or sentence (EXIT to break): visibility

Word: visibility Position in vocabulary: 12642

Word Cosine distance

------------------------------------------------------------------------

altitudes 0.540396

altitude 0.529357

maneuverability 0.488706

airspeed 0.488132

survivability 0.467124

speed 0.438493

cockpit 0.436485

sensitivity 0.428580

glare 0.427989

humidity 0.427278

situational 0.415761

fairing 0.412770

subsonic 0.407297

vfr 0.405353

significantly 0.404337

intakes 0.396664

capability 0.395556

manoeuvrability 0.395410

leakage 0.391842

level 0.390953

airspeeds 0.389908

acuity 0.389621

transonic 0.387485

intensity 0.387420

quality 0.387222

thermals 0.384842

throughput 0.383414

brightness 0.382153

levels 0.381913

dramatically 0.381580

readability 0.378554

congestion 0.378261

ceilings 0.376643

vigilance 0.374827

performance 0.374289

lethality 0.373615

sunlight 0.373360

greatly 0.373039

agility 0.371887

reliability 0.371846

Note the contrast with our aural sense. To “listen” is to acknowledge the other, to learn, to ask:

Enter word or sentence (EXIT to break): listen

Word: listen Position in vocabulary: 7642

Word Cosine distance

------------------------------------------------------------------------

hear 0.594319

you 0.508852

learn 0.507548

listening 0.507300

listened 0.498478

realaudio 0.485728

listens 0.484657

sing 0.484529

headphones 0.476096

heard 0.472872

podcast 0.466580

want 0.460877

your 0.460069

instruct 0.459724

talk 0.450591

listener 0.449981

tell 0.444445

read 0.439060

exclaiming 0.432227

sampled 0.431869

watching 0.431578

conversation 0.428230

playlist 0.421875

listeners 0.419649

loudly 0.417167

forget 0.416092

audible 0.414083

remember 0.413376

outtake 0.413345

jingles 0.411440

speak 0.409109

gotta 0.405322

me 0.403930

say 0.399365

whistles 0.398721

laugh 0.397030

ask 0.396598

audio 0.396549

pause 0.396406

know 0.395876

Obviously there are drawbacks, and I was much tickled to see “forgetting” so closely involved with the act of listening.

The Minsky vectors were from this same technique. Minsky is dead, but “Minsky” still evokes a complex network of concepts and peers. What greater compliment for an intellectual than to enter into our language? But the query-response logic of traditional computer programs is not what I am after. For me, the point of a “symbiosis” between human and machine is help us find the questions to ask, not to presume to have an answer.