On Dec 11, 2014, at 11:53 PM, Aran Lunzer wrote:

Hi Robert

Thanks for jumping into these experiments!

It’s been a year: maybe it’s time for Conferencing 5.0?

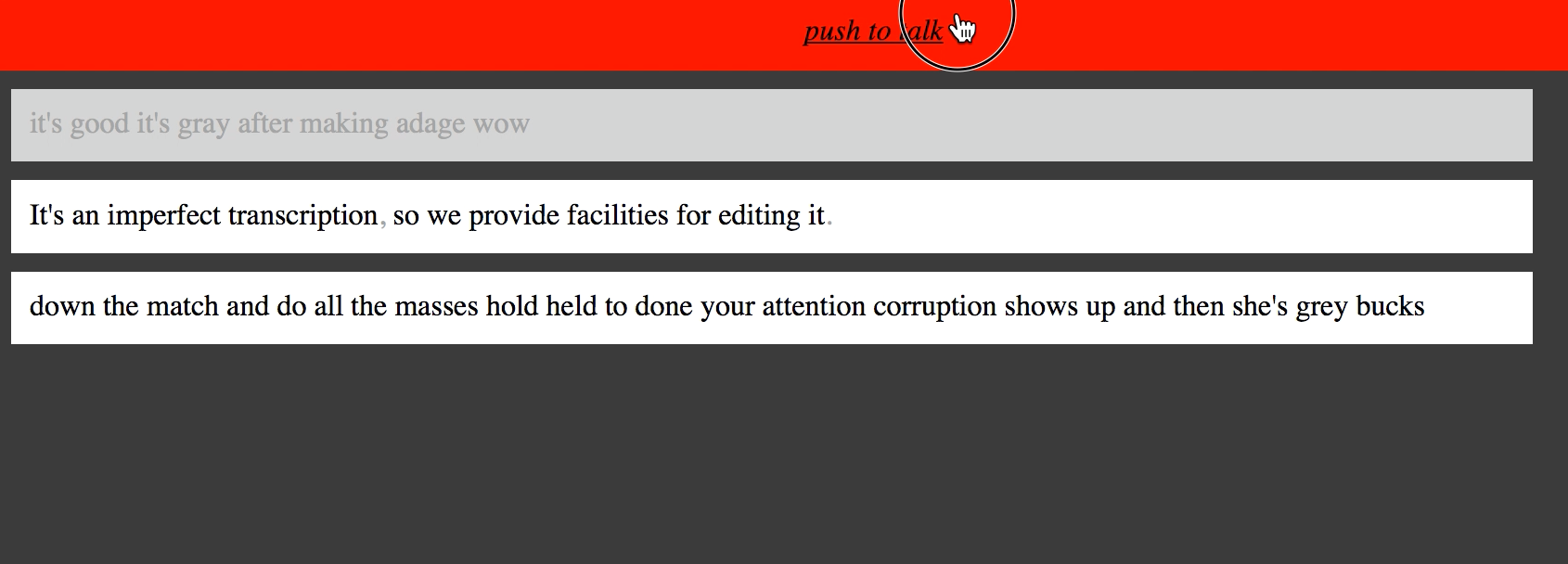

In any case, I came back to this thread recently. I’ve been doing some work with audio/transcriptions/alignment, and have wanted to build tools for thinking through voice/audio (aided by text).

I’ve recorded a demo/documentation video of my latest sketch, and will temporarily run a test instance on lowerquality; it’s set up so that different subdirectory URLs create “private” “rooms.” We can meet at https://conf.lowerquality.com/cdg.

I’m not exactly happy with it, but it’s interesting enough that I thought to share it (i.e. so I can stop working on it for a while).

The things I’m disappointed with:

• The transcription quality (provided by Kaldi) is not quite up to commercial (Google, Apple, Microsoft, Baidu) standards. That said, it’s not hugely behind, and I have many ideas for improving the quality. More damning, however, is how authoritative the text appears, even when it’s wrong. I would like to show a confidence measure, or alternatives. I’m craving lattices.

• The transcription speed can lag behind realtime. On my MacBook, locally, it’s just about realtime, but my server doesn’t quite have the CPU to keep up. And since the transcription uses a lot of RAM, I only allocate two transcription resources, so if more than two people are speaking at once, not everyone will be transcribed live. This one, at least, is a problem that can easily be solved by money.

• The boxy design doesn’t really work. What I was going for initially was something that looked more like a shared document, but which was entirely assembled (live) by voice. So, for instance, when speaking you would have to indicate where in the document you were speaking. I’m still thinking through a UI that can effectively disambiguate between correcting a transcription and editing the document.

• It’s fairly low bandwidth (should only need 16kB/s upstream and 16*N down), but does not handle poor connections gracefully. Then again, at least you can get back to the audio, so in some ways it’s better than anything else out there…
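The two-transcriber limit described above could be sketched roughly as follows. This is only an illustration, not the code running on the test instance: `TranscriberPool`, its method names, and the idea of keeping a "deferred" list for later transcription are all assumptions made for the example.

```python
import threading


class TranscriberPool:
    """Hands out a fixed number of live-transcription slots (hypothetical helper)."""

    def __init__(self, slots=2):
        # Non-blocking semaphore: a speaker either gets a slot right away or not at all.
        self._slots = threading.BoundedSemaphore(slots)
        # Assumption: speakers without a slot are remembered so that their
        # recorded audio could be transcribed after the fact.
        self.deferred = []

    def start_speaking(self, speaker):
        """Return True if the speaker is transcribed live, False if deferred."""
        if self._slots.acquire(blocking=False):
            return True
        self.deferred.append(speaker)
        return False

    def stop_speaking(self, had_slot):
        """Release the slot when a live-transcribed speaker stops talking."""
        if had_slot:
            self._slots.release()


pool = TranscriberPool(slots=2)
live = {name: pool.start_speaking(name) for name in ["a", "b", "c"]}
# With two slots, "a" and "b" are transcribed live and "c" is deferred.
```

More CPU and RAM would simply mean a larger `slots` value.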

The things that are sort of interesting:

• The click-a-word-to-listen interaction is very satisfying. Combined with the text area being editable (when the background is white), it’s a compelling beginning to an extremely simple transcription UI. It’s already much better than VLC + an empty Microsoft Word document, which as far as I know is what most non-professionals use…

• What would it be like to use this as a “kiosk” in a shared space to record audio notes, decisions, messages, etc?
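For the click-a-word-to-listen interaction, a minimal sketch of the lookup might look like this, assuming the aligner yields one `(word, start, end)` tuple per word. The sample data, `ALIGNMENT`, and the function names are invented for the example and do not describe the actual implementation.

```python
from bisect import bisect_right

# Hypothetical alignment output: (word, start_seconds, end_seconds), sorted by start.
ALIGNMENT = [
    ("hello", 0.00, 0.42),
    ("from", 0.50, 0.71),
    ("the", 0.71, 0.80),
    ("kiosk", 0.85, 1.40),
]
STARTS = [start for _, start, _ in ALIGNMENT]


def span_for_word(index):
    """Audio span (start, end) to play when the word at `index` is clicked."""
    _, start, end = ALIGNMENT[index]
    return start, end


def word_index_at(t):
    """Index of the last word that starts at or before time t.

    Times before the first word fall back to the first word.
    """
    return max(bisect_right(STARTS, t) - 1, 0)


def snap_to_word_start(t):
    """Snap an arbitrary playback time back to the start of its word."""
    return STARTS[word_index_at(t)]
```

Clicking a word maps to `span_for_word`, while scrubbing the audio maps the other way through `word_index_at`, so the text and the sound stay in step.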

Would love to “hang out” with people here to test it. It’s been very buggy[0], but it’s hard for me to break it alone :)

I’ll try to keep the cdg channel more-or-less open for a couple days.

[0] It won’t work in Safari (they refuse to give access to the microphone), nor will it work on mobile (though Android support is hypothetically possible). Please send me bug reports off-list.

I'm also really looking forward to exploring how your video summarisation and browsing interfaces could be put to good use in Conf 4.0.

Incorporating video/screens/grids would be a possible next step. More on this soon (by some definition of soon).

Wondering: is there a snappier name we could use?

“Snapping” is actually a meaningful concept w/r/t the alignment of text and audio.

Your correspondent,

R.M.O.

Aran

On 11 December 2014 at 22:22, Robert M Ochshorn wrote:

Hello!

I implemented a dead-simple, dead-ugly, multi-user audio conference:

http://rmozone.com:8001/

It's tested in Chrome and Firefox.

- You have to hold down the "push to talk" button when you want to talk.

- You can use the yellow rectangle to rewind.

Some notes, in no particular order:

- I'm sending raw 16-bit PCM audio at ~11 kHz in ~1/40 sec chunks. That works out to ~22 kB/s, which seems reasonable for up- and downstream: even at CDG.

- While the server stores each stream independently, it muxes the streams before sending back to clients, so from a client perspective bandwidth is constant regardless of number of connections.

- Client-server communication uses websockets.

- When multiple people are talking simultaneously, they each receive a unique composite stream back without the sound of their own voice.

- Since every speaker gets a custom stream, positional audio tricks might be fun to play with.

- Getting the raw audio data from the microphone required use of a Web Audio API ScriptProcessorNode object, which is deprecated[1].

- All audio is stored in discrete per-user chunks, which could be represented in ways that go beyond a single timeline.

- The server is written in Python, using Twisted and Autobahn. I've posted the source code online[2] in case it's a useful reference.
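The bandwidth figure and the "everyone but yourself" mix from the notes above can be sketched as below. This is a reading of the description, not an excerpt from server.py: the exact sample rate of 11025 Hz is an assumption (the message only says ~11 kHz), and `mix_minus` and `clip16` are names made up for the example.

```python
SAMPLE_RATE = 11025       # assumed; the notes only say "~11 kHz"
BYTES_PER_SAMPLE = 2      # 16-bit PCM
CHUNKS_PER_SECOND = 40    # ~1/40 sec chunks

bytes_per_second = SAMPLE_RATE * BYTES_PER_SAMPLE      # 22050, i.e. ~22 kB/s
samples_per_chunk = SAMPLE_RATE // CHUNKS_PER_SECOND   # 275 samples per chunk


def clip16(value):
    """Clamp a summed sample back into the signed 16-bit range."""
    return max(-32768, min(32767, value))


def mix_minus(chunks):
    """Build one composite chunk per speaker that omits their own voice.

    `chunks` maps a speaker id to a list of 16-bit samples; all lists are
    assumed to have the same length (one time-aligned chunk per speaker).
    """
    if not chunks:
        return {}
    length = len(next(iter(chunks.values())))
    # Sum everyone once, then subtract each speaker's own samples.
    total = [sum(c[i] for c in chunks.values()) for i in range(length)]
    return {
        who: [clip16(total[i] - own[i]) for i in range(length)]
        for who, own in chunks.items()
    }
```

Because every client gets a single mixed chunk back, the downstream rate stays at roughly `bytes_per_second` however many people are talking.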

Even in its primitive state, it would be fun to test this with many people, in many different locations.

I'm excited to see where this will lead!

Your correspondent,

R.M.O.

[1] To be replaced by AudioWorker objects, which are not yet implemented in any browsers: https://developer.mozilla.org/en-US/docs/Web/API/Web_Audio_API#Audio_Workers

[2] http://readmes.numm.org/rmo-sketchbook/audioconf/server.py

On Thu, Dec 11, 2014 at 04:07:56PM -0800, Felix Wolff wrote:

>

> On Dec 11, 2014, at 3:42 PM, Marko Röder wrote:

>

> > Hey Aran and Felix -

> >

> > Here are some questions and thoughts that I had when reading the summary (great ideas!):

> >

> >> - A conference call could be a lively world, which contains a widget for the presentation content, as well as screens for the video streams of the participants.

> >

> > Could be? Imagine screen sharing (other apps), too. I know we are currently working on making Lively a good presentation system but I imagine Non-CDG-Members joining and presenting some other content… The question is, (how) will I be able to interact with those things in the past?

>

> My first idea is to integrate arbitrary video streams. I don’t think we can do more than show a video of the shared screen. In my first experiments with WebRTC, I built a little Chrome extension that streamed a shared screen into a Lively world. Remember that in Chrome you can only access screen sharing via an extension, not from the page content itself (well, Hangouts can, but we all know that they have several backdoors like accessing your webcam without permission), so an extension will be necessary for sharing the content of arbitrary windows. Then you get a video stream that we can use as media in our conference system.

>

> >

> >> - When someone goes back in time, he switches to his own layer. The other participants are not influenced by that and still reside in the present.

> >

> > Would I be able to see that someone is time-traveling right now (I think yes) and could I join him as a viewer (and maybe branch off his/her video at one point, …)?

>

> I think you should. Time traveling is more fun with a buddy, I guess.

>

> >

> >> - We are able to record everything on the server.

> >

> > Does this include branches? Those might also be interesting (for someone watching in the future) and I, as someone watching later, could see where someone branched off (went back to).

>

> This goes along with the idea of joining someone in the past. If you want to have that ability, you have to stream someone’s branch contents as well and then you can record them on the server side.

>

> >

> >> - The meeting is actually never over.

> >

> > I like the idea a lot! You should be able to hold a follow-up (Tuesday meeting) in the same space, maybe someone watching can just skip over the other time/6 days and will be transported to the next session/interaction…

>

> …or if you missed the first part of a multi-day meeting, you can just watch it whenever you want and join the next meeting live.

>

> >

> >

> > How about notes and bookmarks? I think especially notes (from all the participants) could be valuable.

>

> Good point. Yes.

>

> >

> >

> >> Thanks for visiting SF and sharing your thoughts.

> >

> > I agree! Thanks for visiting, Aran! We should figure out a way to do this more often (maybe in the next year — budget) so we will not have to wait for this tool that will eventually make us feel like we all are in the same space!

> >

> > Cheers,

> >

> > - Marko

> >

> >

> > PS: Where does the 4.0 come from, I thought we still live in a 2.0 world… ;o)

>

> As Microsoft said some weeks ago, this is not an incremental step on what is already there. So then it would have to be 3.0, but I liked 4.0 even more. :-p

>