Today, Libre Graphics Magazine v2.4 arrived in the office.

I opened it, having completely forgotten that I submitted a “thing” at the end of June.

It’s nicer to read in print—I’ll leave it on the table in the library—but for “posterity” (until they update the website) here’s the stuff I sent them.

r

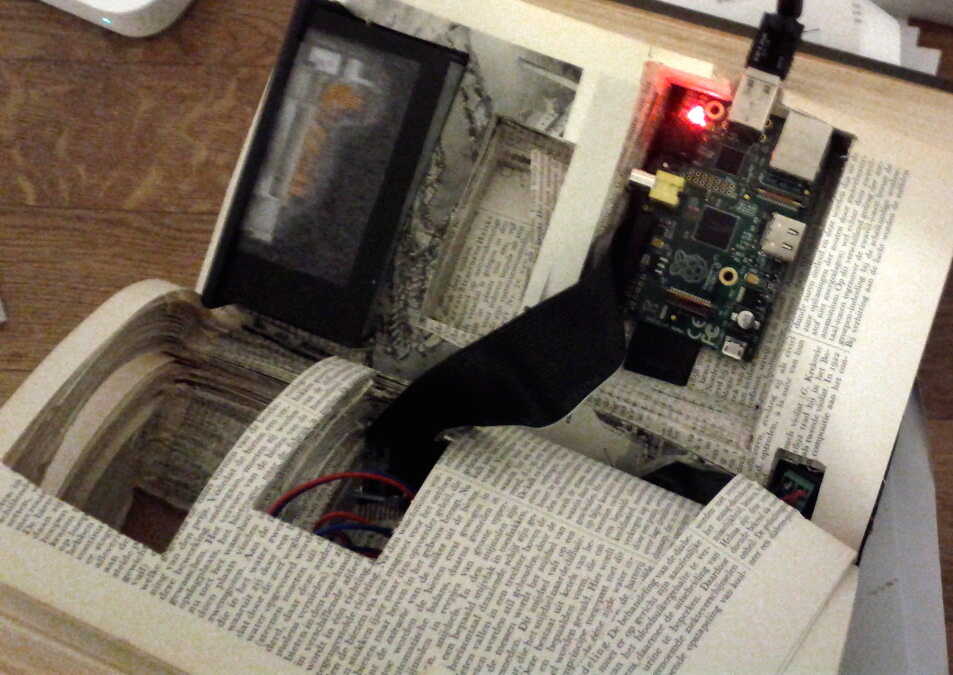

Begin forwarded message:From: Robert M OchshornDate: June 29, 2015 at 12:14:19 PM PDTTo: Ana Isabel CarvalhoCc: ****************, ****************Subject: Re: Heigen-text in the next Libregraphicsmag?I think images will be pretty important for making any sense of the text below. I’m not sure how the images will interleave with text, captions, and project descriptions, but I will leave that task in your capable hands and hope for the best :)I’ve attached a screenshot from the Hyperopia web interface (online at http://hyperopia.meta4.org/):The “central” image of the project can be downloaded from:http://rmozone.com/misc/revue-art-data/2014_hyperopia-thing/IMG_1944.JPG and shows the physical trace.I have attached a cropped image of the carved-out “guts” of the book, and if these three aren’t sufficient, you can find a few more images here.For Pdf To Cognition, I think the most compelling artifact is actually a video animation:Maybe extracting a few screenshots from that can create a sense of its betweenness?I also have a couple of scatterplot renderings:which could be described with the following caption:Rendering, Pdf To Cognition (2014). Words are digitally represented from an image-form basis (here trained on academic neuroscience papers) instead of character codes. The PCA basis forms a continuous space of text-image.(one interesting thing about the second scatterplot is that if you look closely, you’ll see that the blobs are actually not composed of characters—they are just hazy “word forms”)… and this is another good one to include, as it shows the “traces” of training data poking through all comparisons:Caption for that image:Screenshot, Pdf2 To Cognition (2014). The training data (from critical theory reading lists) can reappear in unlikely arrangements when parsing new data.Finally, I mentioned InterLace/Montage Interdit at the beginning. There are some MI images here:(“presort” may be a good one, or the “GOD” studies also have good slitscans… ask me for a caption depending if you need help making sense of these)One other InterLace photo option is to use a photo from the VideoVortex InterLace instance as the photo of me: http://rmozone.com/misc/revue-art-data/rmo/vv9-rmo.pngAll best! Apologies again for the delay, and I hope it will work…-RMOOn Jun 29, 2015, at 11:58 AM, Robert M Ochshorn wrote:OK! SORRRYY I’m so slow. I hope this will work for you. Let me know if you need anything else, or if I can help in any way. Images in-reply.TEXT:“Bring a thing!” Such a simple and innocuous prompt to incite fear and apprehension in your humble free software developer. A thing? But I write software!

I had been exploring the idea that careful reading could be a creative process—that the traces from active navigation of media could themselves be media. And I had been writing software around that concept. Perhaps I had achieved at least a virtual tangibility for digital video collections that would otherwise float through the ether-net, leaving traces exclusively for the advertising and spy agencies with an interest in one form of targeting or another. With InterLace (2012 - ), the playback rectangle is always shown in context, and every attempt is made to treat the viewer as a full participant in determining their points of focus.

While my timelines gave graphic form to the passage of time—at least a shadow of the moment—I still didn’t think my software qualified for membership in the world of things. So was born Hyperopia Thing (2014). Building on the kiwix standalone Wikipedia server, I wrote an experimental wiki interface that would open links inline and implicitly build an “associative trail” in the right-hand sidebar with all of the links, selections, and images clicked on while browsing. To make it a “thing,” I looked to the past and appropriated the book-form of yesteryears’s reference collection: I loaded all of Wikipedia onto a microSD card in a Raspberry Pi, and carved it into an old Dutch encyclopedia. A new research lab should have a copy of Wikipedia for their shelves, I reasoned, even if the physical form was symbolic.

The thermal printer was a joke, but like many good jokes it turned out to be more “true” than expected. Housed inside the physical encyclopedia, I wired up a receipt printer to create an instant record of the reading process, emanating out from the soul of the book. At first I saw the receipts as a gimmicky echo of the digital sidebar, but as I spend more time with them and they outlive the server and database and disk migrations of their digital counterparts, I begin to wonder if there’s something to having a physical trace (a receipt, if you will) that’s worth, well, holding on to.

What traces of us will be left when machines have “learned” from our literature and seem capable of reading and writing and translating our languages with relative fluency? Paradoxically, the word for the digital data inputs fed into computers, the “corpus,” comes from the Latin word for “body.” I was thinking about the assumptions and impenetrable insides of machine learning with a study, Pdf To Cognition (2014), that trained a word-image based model of written text. Is a corpus strung together with characters isomorphic to ASCII character codes, or is a corpus compiled of typographic shape, reaching our eyes as image? And does the model ever transcend its inputs, or do its traces—us—forever lurk within?PROJECTS MENTIONED:InterLace (2012 - )

The first use of InterLace was in collaboration Eyal Sivan on his web documentary, Montage Interdit.

As a data-based film, continuity is not a linear narrative, but is rather achieved at “runtime” by the viewer, who can navigate by tag, source, or timeline, re-sorting to make new continuities and montage. The act of authorship, then, is in the creation of focus and metadata rather than narrative.

Hyperopia Thing (2014)

Custom offline-Wikipedia interface and thermal printer, embedded into an old encyclopedia. Offers wireless network with captive portal collaborative encyclopedia; tracks browsing and prints receipts of drifts.

Hyperopia is a device based on the transitive principle that “Reading is Writing" and that if whole is comprised of parts then the parts should stay connected to the whole: look at something closely enough and the truth of the universe is manifest in its every detail. The name “hyperopia” refers to a (supposed) defect of vision commonly known as farsightedness.link: http://rmozone.com/snapshots/2014/02/hyperopia/

Pdf To Cognition (2014)

Word-shape representation of text, trained on the Neuroscience literature. By taking PDF journal articles and splitting them into words—without breaking them down into letter—I saved each word as an image, and then built up a basis set of word-shapes. The distance between words is entirely a function of their visual similarity.

To encode a concept of difference and distance into these word-forms, I have used a statistical technique known as Principal Component Analysis (PCA), which is a mainstay of any modern compression and data analysis workflow. PCA takes data as input with any number of characteristics, and forms a basis set of abstract components in the same form as the input data, much like Gilbert Ryle's Average Taxpayer. In this case, the components are statistically relevant word-forms that represent correlations between different parts of the shape, and PCA functions by enabling projection from any word-form into a combination of its basis set. Moving from a complex word form with, say, 1,500 pixels, and reducing it to a specific blending of 50 basis words is a dramatic dimensional reduction, and it is this reduction that allows for spatialization and comparison of words as shapes. If I take the liberty of calling this compression a “reading” of the word, then PCA is significant because its reading is bidirectional—to read implies an ability to write. Analysis and synthesis are deeply entwined.BIOPlease feel more than welcome NOT to include this, or to include as little as you can get away with…

Robert M Ochshorn is an artist and researcher working at the Communications Design Group in San Francisco, where he is developing media interfaces for extending human perceptive and expressive capabilities.

Ochshorn holds a BA in Computer Science from Cornell University (Ithaca, NY, USA), and after graduation worked as a Research Assistant in the Interrogative Design Group at MIT and Harvard. In 2012, he was a researcher at the Jan van Eyck Academie (Maastricht, NL), where he developed the open-source InterLace software that was used in collaboration to create the web-based documentary Montage Interdit (presented at the Berlin Documentary Forum 2, June 2012, Berlin, Germany), and he has recently completed a residency at Akademie Schloss Solitude (Stuttgart, Germany). He has performed, lectured, and exhibited internationally.On Jun 2, 2015, at 4:00 AM, Ana Isabel Carvalho wrote:Hello Robert,

I'm writing about the new issue of Libre Graphics magazine. At this moment, we just wrapped up the call for submissions for 2.4, which will go under the theme Capture. From the call description:

Data capture sounds like a thoroughly dispassionate topic. We collect information from peripherals attached to computers, turning keystrokes into characters, turning clicks into actions, collecting video, audio and images of varying quality and fidelity. Capture in this sense is a young word, devised in the latter half of the twentieth century. For the four hundred years previous, the word suggested something with far higher stakes, something more passionate and visceral. To capture was to seize, to take, like the capture of a criminal or of a treasure trove. Computation has rendered capture routine and safe.

But capture is neither simply an act of forcible collection or of technical routine. The sense of capture we would like to approach in this issue is gentler, more evocative. Issue 2.4 of Libre Graphics magazine, the last in volume 2, looks at capture as the act of encompassing, emulating and encapsulating difficult things, subtle qualities. Routinely, we capture with keyboards, mice, cameras, audio recorders, scanners, browsing histories, keyloggers. We might capture a fleeting expression in a photo, or a personal history in an audio recording. Our methods of data capture, though they may seem commonplace at first glance, offer opportunities to catch moments.

We’re looking for work, both visual and textual, exploring the concept of capture, as it relates to or is done with F/LOSS art and design. All kinds of capture, metaphorical or literal, are welcome. Whether it’s a treatise on the politics of photo capture in public places, a series of photos taken using novel F/LOSS methods, documentation of a homebrew 3D scanner, any riff on the idea of capture is invited. We encourage submissions for articles, showcases, interviews and anything else you might suggest.

--

For this, we felt the 'Eigen-text' project, you presented at Cqrrelations, would be a precious and relevant addition.

We would love to feature it as part of the Showcase section. For that, we were thinking of a text with around three or four paragraphs, as well images and visual documentation that we can use to illustrate the project.

We are running on a tremendously tight schedule, and would need your materials by the 18th of June.

Do you think that would be feasible? Let us know -- we're really eager to feature your work.

All the best,

Ana Isabel

--

Libre Graphics magazine

http://libregraphicsmag.com

https://gitlab.com/libregraphicsmag

@libgraphicsmag