Great progress on this idea. Matthias and I have been implementing the necessary computer vision and infrastructure to create this workflow.

The idea is that you draw on paper using different colored Sharpies. Then you "run" the drawing by putting it under a camera. The system "evaluates" the drawing and outputs a printout. You can then draw on this printout to add more lines/constraints, re-run, etc. You can take a printout to the laser room, scan its QR code, and the laser will cut your design.

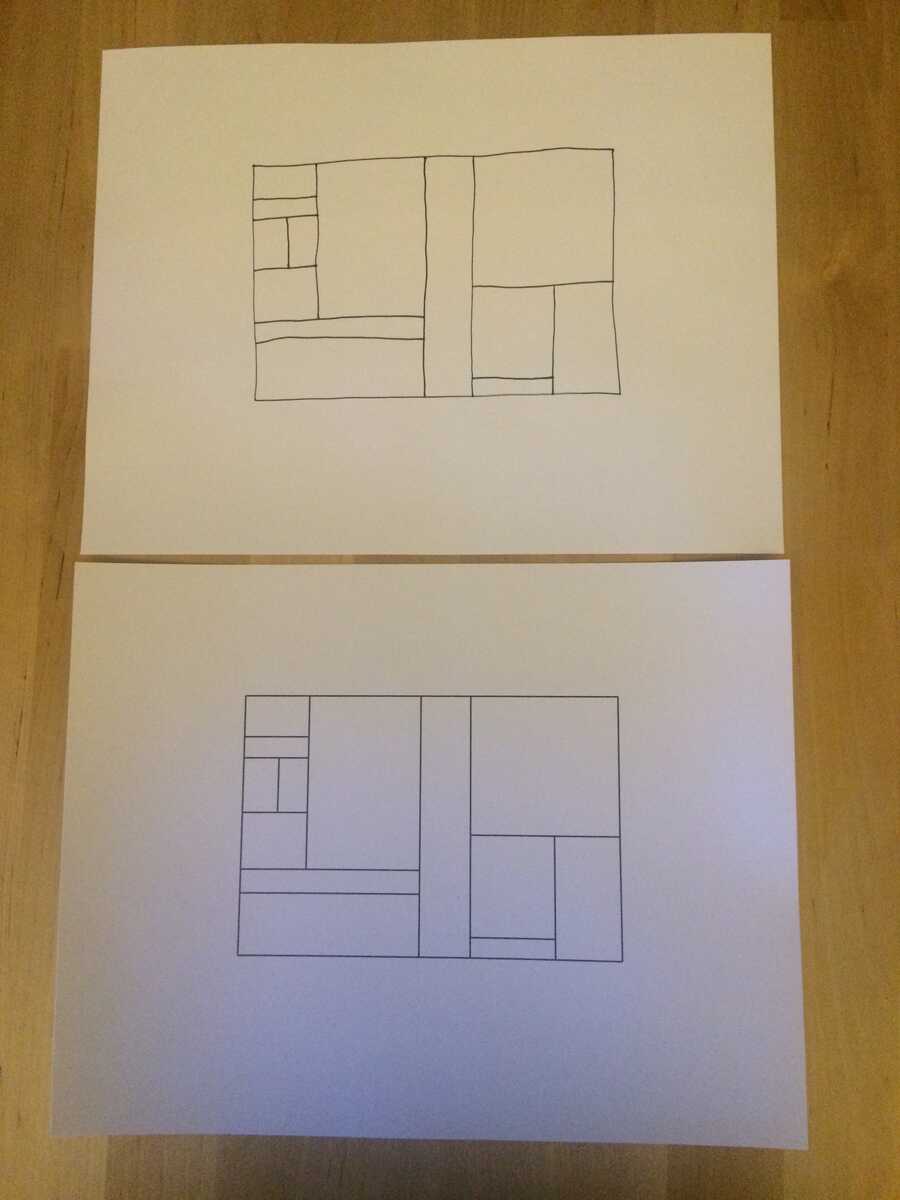

Here is a photo of a drawing next to its output. For this prototype, the semantics for all lines are "constrain to horizontal or vertical".

Notice that scale and placement on the paper are preserved.
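To make the "constrain to horizontal or vertical" semantics concrete, here is a minimal sketch of what snapping a single detected line might look like. It assumes each line has already been extracted from the camera image as a segment with two endpoints in paper coordinates; the `Segment` type and `snap_segment` function are hypothetical names for illustration, not our actual code. Keeping each segment's midpoint and length fixed is one simple way to preserve scale and placement on the paper.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    # Hypothetical representation: endpoints in paper coordinates (e.g. mm)
    x1: float
    y1: float
    x2: float
    y2: float


def snap_segment(seg: Segment) -> Segment:
    """Snap a segment to horizontal or vertical, whichever is closer.

    The midpoint and length are kept, so the snapped line stays the
    same size and in the same place on the page.
    """
    dx = seg.x2 - seg.x1
    dy = seg.y2 - seg.y1
    mx = (seg.x1 + seg.x2) / 2
    my = (seg.y1 + seg.y2) / 2
    half = math.hypot(dx, dy) / 2

    if abs(dx) >= abs(dy):
        # Closer to horizontal (ties go horizontal): flatten onto y = my,
        # keeping the original left/right direction of the stroke.
        sign = 1 if dx >= 0 else -1
        return Segment(mx - sign * half, my, mx + sign * half, my)

    # Closer to vertical: flatten onto x = mx, keeping up/down direction.
    sign = 1 if dy >= 0 else -1
    return Segment(mx, my - sign * half, mx, my + sign * half)


if __name__ == "__main__":
    print(snap_segment(Segment(0, 0, 10, 1)))  # slightly tilted, becomes horizontal
    print(snap_segment(Segment(0, 0, 1, 10)))  # slightly tilted, becomes vertical
```

Note that this snaps each segment independently, so two hand-drawn lines that meet at a corner may no longer share an endpoint afterwards; keeping connections intact would need a real constraint solver over all the lines together.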

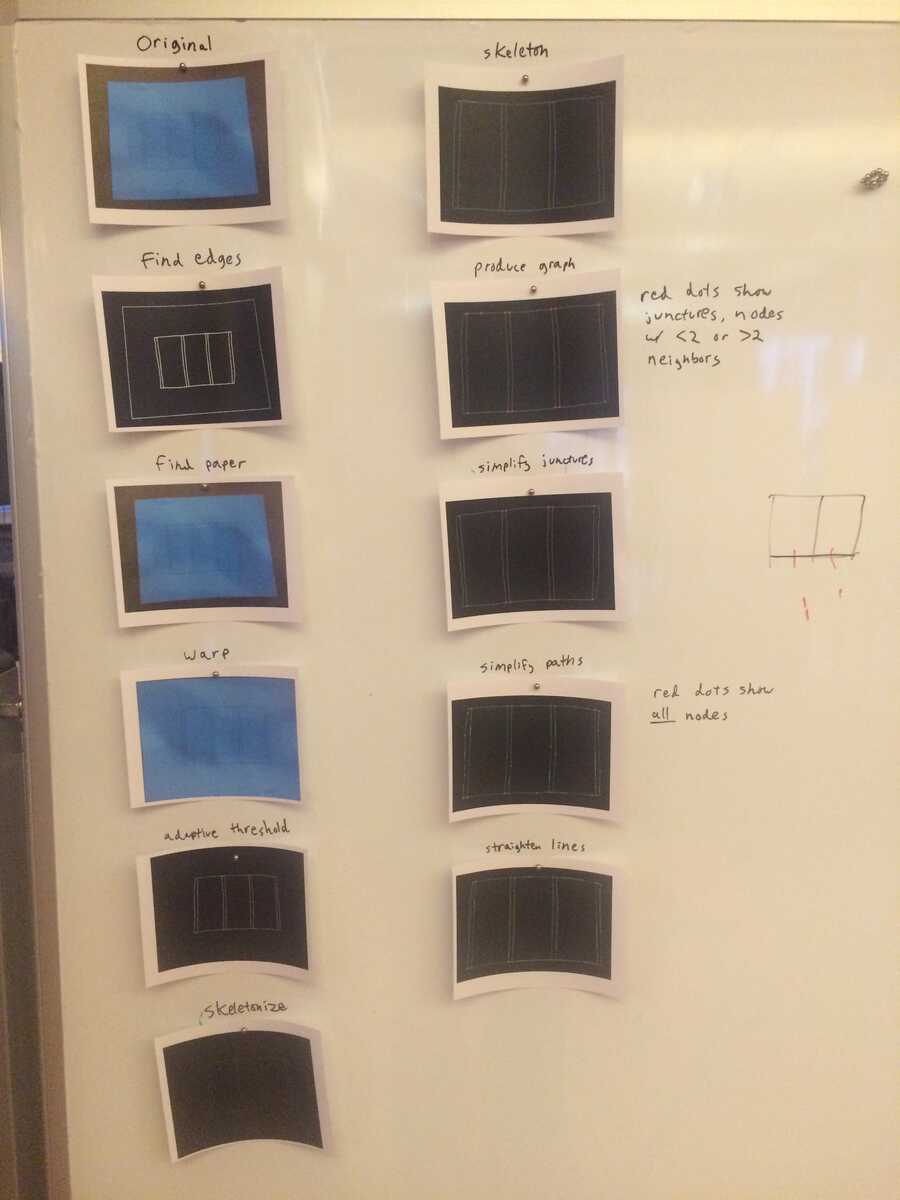

We are also interested in ways to expose the code behind the system, the computer vision algorithms. The computer vision is mostly structured as a pipeline of functions. Each function's output is usually easy to visualize; after all, we need these visualizations to make sure the algorithm is working as it should. So I'm thinking that in addition to the camera/printer apparatus, we might also have a "big board" showing the visualization of each step in the pipeline.
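As a rough illustration of how a pipeline could feed such a board, here is a minimal sketch that runs a list of named steps and records every intermediate output. The `run_pipeline` helper and the `threshold` / `count_ink` steps are hypothetical stand-ins for the real computer vision functions, and the "image" is just a tiny grid of grayscale values so the example has no dependencies.

```python
from typing import Any, Callable, List, Tuple

# A step is a (name, function) pair; each function takes the previous output.
Step = Tuple[str, Callable[[Any], Any]]


def run_pipeline(steps: List[Step], data: Any) -> Tuple[Any, List[Tuple[str, Any]]]:
    """Run each step on the previous step's output.

    Returns the final result plus a trace of (step name, output) pairs,
    one per step, which is what each display on the board would render.
    """
    trace: List[Tuple[str, Any]] = [("input", data)]
    for name, fn in steps:
        data = fn(data)
        trace.append((name, data))
    return data, trace


# Hypothetical steps operating on a tiny grayscale image (rows of 0-255 ints).
def threshold(img):
    # Dark ink pixels become 1, light paper pixels become 0.
    return [[1 if p < 128 else 0 for p in row] for row in img]


def count_ink(mask):
    # Total number of ink pixels in the mask.
    return sum(sum(row) for row in mask)


image = [[250, 30, 240], [245, 20, 235]]
result, trace = run_pipeline([("threshold", threshold), ("count_ink", count_ink)], image)

if __name__ == "__main__":
    for name, value in trace:
        print(name, value)
```

This assumes each step returns a new value rather than mutating its input in place; otherwise earlier entries in the trace would change after the fact and the board would show the wrong intermediate results.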

Right now I'm thinking each visualization would be displayed on an iPad attached to the board with magnets (maybe 20 iPads?). Projection via Room OS would work too, but you kind of want high resolution, since there are pixel-level details that are useful to see.

Ideally, the Python code would also be displayed on the big board, and would be editable.

Here is a static mockup of the main chunk of computer vision:

And with code collaged in: