An old interview and some newer interleaved cryptic sentences of mine about The Dream went online at the Castle Solitude blog without great fanfare (just the way I like it) a couple weeks ago.

That was right after the Google “deep dream” images were unleashed (“who let the dogs out?”), and I was probably not in the best position to make public judgement on them.

At the urging of Noah Swartz, I did visuals last Thursday at the EFF’s 25th anniversary party (at DNA Lounge). I thought an institutional anniversary would be a good opportunity for another take at the dream, and as I hadn’t done any better than the neural net hegemony, I fired up a GPU-enhanced Amazon EC-2 instance and started grinding through the history of the electronic frontier. I made a few text models (trained from: all legal documents on EFF’s website; all code patches on their github; all of the commit messages; all of Cory Doctorow’s novels) which were as weird and interesting and also boring as we’re used to from our CDG email bot.

I got really obsessed with the idea that you could use Google’s “deep dream” approach to see something other than dogs, if instead of training on ImageNet’s dogs you trained on another corpus, but it turns out the quantity of labeled data and computation one needs to make such a model is rather daunting. My first approach was to download 128 videos of EFF pioneer award winners and try to train a network to take a random frame and deduce which video it came from. The network got very good at the task I gave it, but I have no idea how it figured out the mapping and when I tried to deep-dream it ended up zooming into some crazy RGB/pixel/sensor-level features:

Then I wrote a super terse video tagging interface with cut detection thinking I could come up with more granular labels (i.e. of people, of logos, of settings) but after a few hours I gave up faced with the sheer amount of data I needed:

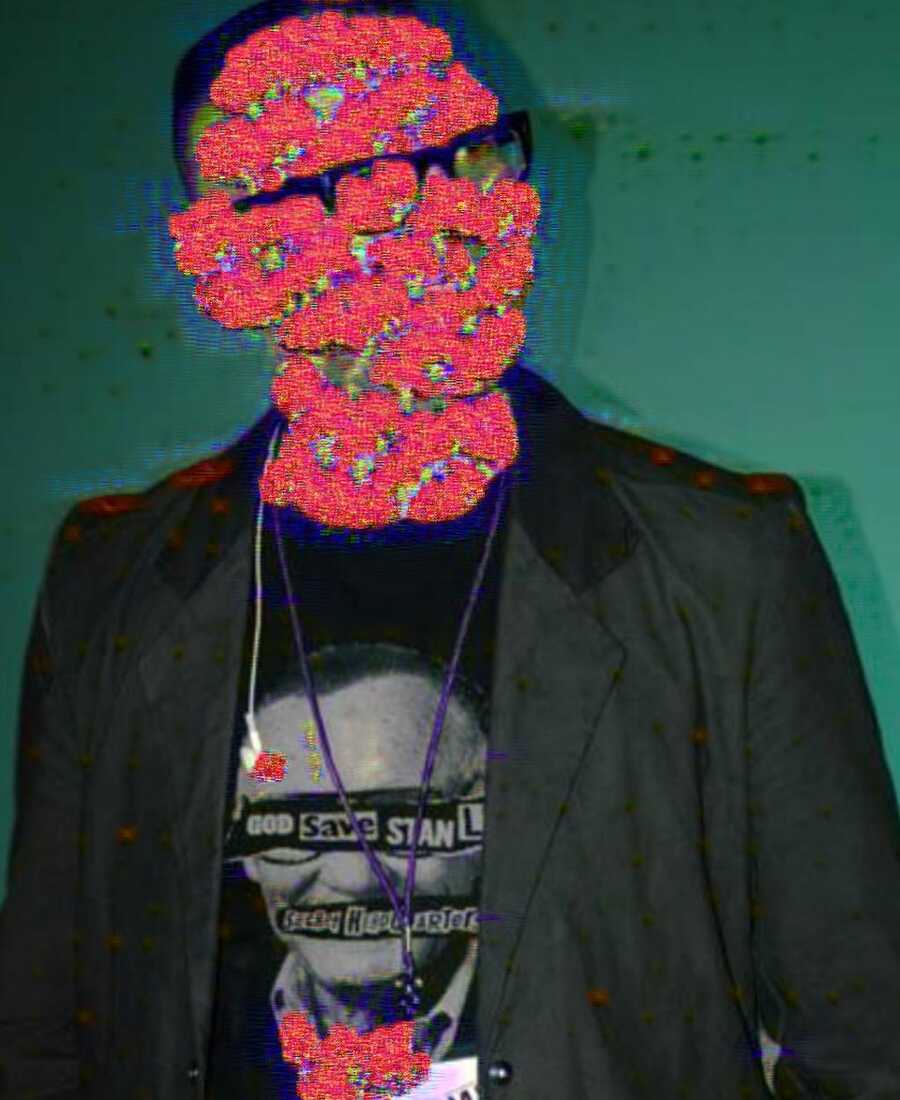

My last attempt—and I still think this is a brilliant idea that would go viral and immediately change public perception of AI—was to train a neural network to discriminate between the different Boston Dynamics robots (and some weaponized drones, terminator iconography, etc). I did this training with Google Image Search results, and I feel like it worked slightly better than the video mapping, but I still didn’t manage to get a network to fantasize BigDog everywhere, as I had hoped.

Here’s robo-Cory-Doctorow:

Clearly *something* is going on there involving flesh-eating AI robots of the future, but I have no idea what is happening. A couple hours before the party started, I put a hold on model training and compiled everything I had into a glitch-art-extraordinaire web visuals thing.

The people didn’t seem to mind it too much:

Some looked at it. Some saw proper nouns they fondly recalled in the text. It started a few interesting conversations. In this form, I don’t think very many people would have realized on their own that all of the text/code/&c was completely fabricated.

I had hoped I would be able to say I learned something more profound from making this. I’m not sure what I learned. Neural nets are weird… as far as I can tell, at least for speech and vision processing, they are increasingly indispensable. Not sure what to make of that.

Your correspondent,

R.M.O.