I am super excited about both this diorama and how demonstrative it is! It feels totally magical to take some stuff lying around and add behavior to it.

For reference, the idea of a stop-motion animation recorded live with physical objects and then projected back onto the scene came from a live stop-motion tool I made in November with a student of mine. (Here's the current version.) I really like the idea of being able to make little animations with physical stuff in the diorama and play them back later. It felt like children could record a little scene and then play it back for their friends and parents, with sounds and everything.

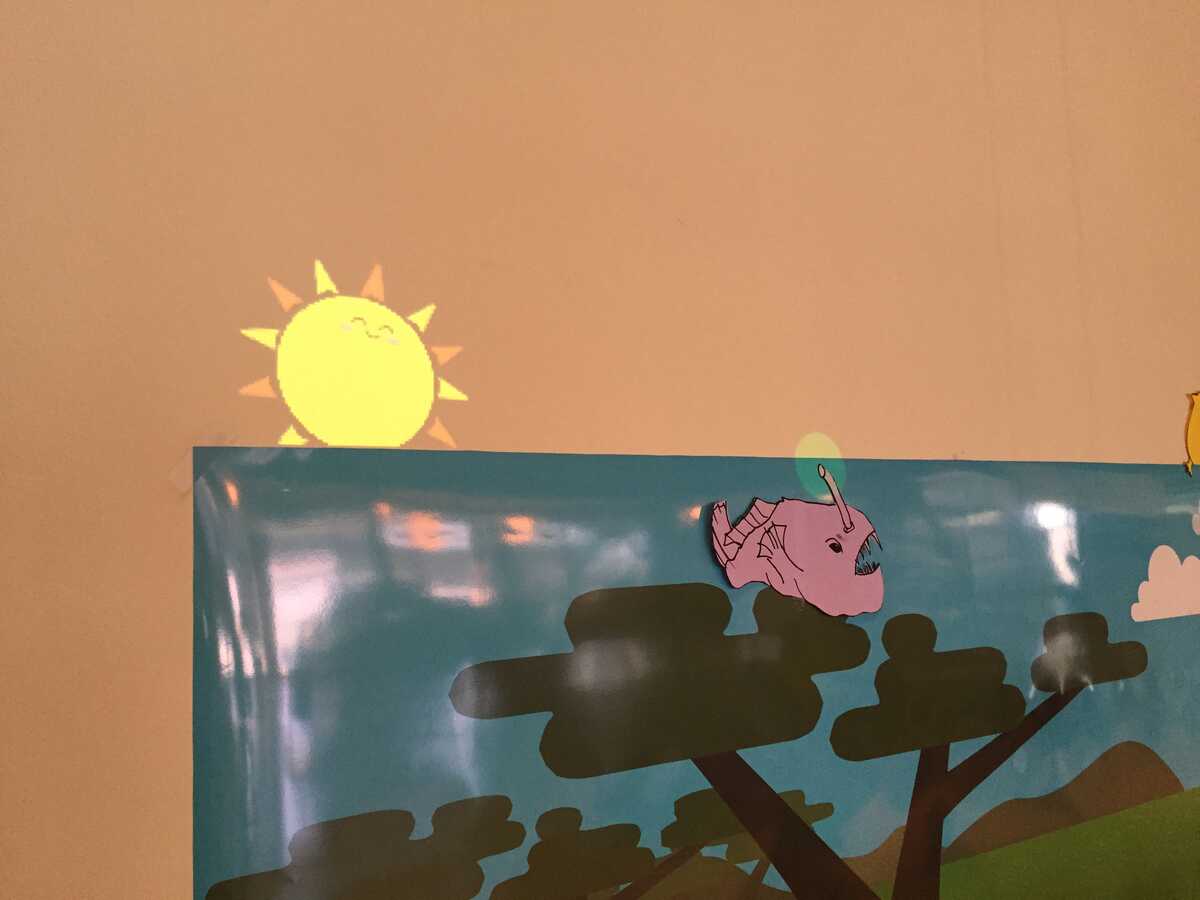

Also, I added a little happy sun I thought up in the shower this morning (with an image taken from Google Images):

(see it animating: http://gfycat.com/DampEasygoingHalcyon)

On Wed, Jun 17, 2015 at 12:13 PM, Bret Victor wrote:

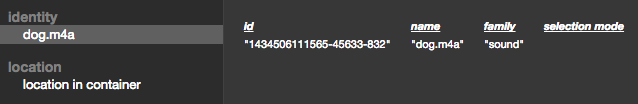

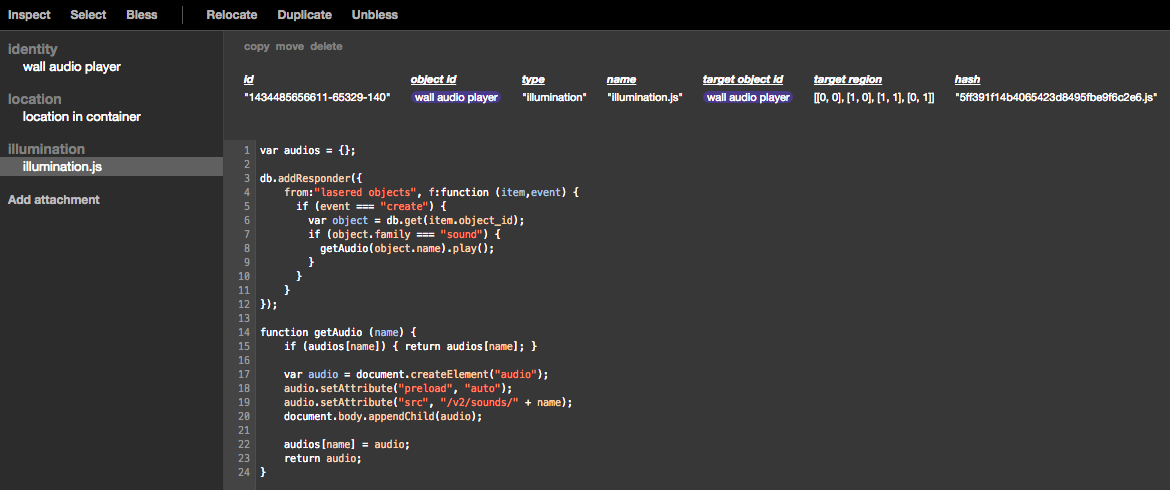

Yesterday, we started making a "dynamic diorama".

video: [IMG_7878.m4v]

-------

I was thinking about the old HyperCard stacks, which were just a picture of a scene where you could click on objects (such as a cat) to hear a sound. One of the original goals of "Hypercard in the World" was to be able to make things like this in the room just as easily as in HyperCard.

At lunch, I mentioned to May-Li that I was thinking of a mural where you could laser the cat and hear the cat. May-Li promptly drew a (cute) tiger, and I taped it to the wall and made it talk. But the flatness of a mural on the wall felt unsatisfying, too screen-like. We got the idea of making a diorama instead, propping up the animals on wooden blocks. May-Li drew a backdrop.

As May-Li drew each animal, I cut it out, propped it up, recorded or downloaded a sound, and blessed it. This is me making the dog:

video: [IMG_7871.mov]

Really easy: just bless the object, set its "name" to the sound filename, and set its "family" to "sound". This is literally all there is to the dog:

------

There's a nearby object (not shown here), called "wall audio player", which watches for lasers; if an object of family "sound" is lasered, it plays that sound. This is all it is:

I added a little more code later to allow for looping sounds and other things.

-----

Toby drew the "yay" man at back-right.

Glen recorded a stop-motion animation with the raindrops, which plays when the cloud is lasered.

Nagle made an anglerfish with a glowing appendage.

May-Li is making a unicorn with a sparkling horn.

-----

Some reflections:

For the basic "click an object and hear a sound" behavior, it almost felt too easy. I think I mean that the fun of the activity had to lie in creating the artwork itself, because there was no challenge in "making it work" technically.
If I were making a webpage instead, it would have been annoying/tedious enough that there would have been more of a sense of accomplishment afterward.

Attaching code to physically present objects (e.g. the "wall audio player") feels so much better than mucking around in filesystems and compilers/browsers, etc. This was one of the goals of "Hypercard in the World", and it feels like a good direction. (It does require a change of mindset. Nagle and May-Li were accustomed to "write code on the computer, then run it", and I had to make them do the opposite: put the unicorn/anglerfish into the scene, bless it, and then write code on the animal itself.)

It became clearer how important it will be to track objects that are moved around by hand. May-Li was talking about having the giraffe respond when the tiger got too close, etc., and we can't really do that yet because the locations of all objects are set manually. (This is good: one of the reasons for doing activities like this is to bring issues like this into focus.)

As people added new kinds of behavior (looping sounds that are toggled on and off, rain animation), it revealed some of the limitations of the selection model I'm using and gave me ideas on how to improve it.

- Because I'm abusing the "family" field (which is supposed to be for mutually exclusive selection) to mark the object as a sound, I can't use the selection mechanism here. Instead, there should probably be a separate "tags" field, so you can tag the object as "sound" without affecting its selection behavior.
- There probably should be a "highlight mode" in addition to the "selection mode", so you can specify whether and how the object highlights when selected.

People contributing together to a shared dynamic mural was in the Research Agenda, and I'm happy we're starting to touch on it. It'll get more interesting as more of the "programming" (creating behavior) can be done out in the world instead of in a text editor.
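
As a rough illustration of the "wall audio player" rule described in this thread (if an object whose family is "sound" is lasered, play the file named by its "name"), here is a small Python sketch. It is not the actual code. The names `BlessedObject`, `WallAudioPlayer`, `on_laser`, the `looping` flag, and the injected `play_sound(filename, action)` callback are all hypothetical. The looping toggle is a guess at what "looping sounds that are toggled on and off" might look like.

```python
from dataclasses import dataclass
from typing import Callable, List, Set, Tuple


@dataclass
class BlessedObject:
    # Hypothetical record for a blessed object in the room.
    name: str              # for sound objects: the sound filename, e.g. "dog.wav"
    family: str            # "sound" marks the object as something that plays a sound
    looping: bool = False  # hypothetical flag for sounds toggled on and off


class WallAudioPlayer:
    """Hypothetical sketch: play sounds for lasered objects of family "sound"."""

    def __init__(self, play_sound: Callable[[str, str], None]) -> None:
        # play_sound(filename, action) is injected so the sketch does not
        # depend on any particular audio library.
        self.play_sound = play_sound
        self.looping_now: Set[str] = set()

    def on_laser(self, obj: BlessedObject) -> None:
        if obj.family != "sound":
            return  # not a sound object; ignore the laser hit
        if not obj.looping:
            self.play_sound(obj.name, "play")
        elif obj.name in self.looping_now:
            # A second laser hit on a looping sound turns it off.
            self.looping_now.remove(obj.name)
            self.play_sound(obj.name, "stop")
        else:
            # The first laser hit starts the loop.
            self.looping_now.add(obj.name)
            self.play_sound(obj.name, "loop")


# Usage: record calls instead of playing audio.
calls: List[Tuple[str, str]] = []
player = WallAudioPlayer(lambda name, action: calls.append((name, action)))
player.on_laser(BlessedObject("dog.wav", "sound"))
player.on_laser(BlessedObject("backdrop", "scenery"))  # ignored: not family "sound"
ambient = BlessedObject("ambient.wav", "sound", looping=True)
player.on_laser(ambient)  # starts the loop
player.on_laser(ambient)  # stops it again
# calls == [("dog.wav", "play"), ("ambient.wav", "loop"), ("ambient.wav", "stop")]
```

The callback keeps the rule itself (family check plus loop toggling) separate from however sound is actually produced in the room.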
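
The proposed split between "family" and "tags", plus a separate highlight mode, might look something like the Python sketch below. It is a guess at one possible data model, not the system's actual one. `BlessedObject`, `Selection`, `highlight_mode`, and `sound_objects` are hypothetical names. The idea is that "family" is used only for mutually exclusive selection, while the audio player filters on a "sound" tag. That way an object can both play a sound and take part in a selection group.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class BlessedObject:
    # Hypothetical object record illustrating the proposed split of fields.
    name: str
    family: Optional[str] = None                 # ONLY for mutually exclusive selection
    tags: Set[str] = field(default_factory=set)  # free-form markers, e.g. {"sound"}
    highlight_mode: str = "outline"              # hypothetical: "none", "outline", "glow", ...


class Selection:
    """Hypothetical sketch: mutually exclusive selection within each family."""

    def __init__(self) -> None:
        self.selected: Dict[str, str] = {}  # family -> name of the selected object

    def select(self, obj: BlessedObject) -> None:
        if obj.family is not None:
            # Selecting an object replaces any previous selection in its family.
            self.selected[obj.family] = obj.name

    def highlight(self, obj: BlessedObject) -> str:
        # An object highlights according to its own mode, and only while selected.
        if obj.family is not None and self.selected.get(obj.family) == obj.name:
            return obj.highlight_mode
        return "none"


def sound_objects(objects: List[BlessedObject]) -> List[BlessedObject]:
    # The audio player filters on the tag, leaving "family" free for selection.
    return [o for o in objects if "sound" in o.tags]


# Usage: two animals share a family, and both are tagged as sounds.
tiger = BlessedObject("tiger.wav", family="animals", tags={"sound"}, highlight_mode="glow")
giraffe = BlessedObject("giraffe.wav", family="animals", tags={"sound"})
backdrop = BlessedObject("backdrop")
sel = Selection()
sel.select(tiger)
sel.select(giraffe)  # replaces the tiger as the selected "animals" object
```

With this split, selecting the giraffe deselects the tiger, but both remain playable because playability comes from the tag rather than the family.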