I’m pretty sure this wasn’t what he meant, but Sam asked for a “narrative” underlying our in-progress collaboration. The first half is dull/grandiose regurgitation of basic CDG dogma, and then I try to explain/constrain the next-gen camera-projector hardware within that context. I’m not really sure how useful these words will be to anyone, but I hope it at least helps to articulate my intentions/pretensions, and perhaps will inspire some new ideas. The level of detail is a bit strange: I am taking Peppy and Chipper as “knowns,” while dynamic@ hasn’t even met Chipper yet. Well, in short, Chipper Camera is this on a servo, but has a wildly different personality from these disagreeable fellows:

The narrative also shies away from articulating all of the (many!) steps we will need to take for Big Box to be a usable abstraction, but even just writing it helped me focus on what it is, and what it isn’t. Unfortunately, many interesting things will be out of scope for Big Box, but on the other hand, I hope the submodules inside (Peppy, Chipper, &c) can be reassembled in different configurations (for instance to support overhead augmentation).

Your correspondent,

R.M.O.

—

The Desktop Metaphor introduced at PARC in the 70s was a breakthrough. It was nothing more than an illusion, but this illusion was foundational for the comprehension and adoption of mainstream computation. In short, by employing the Desktop Metaphor, computer users could believe that their documents and applications lived inside a set of icons spatially arranged on a CRT display. When icons were activated, their contents would appear in a 2-D “window” with simulated occlusion, so that the Desktop and other windows could appear to persist.

Forty-some years later, the ubiquitous Desktop is giving way to the “home screen,” documents are dissociated from even a virtual location in The Cloud (itself a vessel for dismal illusions: choose between a stable mainframe and the vaporous Desktop), and the UNIX shell lives on through TTY emulators as “ground truth” in the networked age. While cats and toddlers swipe at iPads, and computing has in some ways never been easier or more accessible, the knowledge and expertise necessary to create or modify one of these iPad applications is rarefied and arcane: its macho, brogrammer/beardo techniques could hardly be further from the infantilizing ease of use that is packaged and promoted.

The delicate illusion and metaphor of personal computing is losing its coherence: the pixel-art trash can is now an ill-defined “archive.” We seem to be saving everything, and yet when the signal drops out or the battery fails we have nothing. Even when we are “online,” which is most of the time, the omnipresent search bar only offers us a search in the narrowest sense, for we must already know a name for what we’re seeking. Never can we see the shape of the whole. Images and text flash onto our screen, but no sooner have they appeared than they must recede into a virtual “tab” or otherwise dissolve and allow our precious pixels to process a new notification.

The revivified old ideas of “Virtual” and “Augmented” Reality both raise the illusory stakes. The former proposes to create a synthetic world with such fidelity that we may step out of the real, while the latter attempts a high-framerate, personalized synchronization of the virtual with the real. When faced with a choice between modifying reality before it reaches our eyes or cutting it out entirely, we reject the set-up as a false choice. We wish to create and co-inhabit with all our senses a reality larger than our screens and richer than even our imaginations. And if there was something valuable in our word processors and web browsers, we will find a way to bring it into the world. For too long has “computer interface” meant contorting the human to the computer.

Our zeal may have us mistaken for retrograde fundamentalists. We are zealots. We are students of a forgotten past. And we are ever-concerned with the foundational units of our new world-to-be. But we are pragmatic prototypers at heart, and we will submit to great pains to catch a glimpse of what might lie beyond.

It is with all this in mind that we at CDG are focused on our room. We’re tongue-tied when we try to talk about it, because our language and our computer interfaces have historically fixated on metaphor, and we are attempting to transcend mere analogy. When we point at a printout on the wall and say that it is code to transform laser coordinates, when we point at a glowing chart and say it is the print queue, or when we point our laser at an e-mail label, we are not pointing to metaphors or illusions pretending to be code and documents; we are pointing at the thing itself. That’s the goal, at least. It’s not a “view” on a git-backed directory. Our room is not play-acting from a 3-D model we built of CDG. If we are still using inode-backed file systems and constructing virtual perspective transforms, that is only because we lack the meta-materials and cheap-as-sand circuitry to truly bake our bits into the room.

With Peppy Projector and Chipper Camera, the two servo-based modules we’ve conceptualized and started to assemble so far, we inch towards a more seamless union of the digital and the physical. Now that we can superimpose high-resolution projection over a large area, media can come alive wherever it is and we need not shift our attention to a fixed screen. And our ability to capture high-resolution images means that we no longer need to assume that the world is static and immutable; when blessed and enchanted objects are moved, their interactions can follow.

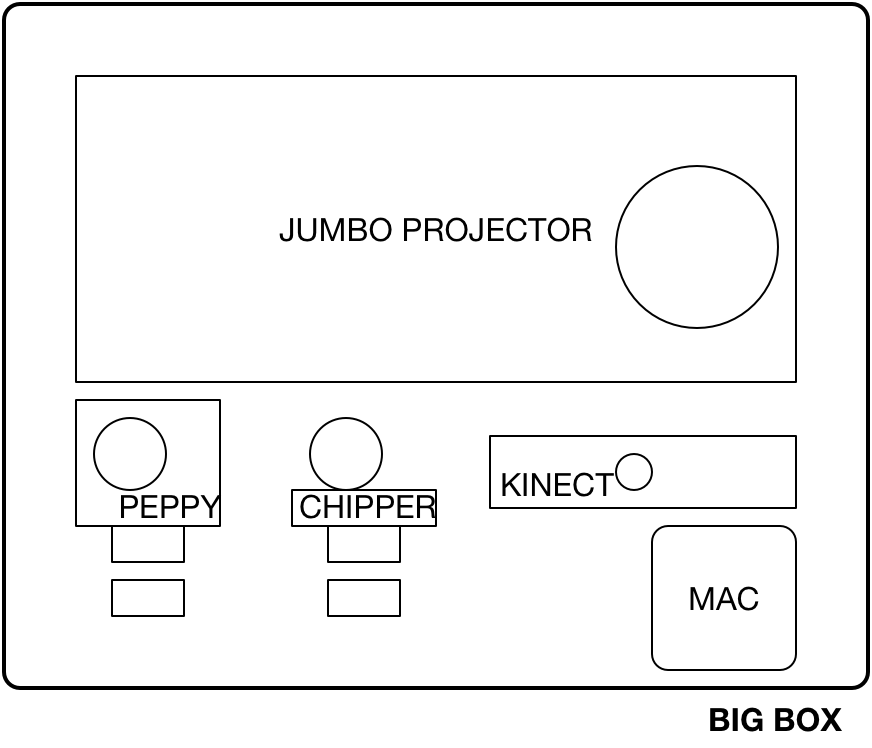

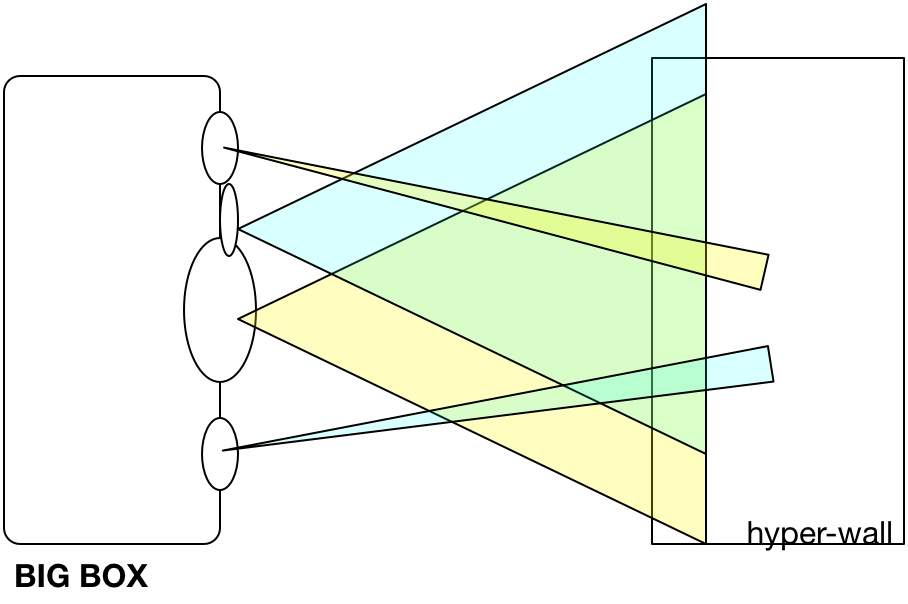

But in order to make good use of these capabilities, we need to encapsulate and abstract. Following the Digital Overhead Lamp prototype, we are working on a Big Box iteration for large-surface, exhibition-style standing interactions. As (extremely poorly) illustrated in the following diagram, the field of view of a standard wide-throw projector is matched with the servo/telephoto capabilities of custom-designed projector and camera modules. A Kinect is included for depth and RGB readings throughout the scene, and a Mac can feed images to both projectors while processing camera data.

Big Box is of immediate utility in the creation of hyper-walls. Printed cards can be positioned (magnets? velcro? putty?) on a wall (whiteboard?). They may contain some combination of photos, text, and even hand drawings. When printed, cards may be pre-associated with links and actions; if hand-drawn, the associations may be added later. Big Box is able to detect when cards are added to or removed from the wall, and cards may be selected with a laser. Selecting a card invokes Peppy to create a high-quality display/action in the proximity of the card. The state of a hyper-wall can be immortalized at any moment with a scan: in a matter of seconds, Chipper can capture and stitch together a 300+ DPI rendering of the entire wall (which could be printed and hung from the ceiling). The Kinect allows interactions to respond to the position of people in front of the wall.
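To get a feel for what a 300+ DPI scan asks of Chipper, here is a back-of-the-envelope sketch in Python. It rests on a hypothetical model: each capture is zoomed so that one sensor pixel spans 1/300 inch of wall, and neighbouring captures overlap by a fixed fraction so the stitcher has shared features to register against. The function name `plan_tiles`, the wall size, the sensor resolution, and the 20% overlap are all placeholders. None of them is a measurement of our hardware.

```python
import math


def plan_tiles(wall_w_in, wall_h_in, sensor_w_px, sensor_h_px, dpi=300, overlap=0.2):
    """Return (cols, rows) of telephoto captures needed to cover a wall at `dpi`.

    Hypothetical model: each capture is zoomed so one sensor pixel covers
    1/dpi inch of wall, and neighbouring captures share `overlap` of their
    width/height so a stitcher can register them.
    """
    if not 0 <= overlap < 1:
        raise ValueError("overlap must be in [0, 1)")

    def count(wall_len, sensor_px):
        tile = sensor_px / dpi            # inches of wall seen by one capture
        step = tile * (1 - overlap)       # new wall each extra capture adds
        if wall_len <= tile:
            return 1
        # The first tile covers `tile`; each further tile advances by `step`.
        return 1 + math.ceil((wall_len - tile) / step)

    return count(wall_w_in, sensor_w_px), count(wall_h_in, sensor_h_px)


if __name__ == "__main__":
    # Placeholder numbers: an 8 x 4 ft wall and a 6000 x 4000 px sensor.
    cols, rows = plan_tiles(96, 48, 6000, 4000)
    print(f"{cols} x {rows} grid = {cols * rows} captures")
```

With those placeholder numbers the sketch plans a 6 × 5 grid, or 30 captures. Whether that fits in “a matter of seconds” depends on servo travel and settle time, which this sketch ignores.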

We can conceptualize our database-zine-thing as a hyper-wall, where the data-tables, apps, and code segments are linked cards. (Perhaps adding the word “frame” to the vocabulary would be useful for the data-tables.) Likewise for our library, where book spines hold their contents, both literally and virtually. Additionally, I would like to demonstrate hyper-walls for the study of individual books (with quote-cards) and for videos (with moment-cards).

Big Box is but a step along the way towards the dream of dynamic reality. We will need more hardware (perhaps Toby and Nagle’s work revisiting overhead interactions will motivate a new Digital Overhead Lamp), and we will have new needs we cannot anticipate. Sound and touch are all too neglected in these designs, though at least we don’t cut off their naturally occurring existence in the world.