The world is our barcode.

It was a strange feeling when it worked. The gap between sort of almost working and totally, completely, undeniably working was shorter than anything I’ve experienced programming before. I’d been dragging myself along on all my reserves of willpower, reading paper after paper with names like “Learning Multiple Layers of Features from Tiny Images” and struggling through the documentation of one unsatisfying machine learning library after another. (I threw a lot of time into pylearn2, but its layers of library obfuscation were simply too much for me: the bulk of the code I’m expected to write to use that library seems to be YAML, not Python as the “py” would suggest.)

On Thursday, I remotely recorded (through a pair of reverse SSH tunnels) a collaborator in LA flipping through every page of The Silver Book. For each page, she removed the provisional physical post-it notes and then took her hand off the page, so that I would have an unobscured image of it. This afternoon, I wrote a shim interface to scrub through the video and mark the timecode of each unmolested page. I extracted the video frames within a two-second window of each marked point, ending up with around 50 slightly different frames per page that caught little bits of hand occlusion and page motion. This gave me a labeled dataset, but even after cropping the images to the book, I felt that 787x385 pixels would be too many features for our impoverished early-21st-century computers.

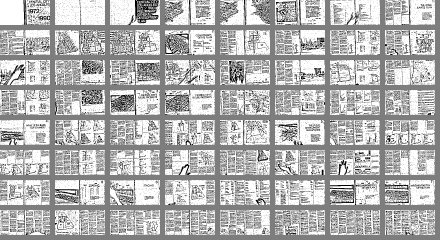
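A minimal sketch of turning marked timecodes into labeled frame indices follows. It assumes the two-second window is centred on each mark and that the video’s frame rate is known (for example via `cv2.VideoCapture(path).get(cv2.CAP_PROP_FPS)`); `frames_for_page` and `build_labels` are hypothetical helpers, not the original shim. At an assumed 25 fps, a two-second window gives 50 frames, in line with the roughly 50 per page mentioned above.

```python
def frames_for_page(timecode_s, fps, window_s=2.0):
    """Frame indices inside a window centred on one marked timecode.

    Hypothetical helper: centring the window on the mark is an assumption.
    """
    half = window_s / 2.0
    first = max(0, round((timecode_s - half) * fps))  # clip at the start of the video
    last = round((timecode_s + half) * fps)           # exclusive upper bound
    return list(range(first, last))


def build_labels(page_timecodes, fps, window_s=2.0):
    """Map each extracted frame index to the page spread it belongs to.

    `page_timecodes[i]` is the marked time (in seconds) of page spread i.
    """
    labels = {}
    for page, t in enumerate(page_timecodes):
        for idx in frames_for_page(t, fps, window_s):
            labels[idx] = page
    return labels
```

The frames themselves could then be read by seeking an OpenCV `VideoCapture` with `CAP_PROP_POS_FRAMES` and calling `read()` for each index.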

The prospect of that many features put me back where I usually am with my “blind” attempts at CV: mindless tweaking. I set up a matrix livecoding session and started fiddling around, and after about an hour I had a preprocessing pipeline that seemed to produce relatively stable, small, and recognizable images of each page. The pipeline:

1. resize page to 200x100

2. average with the previous four frames

3. take an adaptive threshold

4. resize to 50x25, using INTER_AREA interpolation

Now I had a mere 1,250 features (50x25 pixels) for each image, down from nearly 303,000 at the full cropped size. Here’s what the page spreads look like at that scale:

Grudgingly, I assumed I would have to reduce the feature space even further, but this was small enough that I thought I’d at least be able to run some training. First I tried training a Support Vector Machine using the Python bindings to libsvm (through sklearn). I trained on the first 40 frames I had for each page spread, so that I would have the last 10 frames for some basic verification. The model converged in around five seconds, and the first two frames I tested by hand were predicted correctly. The third was wrong, so I ran it on all of the test data: a disappointing 72% accuracy. After reading the sklearn documentation carefully, I suspected that its bindings to LIBLINEAR might be slightly more appropriate for this case. I swapped them in and, before any manual testing, ran the verification on all of the test data. I was shocked when the computer returned 100% success. Computers never return 100% success; I assumed there was a bug somewhere in my code.
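The train/test protocol and the libsvm-to-LIBLINEAR swap might look like this in scikit-learn, where `SVC` wraps libsvm and `LinearSVC` wraps LIBLINEAR. The helper names, the division by 255, and the default hyperparameters are assumptions, and this sketch makes no claim to reproduce the 72% and 100% figures above.

```python
import numpy as np
from sklearn.svm import SVC, LinearSVC


def split_per_page(X, y, n_train=40):
    """Use the first n_train frames of each page for training, the rest for testing.

    Assumes the rows for each page are stored in recording order.
    """
    train_idx, test_idx = [], []
    for page in np.unique(y):
        idx = np.flatnonzero(y == page)
        train_idx.extend(idx[:n_train])
        test_idx.extend(idx[n_train:])
    return X[train_idx], y[train_idx], X[test_idx], y[test_idx]


def compare(X, y):
    """Fit a libsvm-backed and a LIBLINEAR-backed classifier; return test accuracies."""
    X = X.astype(np.float32) / 255.0  # assumed scaling of pixel features to [0, 1]
    X_tr, y_tr, X_te, y_te = split_per_page(X, y)
    results = {}
    for name, model in [("libsvm (SVC)", SVC()), ("liblinear (LinearSVC)", LinearSVC())]:
        model.fit(X_tr, y_tr)
        results[name] = model.score(X_te, y_te)  # mean accuracy on held-out frames
    return results
```

Holding out the last frames of each page, rather than a random sample, keeps the test frames from being near-duplicates of training frames recorded a fraction of a second earlier.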

But in fact it really does seem to work. I hooked it up to the raw video and added some scrubbing controls. To my surprise, it even worked when large portions of the page were covered by Henriette’s head or coated in post-its. Here’s a video of a minute of me scrubbing around (70 MB): the bottom is the image from the camera, and the top is the page that the computer thinks (correctly!) is open. What amazes me is how few hacks the process needs, once I’d stumbled into generating a model. I don’t have any heuristics about nearby pages or anything, just the slightest bit of hysteresis (it needs to identify a page three times in a row before switching to it).
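The hysteresis rule (switch only after three identical predictions in a row) can be sketched as a tiny state machine. `PageHysteresis` is a hypothetical name, and clearing the pending switch whenever a prediction agrees with the page already shown is an assumption about how interruptions were handled.

```python
class PageHysteresis:
    """Change the displayed page only after `required` consecutive matching predictions."""

    def __init__(self, required=3):
        self.required = required
        self.current = None    # page currently shown
        self.candidate = None  # page we might switch to
        self.count = 0         # consecutive predictions of the candidate

    def update(self, predicted):
        if predicted == self.current:
            # Agrees with what's shown: abandon any pending switch.
            self.candidate, self.count = None, 0
        elif predicted == self.candidate:
            self.count += 1
        else:
            # A new candidate starts its own streak.
            self.candidate, self.count = predicted, 1
        if self.count >= self.required:
            self.current, self.candidate, self.count = self.candidate, None, 0
        return self.current
```

Feeding it the per-frame classifier output means a single misclassified frame never flips the display, at the cost of a two-frame delay on genuine page turns.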

Your machine, learning, and correspondent,

R.M.O.