Well, this made my morning—if not my week. I want this on the film I’m working on now. I will explain the book’s anatomy in a bit more detail now that you have taken things so incredibly far.

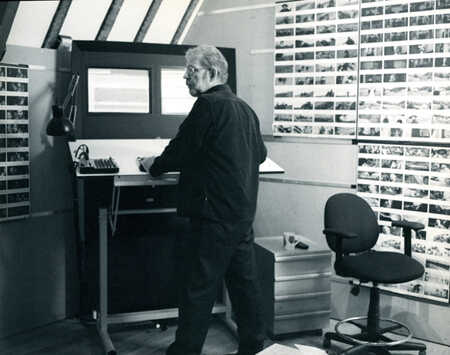

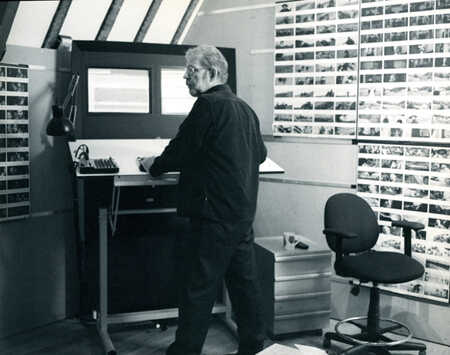

First, it’s interesting to note that the origins of this book were not a book at all, but a WALL.

Second, it should be noted that Walter is often “using” the wall in a very peripheral manner. He’s not looking at it—it’s more that he is surrounded by it. “The photos are vinegar and I’m a cucumber—the goal is to get pickled by them.” They are part of the environment, not bound to a glowing rectangle.

In the old days, the images were generated from 35mm film frames and printed at one-hour photo labs. At a certain point, when video became digital, still frames were generated from markers placed in a timeline and then arranged in a grid on a page and printed out. No more photo labs—just a relatively inexpensive color printer. At some point, the operation shrank further onto pages in a three-ring binder. The advantage is that the pages can easily be replaced on the wall-mounted foamcore using a grid of sticky-tacky tape. As he focuses on different scenes, some pages go back into the back and others go up on the wall. The binder tabs are essentially scenes (actually, macro-scenes called sequences—grouping collections of scenes together).

From what I’ve witnessed, less images go up on the walls than in the past. This may be a function of the films he has most recently worked on, but there is also the possibility that it’s simply a pain to manage all of this stuff, as awesome as it is.

What Are Those Images?

Perhaps the most important thing to say, though, is what these images are. People have different thoughts: it’s the whole movie! It’s one thumbnail image for each shot in the movie! In fact, his “system” is as much as side effect of a process as anything…

Each day, we take notes on the dailies of the movie. We use timecode and text to indicate “where” we are noting. It is painful that the timecode has to be explicitly entered, but that is for another discussion… we use timecode abbreviations to make note-taking easier (the MMSS of HH:MM:SS:FF, where FF is frames).

1602 not so good screaming... not so good

1639 talking without moving lips

1703 good mimic

1721 ok cbb

1739 frowning • good glowering

1756 good

1816 good... good for much longer

1639 talking without moving lips

1703 good mimic

1721 ok cbb

1739 frowning • good glowering

1756 good

1816 good... good for much longer

Then, after notes are taken, all the footage for that day is lined up in a Timeline and scrubbed through semi-randomly, allowing memorable or striking moments to percolate up into one’s consciousness. “Oh, I remember when she smiled like that…” or “Here’s an image that really sums up what I mean about ‘talking without moving lips.’” These moments are marked in the Timeline and then exported as images which feed the Photo Book.

What I find most compelling about it is that the act of skimming and marking the photos is an act of note-taking and familiarizing yourself with the material in a totally different way than textual notes—a way that you rarely, if ever, hear about in the world of video editing. People are obsessed with text and temporal metadata, but sometimes the image itself says more than anything else—especially if you skimmed the footage closely and picked that moment yourself. Thus, the Photo Book actually means something different to the person who created it than a casual observer—the chosen images are summaries of emotional experiences when viewing the material.

Then, when the images are placed on a grid, unintended relationships between images are created (“Hmmm, I never considered cutting from the wide shot to the close up here, but seeing them both on the grid here gives me an idea…”). This serendipitous and peripheral vision experience of one’s media (and memorable moments of their media) inspired me to build a screensaver that would do all of this automatically. The screensaver, nicknamed Kuleshov, was installed on all the editing computers at work, allowing the computers to “dream” about your footage during their downtime.

I find the whole “system” to be wonderful and ripe for further exploration. Many of the ideas RMO and others have been playing with recently touch on this.

[I wonder if it would be interesting to have Walter give a presentation exclusively on the photo wall and all of its various meanings, functions? I am only paraphrasing from my experiences working on two films with him.]

Goodness! I didn’t study your binder closely enough at first to realize that there are dozens of side tabs, but I made this one to start:<IMG_0973.jpeg>The video (hi-res, lo-res) shows me flipping through the binder, and the projection reflecting the current page. Each page is mapped to one of the 482 videos I have from the “Kay Archive” that Yoshiki has been digitizing.It’s still not completely reliable (~75%), but the biggest annoyance right now (aside from the lack of side-tabs) is that there’s no way to seek within each video. I guess pointing a laser at a thumbnail should jump to that moment in time. Or maybe the thumbnails are mere moments on a smooth continuum and the laser can seek in between, as well? TBD.This interaction feels magical in a similar way as the lasers do. In this particular instance, I like the way a viewer’s focus is spread between the binder and the screen. Thinking about what to see, and then seeing that thing. The looking up feels natural here, while the looking left felt strange in the Boston set-up. I’m still not completely sure about the underlying rules.A few reflections/rants below (“dragons,” &c):1. Alan Kay’s VHS archive is full of wonderful nuggets that pop up when you extract thumbnails or whatever. Consider:<Screen Shot 2015-03-08 at 5.58.23 PM.png>… and even more (enigmatically!) on-point:<Screen Shot 2015-03-08 at 5.56.41 PM.png>The office is real!I can’t quite figure out where our Room fits into these crossroads, but it seems like an interesting AI/IA-style dichotomy.2. Computer Vision “best practices” are not to be trusted. Since it’s a field I don’t know very well, I tend to defer to what seems (by OpenCV documentation and Google) to be standard operating practice. This task (finding photographs of known pages) seemed to be a case of object detection. The basic idea is to find noteworthy points (ie. that you’d find again in a differently-sized or rotated image) in the images of the objects and the images from the camera, and then see if there’s a a geometric mapping between the similar points in one image and the other. I got semi-promising results at first using this technique.Here are all of the point-correspondences between a PNG of the grid and a photo of me holding the grid:<Screen Shot 2015-03-08 at 4.09.53 PM.png>And here are just the point correspondences that made coherent geometric sense:<Screen Shot 2015-03-08 at 4.11.39 PM.png>But when I tried with images that didn’t match, the algorithm still found a way to make it work:<Screen Shot 2015-03-08 at 4.15.32 PM.png>and I realized that most of the tracking points found here were corners of the thumbnails against the white background. I suppose it would be notable to see a form like that in nature.3. “The simplest thing that could possibly work”—I realized while suffering through a funny film this evening—would be to keep the page in a known position, un-warp the camera image to isolate the grid, and then resize the grid so that each thumbnail is a single intensity value. The grids, then, would function somewhat as 24-item continuous-value barcodes. I was so excited when that actually worked. There’s the unwarped camera-image in the upper-right, the terminal in the lower-right telling me we “hit” item VPRI-0088 with a distance of 419, and the quick-view on the left showing that VPRI-0088 really is the same grid:<Screen Shot 2015-03-09 at 1.30.18 AM.png>4. Why is this (ie. defiant blindness) an acceptable attitude for macho computer-vision programmers?<Screen Shot 2015-03-09 at 4.13.49 AM.png>5. We shouldn’t lose track of the limitations of the physical world. No, not an emo call for help, but consider:• We ran out of printer paper so I didn’t get to print out the whole binder and I couldn’t see 4/5 of the archive!• I needed to buy a special tool in order to press holes in the paper and fit them into the binder, and then it was a repeated gesture to apply these holes. As point of fact, the shopping and hole-punching had enough novelty to keep me interested, but precisely because it’s not my normal MO. On the other hand, we’ve talked before (w/r/t film industry tooling) about Good Jobs for the Good Intern, and these would certainly fit that bill…• Sometimes the printer prints a little funny and leaves rainbow dust on the edges of the page.So much of the move to the cloud and to the rectangle are supposedly to “fix” problems/hiccups like the above. I can see much promise in these hybrid interfaces, but where’s my reprogrammable matter?• “Whenever you’re ready”: this is the gentleman’s way of saying “action!”<FbOn0l.gif>R.M.O.On Mar 4, 2015, at 2:45 PM, Robert M Ochshorn wrote:For the record, here is a short video of the installation in action.All of the new CDG installations look great! Can’t wait to be back in the mix.RMOOn Feb 28, 2015, at 4:56 PM, Robert M Ochshorn wrote:back to the gallery and make some proper documentation