On Feb 24, 2015, at 3:10 PM, Chaim Gingold wrote:

My immediate reaction to this is that I want the animation effect to attenuate with distance to the mouse cursor. It would be tuned to 1.0 under the mouse, but gently fall off. I’m imagining a radius of effect of something like the width of a column.

I like the idea of mouse-driven attenuation.
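A minimal sketch of the attenuation Chaim describes, assuming a raised-cosine falloff shape and horizontal (column-wise) distance only; the function names `attenuation` and `column_weights` are hypothetical, not part of any existing prototype. The weight is 1.0 directly under the cursor and eases to 0.0 at a radius of one column width.

```python
import math


def attenuation(distance: float, radius: float) -> float:
    """Raised-cosine falloff: 1.0 at the cursor, easing smoothly to 0.0 at `radius`."""
    if radius <= 0:
        raise ValueError("radius must be positive")
    if distance >= radius:
        return 0.0
    return 0.5 * (1.0 + math.cos(math.pi * distance / radius))


def column_weights(mouse_x: float, n_columns: int, column_width: float) -> list[float]:
    """Per-column animation weight, from the horizontal distance between the
    cursor and each column's centre. The radius of effect is one column width."""
    weights = []
    for i in range(n_columns):
        center = (i + 0.5) * column_width
        weights.append(attenuation(abs(mouse_x - center), radius=column_width))
    return weights


# Cursor at x=150 over four 100px-wide columns: only the second column animates fully.
print(column_weights(150, 4, 100))  # [0.0, 1.0, 0.0, 0.0]
```

Each column's animation speed (or zigzag offset) would then be multiplied by its weight. A raised cosine is chosen here only because it has zero slope at both ends, so the effect has no visible edge; a linear or Gaussian taper would work just as well.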

I was going to try a “serious” prototype with a tapering parameterization, but then this happened somewhere along the way, and I thought it captured the idea (i.e., of “focusing attention”) with a brutal clarity that a properly smooth and invisible prototype would have lacked (though I do intend to “fix” this soon). Here is a marquee. It is shouting, as it were, “LOOK HERE!”

To me, one of the most important premises/promises of room-based computing is that we can use our “natural” faculties to handle peripheralization[0]. So much film editing is theorized as directing where the viewer will look[1]. With HD, 3D, and 360-degree cinema, the viewer gets an infinitesimally small increase in capacity to focus on details and angles and degrees of what they’re seeing, but is still on a very narrow track. If “authored work,” as Glen is thinking about it, always comes “at the wrong time,” cinema is the ultimate craft of dead reckoning the “right time” to reveal what you need to see next. It is an art, and it is an industry.

The dynamic medium removes the artificial reasons that an author would need to create a linear sequence of pages or film frames, but (as gaming broadly, and the narrative around Earth Primer more specifically, make clear) it does not yet have a coherent answer to what, exactly, the alternative is. I think it’s great that Earth Primer has a fully functioning sandbox, but its real contribution to The Medium seems to be the depth and play in its path: it’s scripted and guided, but also somehow alive at every moment along the way.

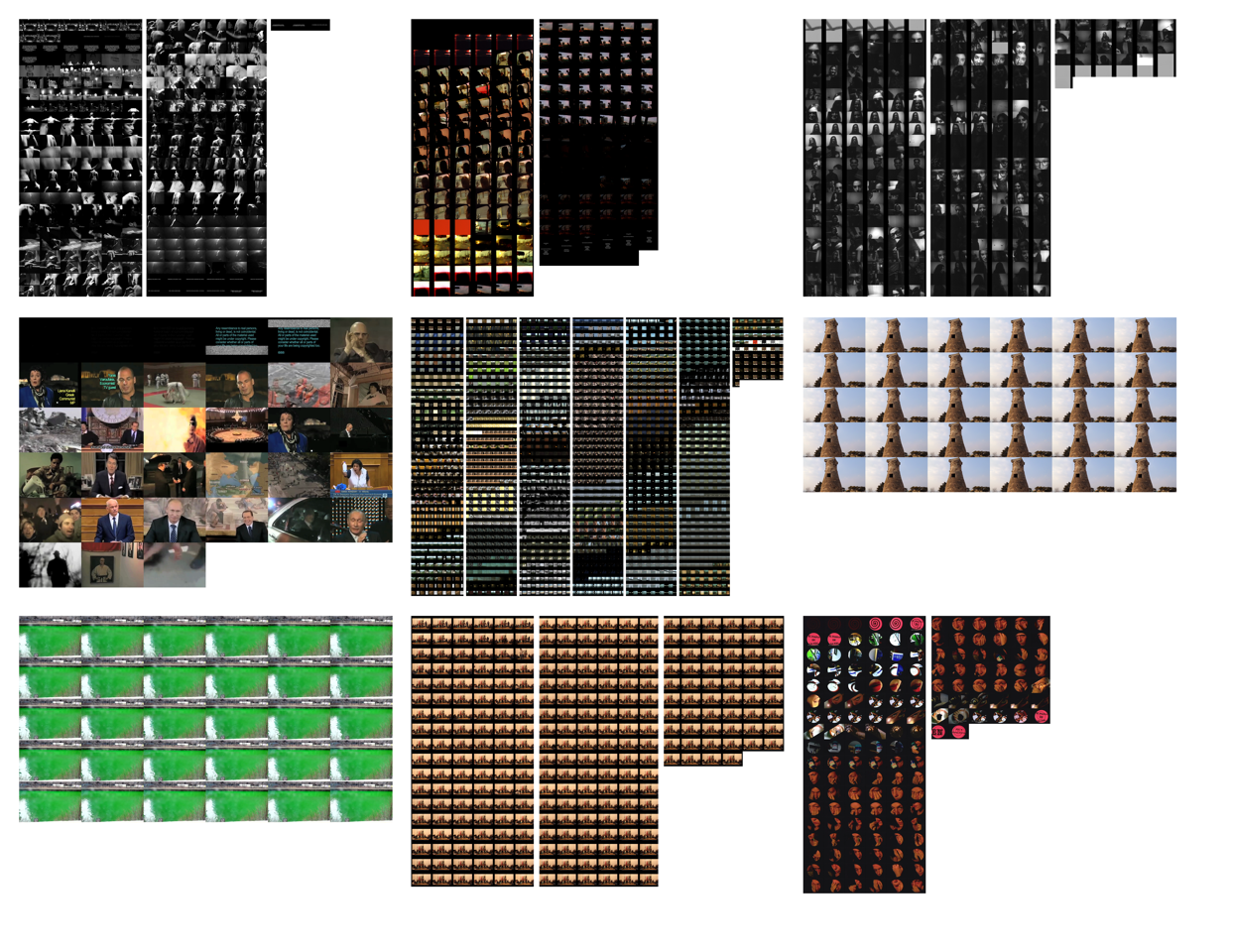

I need to go back to the gallery and make some proper documentation, but I scrambled to install a few of these video grids in a group exhibition at an academic gallery in Boston, built around a feminist video archive of work relating to the body. My correspondent asked me to install InterLace, but it’s not really what I’m thinking about these days, and somehow it didn’t feel right for a show about bodies.

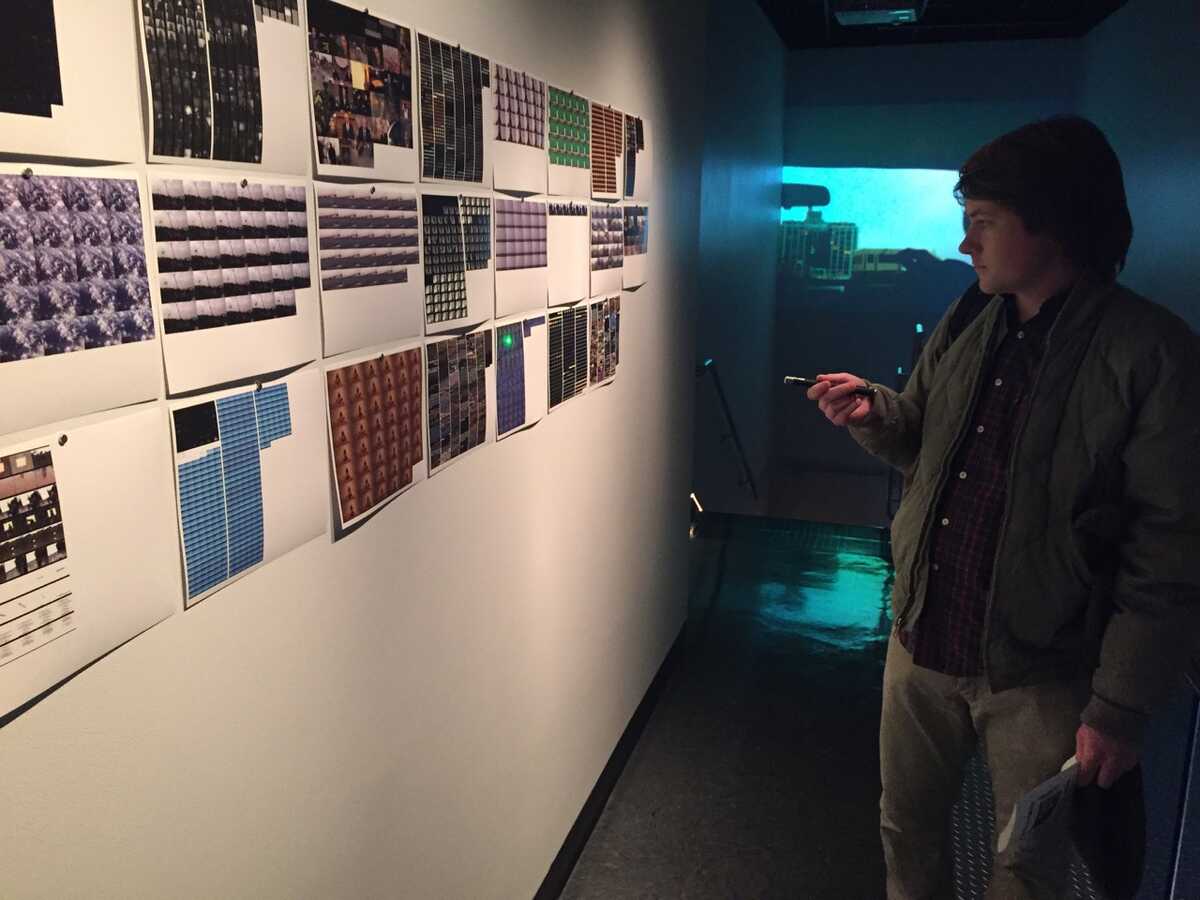

On the wall in the hallway I put up 26 printed video-column renderings. A small old-school TV showed the Kinect and PS3 Eye feeds and their processing, while a projection in the back room showed an animated version of the currently selected poster.

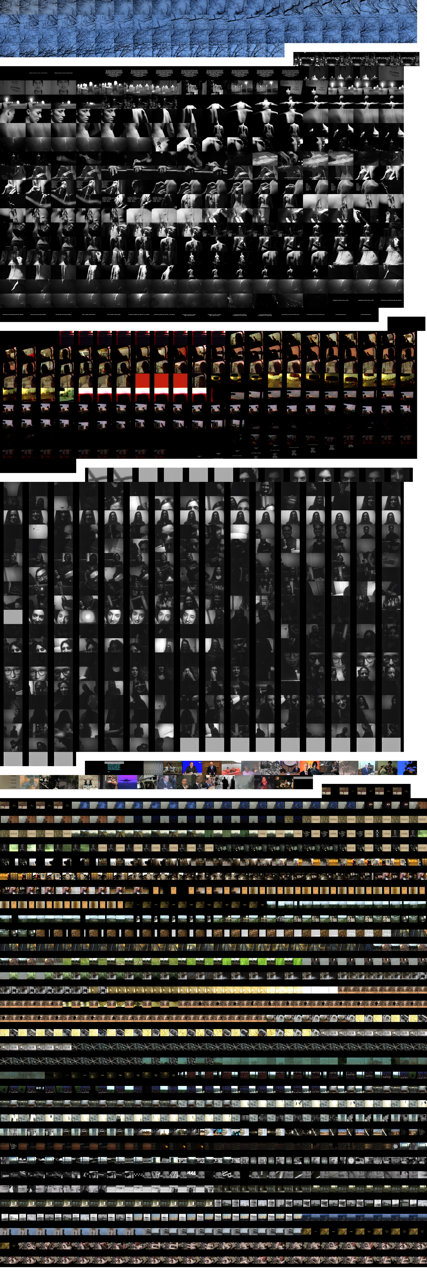

I had originally rendered out all of the grids at the same timescale with the zigzag offset thing:

Even setting aside the gap/aspect-ratio ragged-right weirdness, I didn’t like how this worked (for this exhibition, at least) because it gave so much more space to the long videos. Instead, I ended up giving each video the same amount of space and trying to find some heuristics relating scale, time, and columns (frames come every 2 seconds, and there are always six to a column):
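The two fixed constraints above (one frame every 2 seconds, six frames per column) already determine how many columns a video needs. A minimal sketch of that arithmetic follows; the “equal space” rule, where every video's grid is scaled to fit the same box, is an assumption for illustration and not necessarily the heuristic used in the exhibition. `layout_for` and `GridLayout` are hypothetical names.

```python
import math
from dataclasses import dataclass

FRAME_INTERVAL_S = 2.0   # one frame every 2 seconds (from the text)
FRAMES_PER_COLUMN = 6    # always six frames to a column (from the text)


@dataclass
class GridLayout:
    n_frames: int
    n_columns: int
    frame_w: float
    frame_h: float


def layout_for(duration_s: float, aspect: float, box_w: float, box_h: float) -> GridLayout:
    """Lay out one video's frames in columns of six, scaled to fit a fixed box.

    `aspect` is frame width / height. Assumption: every video gets the same
    box, so long videos get more, smaller frames rather than more wall space.
    """
    # Sample at t = 0, 2, 4, ... strictly before the end of the video.
    n_frames = max(1, math.ceil(duration_s / FRAME_INTERVAL_S))
    n_columns = math.ceil(n_frames / FRAMES_PER_COLUMN)
    # The largest frame height that fits both six rows and all the columns.
    frame_h = min(box_h / FRAMES_PER_COLUMN, box_w / (n_columns * aspect))
    return GridLayout(n_frames, n_columns, frame_h * aspect, frame_h)


# A 1-minute 4:3 video in an 800x600 box: 30 frames in 5 columns, limited by height.
print(layout_for(60, 4 / 3, 800, 600))
```

Under this rule a 10-minute video in the same box needs 50 columns, and its frames shrink to 12 units tall, which is one way the “same amount of space” trade-off shows up: duration is traded for frame size rather than for wall area.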

The gallery doesn’t have any windows, which made the laser calibration much easier, though my cheap Chinese-import green laser started losing its brightness just before the opening, and replacing the batteries didn’t reliably help. The red laser is almost entirely absorbed in dark sections of the printout.

I had forgotten how much novelty the laser-pointer magic has for people. (“Whoa” and “Cool” were common responses.) Intuitively, several visitors started treating the pointer as a high-precision light pen. It was simultaneously affirming to see people point right to specific frames and expect to pull them up, but also daunting to consider how much more work we will need to do before the laser lives up to, say, centimeter precision on a big wall. I want to do some experiments with scrims, frosted glass, and other materials that can be tracked from behind. I think that might also provide an easy pathway to capturing some touch gestures (maybe combined with a contact microphone? let’s harvest Julia’s brain for technique this summer…).
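To make “point at a frame and pull it up” concrete, here is a minimal sketch of one common pipeline, assumed here rather than taken from the installation: find the brightest pixel in the camera image as the laser dot, map it onto the wall with a planar homography estimated from four calibration points, then look up which printed frame it lands on. All names are hypothetical, and the column-by-column frame order (six per column, top to bottom) is an assumption. The precision problem described above lives mostly in the first two steps: a single brightest pixel and a four-point calibration are exactly the crude parts.

```python
def solve(A, b):
    """Gaussian elimination with partial pivoting (small dense systems only)."""
    n = len(A)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        if abs(M[p][c]) < 1e-12:
            raise ValueError("degenerate calibration points")
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def homography_from_4(src, dst):
    """3x3 homography (h33 = 1) mapping four camera points to four wall points."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve(A, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def apply_h(H, x, y):
    """Project a camera point (x, y) into wall coordinates."""
    u, v, w = (row[0] * x + row[1] * y + row[2] for row in H)
    return u / w, v / w


def brightest_spot(gray):
    """(x, y) of the brightest pixel in a 2D list: a crude laser-dot detector."""
    _, x, y = max((v, x, y) for y, row in enumerate(gray) for x, v in enumerate(row))
    return x, y


def cell_at(wall_x, wall_y, frame_w, frame_h, rows_per_column=6):
    """Frame index under a wall point, with frames filled column by column."""
    col, row = int(wall_x // frame_w), int(wall_y // frame_h)
    if col < 0 or not 0 <= row < rows_per_column:
        return None
    return col * rows_per_column + row
```

In use, the four calibration points would be the camera-image positions of the print's corners, and `dst` their wall coordinates; each camera frame then goes `brightest_spot` → `apply_h` → `cell_at`.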

I didn’t think a database view was quite appropriate for this setting, but I love the gesture towards making the internals visible. In green are the thresholded Kinect readings, and in the two corners are the live feeds of the Eye, one in raw and one in unwarped coordinates. It was very helpful to have it there when I was explaining the piece to people.

I don’t have any good photos/video of the projection yet, but here’s a shot showing the cameras and the semi-obscured screen. I set it up so that as you walked towards it down the hallway, the grid would show more and more frames, each smaller than the last, until (as pictured) every frame in the video was shown and it stopped moving. They gave me a very old Mac Mini to use, so I had to precompute everything; here are some of the frames animated through Finder. The Kinect didn’t handle the reflective floor and glass railing very well, and I had to massively kludge things together to get any monotonic depth readings. Working with lower-level sensing (e.g., ultrasound?) might have been more robust for the very simple input stream I was hoping to capture.
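The input stream described here is very simple: one distance in, one precomputed grid level out. A minimal sketch of that mapping, assuming a rolling median to suppress dropouts and reflection spikes; this is a generic smoothing technique, not the kludge actually used with the Kinect. `ProximityLevel` and its near/far thresholds are hypothetical.

```python
from collections import deque
from statistics import median


class ProximityLevel:
    """Map noisy depth readings (metres) to a discrete grid level.

    Level 0 = visitor far away (fewest, largest frames);
    level n_levels - 1 = visitor close (every frame shown, grid still).
    Each level would index one precomputed rendering.
    """

    def __init__(self, near_m: float, far_m: float, n_levels: int, window: int = 9):
        self.near_m, self.far_m, self.n_levels = near_m, far_m, n_levels
        self.readings = deque(maxlen=window)

    def update(self, depth_m):
        # Drop missing or zero readings (typical sensor dropouts).
        if depth_m is not None and depth_m > 0:
            self.readings.append(depth_m)
        if not self.readings:
            return 0
        # The median ignores isolated spikes, e.g. reflections off a shiny floor.
        d = median(self.readings)
        t = (self.far_m - d) / (self.far_m - self.near_m)  # 0.0 at far, 1.0 at near
        t = min(1.0, max(0.0, t))
        return round(t * (self.n_levels - 1))


p = ProximityLevel(near_m=1.0, far_m=5.0, n_levels=5, window=3)
for reading in [3.0, 3.0, 0.2]:  # the 0.2 m spike is outvoted by the median
    level = p.update(reading)
print(level)  # 2
```

A rolling median trades a few frames of latency for robustness. Monotonicity (never stepping back a level while someone approaches) would need extra hysteresis on top of this.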

As Dave and I joke about, the biggest insult for a CDG prototype is “well, maybe it’s art.” I was hoping to make this seem so spartan and functional that people in the art gallery would say “I can see why this is useful, but why is it art?” As ever, I find myself caught in between…

It was a very small show, with a very small audience (which is good, because the tech/concept are not even close to being ready for The Public). I didn’t have projections confirming selection over the grid, which was a clear deficiency and point of confusion. Still, several filmmakers, media artists, and theorists were around, and they were so appreciative to see nascent research in their field and to be able to talk about it and what it should turn into. At this stage of development, at least, it isn’t a threat to their processes or specialties. People gave me very positive feedback, but it wasn’t completely satisfying, because the work was still “impressive,” meaning that the tech had not come close to receding or disappearing.

I am deeply indebted to all of you for the contamination of my thoughts away from web/InterLace. (“InterSpace”? “PrinterLace?”)

Onward!

R.M.O.

[0] Something is very offensive about the use of the term “peripherals” to refer to computer I/O devices, as if our interface with computing were of negligible import compared, i.e., to The Computation.

In a big physical space it would be cool to react to gaze. “Eye hover” or something. :^)

On Feb 24, 2015, at 12:04 PM, Robert M Ochshorn wrote:

On Feb 13, 2015, at 2:37 PM, Toby Schachman wrote:

I understand intellectually why you need to shift the frames horizontally as you play them in time, but it's distracting. What does it look like to just fade back to the "start" frame for each cell, or even just a cut?

The problem with cuts on a grid is that it’s not one cut, it’s hundreds of cuts (or fades, or whatever). I’ve never liked the way that looks, whatever fades I’ve applied.

But your question got me thinking about interleaved frames, and the fact that only 15 years ago you couldn’t pause video into a still image on most devices! Pressing the “pause” button on a VCR or even a DVD player would leave you stuck between two frames. Sometimes the image would be mostly still, but usually you’d get a little flicker in the parts of the image with motion. I’m still experimenting within the parameter space of “which two frames every N frames” but am seeing some promising results. Here’s a representative example (also available online for the hard-of-gif’ing):

<lurch-1-8.gif>

Something is lost relative to the moving frames: when the frames are moving, I have a much easier time scanning my eyes around the image, but I think the moving frames max out at a certain number. This non-moving interleaving may be a good choice for when you want to see a lot of frames at once and still have a hint of motion.

RMO
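One reading of the “which two frames every N frames” parameter space, sketched under assumptions: each grid cell stands for a span of N source frames and flickers between that span's first frame and a second frame `offset` frames later, like a paused VCR. The names and the exact pairing rule are hypothetical; the experiments may pair frames differently.

```python
def interleaved_pair(cell: int, frames_per_cell: int, offset: int) -> tuple[int, int]:
    """The two source frames a cell alternates between.

    Assumption: cell i covers frames [i*N, (i+1)*N), shows its first frame,
    and pairs it with the frame `offset` later (clamped to stay in the span).
    """
    if frames_per_cell < 1:
        raise ValueError("frames_per_cell must be at least 1")
    start = cell * frames_per_cell
    return start, start + min(offset, frames_per_cell - 1)


def grid_frames(n_cells: int, tick: int, frames_per_cell: int, offset: int) -> list[int]:
    """Frame shown in every cell at a display tick: even ticks show the first
    frame of each pair, odd ticks the second, so only moving regions flicker."""
    frames = []
    for cell in range(n_cells):
        a, b = interleaved_pair(cell, frames_per_cell, offset)
        frames.append(a if tick % 2 == 0 else b)
    return frames


print(grid_frames(3, tick=0, frames_per_cell=8, offset=1))  # [0, 8, 16]
print(grid_frames(3, tick=1, frames_per_cell=8, offset=1))  # [1, 9, 17]
```

Small offsets give a subtle shimmer only where there is motion; larger offsets (up to N - 1) make the flicker more pronounced. Unlike the zigzag playback, no cell ever advances, so the grid reads as still.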