On Jan 7, 2015, at 2:53 PM, Glen Chiacchieri wrote:

Coincidentally enough, my friend has just made a beautiful little cassette tape simulator for a mixtape site he's making:

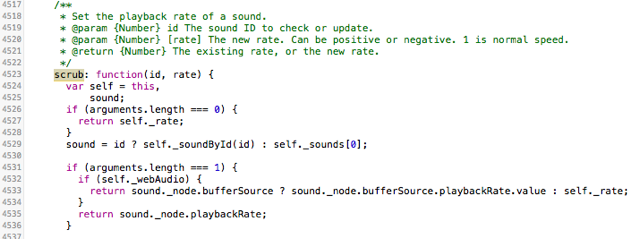

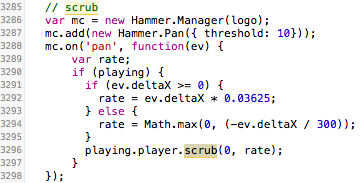

Try dragging on the tape. Other mixtapes here: http://jameszetlen.com/tapes/ (They're all really good!)

On Wed, Jan 7, 2015 at 4:16 PM, Robert M Ochshorn wrote:

I’m glad you enjoyed the resulting audio (CC’ing back the Dynamic Medium[0])—we’ve also talked many times about the “lack of physicality” in digital media. I’m still grasping at the right vocabulary, but lately (and in conversation with Bret) I’ve been fascinated by the dual concepts of “absolute” and “relative” manipulations. Almost all of the timelines and grids I’ve worked on provided affordances for modifying the absolute timecode, page, position, &c, but I realized I’d done very little work on relative adjustments.

Tape, on the other hand, has many affordances for relative manipulations, but is (notoriously) bad at absolute adjustments. Remember how annoying it was rewinding tapes to their beginning (“BE KIND / REWIND”)? Digital should be able to give us the best of both worlds, but too often (html5, quicktime x) the players are designed with neither mode of manipulation in mind.

I don’t think I completely understand what you mean by “honesty” but I’m very interested. Codec developers have a very interesting language to discuss human perceptual capabilities[1], which to them are the ultimate evaluation metric of their work. Success, then, is “fooling” the perceptual system into seeing form instead of pixel, motion instead of stillness. It doesn’t sound exactly honest—I wonder if honesty means giving control of the illusion to the viewer? Or if it means providing “explorable source code” to deconstruct the tool itself?

Tibetan singing bowls are very beautiful.

R.M.O.

[0] It’s our new group mailing list, and its products will (very!) soon adorn our lab with receipts, laser-powered labels, &c.

[1] eg.—Great consumer experiences are created by a convergence of sight, sound, and story. This paper presents an in-depth quantitative analysis of the neurobiology and optics of sight.
More specifically, it examines how principles of vision science can be used to predict the bit rates and video quality needed to make video on devices ranging from smartphones to ultrahigh-definition televisions (UHDTVs) a success. It presents the psychophysical concepts of simple acuity, hyperacuity, and Snellen acuity* to examine the visibility of compression artifacts for the MPEG-4/H.264 video compression standard. It looks at the newest emerging international compression standard for High-Efficiency Video Coding (HEVC). It will investigate how the various sizes of the new coding units in HEVC would be imaged on the retina and what that could mean in terms of the HEVC video quality and bit rates that would likely be needed to deliver entertainment-quality content to smartphones, tablets, high-definition televisions, 4K TVs, and UHDTVs.

*<220px-Snellen_chart.svg.png>

On Jan 6, 2015, at 11:01 PM, Dave Cerf wrote:

Not knowing about the tech behind this demo, I have to say that I really enjoy the resulting audio when tapping Plus and Minus. It’s just like speeding up or slowing down a record.

I was having a conversation with someone the other day about digital media and how its lack of physicality limits what one can do to experiment with it. For example, with a tape recorder, I can put my finger on the capstan and apply various levels of pressure to slow down the tape. Or I can rewind while keeping the playhead in place. I can manipulate the recording/playback medium with all of the things in the world around me. Or I can wave a magnet over various parts of a tape and see what happens to the audio—maybe it will become warbly and interesting. On the computer, I am limited to clickable buttons or menus provided by the interface designer. Or, if I am more clever, I am limited to code.

Codes allow me to experiment with simulations or algorithms, the former often being insufficient due to the limited hardware interfaces (an animation of a turning capstan and a pressure sensitive touchscreen would be the default implementation of a Tape Deck Simulation), and the latter typically reserved for Computer Programmers.

Perhaps what is lacking for digital media manipulation is domain specific languages. And perhaps those languages, if they existed, shouldn’t be limited to words and phrases, but also models that can be connected together via that language.

There is a small Tibetan singing bowl sitting on the table here. Turning the mallet against the rim of the bowl with just the right amount of friction creates a steady resonant frequency. I can add water to the bowl. I can hit the bowl with different materials. The world is my playground. How long would it take to build a digital simulation of this experience? What sliders would I choose to add to its onscreen interface?
Which sliders would I leave out, limiting other performers of this digital simulation?

What is the Digital best for? I suppose we’re still trying to figure that out? Is honesty the best policy with the Digital? Or is simulation, and then the resulting precision control and fungibility the ultimate goal? Perhaps we need to explore both directions simultaneously.

In your demo, I wonder: do I like it because of how it feels and sounds, or do I like it because it sounds like something I am already familiar with? What would someone’s experience be who has never had a record player? YouTube, for example, never turns into chipmunk audio when you fast forward.

On Dec 30, 2014, at 9:38 PM, Robert M Ochshorn wrote:

The Web Audio API is a scandal—total disaster. Maybe the worst part is that it is implemented everywhere now, from iOS to IE. Fortunately, they do slip a Turing Machine into the browser, so you can make minecraft mini-realities that behave with alternative macro-grains. Here’s the same thing as last time with the video tagging along, though it’s still floating outside of any context/gesture:

http://rmozone.com/snapshots/2014/12/backandforthaandv.html

I’m indeed conflicted about questions of high-level/low-level, maybe in a similar way to how Bret is “perversely” rather than “proudly” fond of the “primitive” texture of Dead Fish / DDV. The STEPS mock-ups are beautiful and very exciting—Knuth’s METAFONT and TeX books might be candidates for revitalizing with graphic and interactive listings.

My whole ambivalence may just need to resolve as Yet Another Case of “Both”: we should be thinking grandly from as high a position as we can muster, while at the same time exploring the “ground truth” that is to be found in an intimate understanding of various foundations. The Agre book has come up before and I’m excited to check it out—in particular, “Number” v. “Integer” is a bit of a sore point in JavaScript.
(Typed Arrays were tepidly introduced to support WebGL and some a/v processing, but never really fully/properly exposed.)

I had an interesting conversation with my father this morning about Building Information Modeling. He’s reading a book about it that is unfortunately (though not altogether shockingly) fixated on incompatible standards and implementations that are crippling the field, but all the same he is thinking of designing curricula to incorporate BIM into the Architecture program at Cornell. The BIM stuff is interesting, and I’m curious to learn more about it (have any of you played with Revit[0]?), but more interesting to me was learning of an early and unrealized dream my father had some 30 years ago that, instead of Frank Gehry scribbling sketches for his masterworks on hotel napkins—<Screen Shot 2014-12-30 at 11.58.53 PM.png>—and getting the engineers to figure out the details from there, one could imagine design tools that built up from a detailed model of the lowly 2x4 and support the design of buildings that don’t leak. (Apparently, abstractions cause buildings, as well as software, to leak.)

Your correspondent,
R.M.O.

[0] Architectural software is funny. Wikipedia is funny. Wikipedia’s writing on architectural software is hilarious:

Grasshopper features a fairly advanced GUI with a lot of features that are only rarely found in production software. It is not known however whether these elements improve or impede effective usage.

<Screen Shot 2014-12-31 at 12.29.17 AM.png>

On Dec 29, 2014, at 2:55 PM, Bret Victor wrote:

It’s amazing how “against the grain”[0] of web/media such a basic experiment as this is:

When I wanted to make the "guitar switch" in http://worrydream.com/ExplorableExplanations/ I took a look at the Web Audio API and recoiled in horror at the complexity. I had no idea what they were talking about, and I've implemented all that stuff myself.
Fortunately, I also read that the API (at the time) wasn't well supported and basically didn't work, so I did it in twenty lines of Flash.

Bedrock abstractions (such as "the only way to generate sound on the web") shouldn't have a grain. The libraries and languages that others build on top of them should have grains.

There's a bit in one of Stroustrup's books, where he mentions that a language designer constantly hears "This is a really common need -- it should go in the standard library", and he responds with, "No, it should go in some library. But not necessarily the standard library."

There’s a lot to disentangle from this tendency, which seems to continue unabated even today (and at CDG, &c).

I'm perversely fond of how low-level the Dead Fish and Drawing Dynamic Visualizations systems are -- they're kind of direct-manipulation geometric assembly languages. There's something about that purity that helps you understand the essence of the new concept, without being obscured by compiler or IDE "helpfulness".

Open source is appealing, at least in theory, because the abstractions provided are “descendable.” Unfortunately it’s painful to go down into the Linux kernel, &c

Yeah, you don't really want "open source" so much as "explorable source". You need to be able to descend and actually understand it.

I made some mockups for Alan's STEPS project, to try to suggest that the source code of the operating system should feel like an interactive book (with chapters, sections, etc. corresponding to functional components) where there's a high-level description/depiction of every module, and you can go deeper as needed, and this readable explorable description is the running source code of the system.